Pronthep Pipitsunthonsan

Identification of complex mixtures for Raman spectroscopy using a novel scheme based on a new multi-label deep neural network

Oct 29, 2020

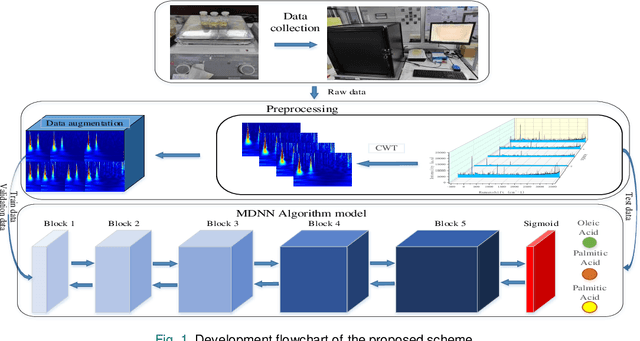

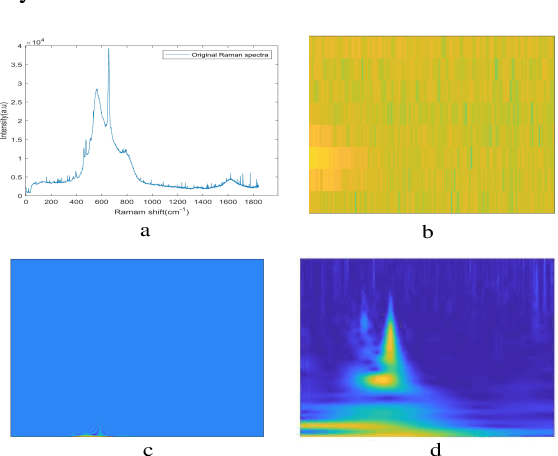

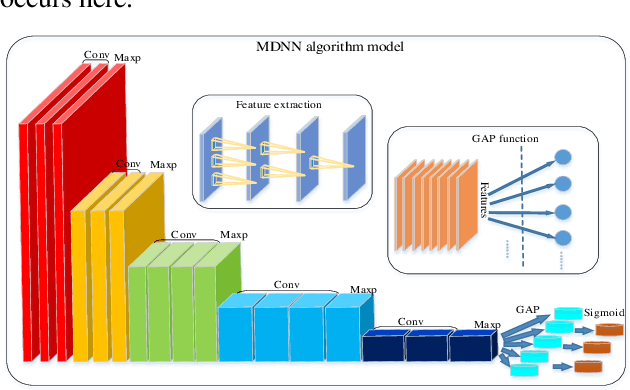

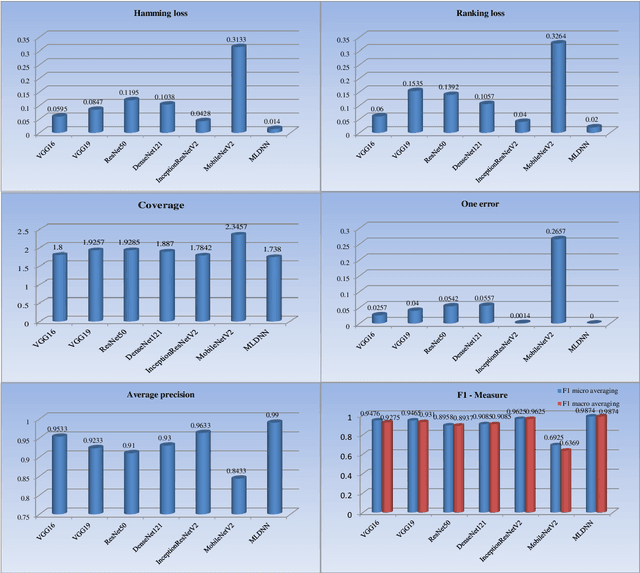

Abstract: Because of noise from fluorescence and additive white noise, as well as complicated spectral fingerprints, identifying complex mixture materials remains a major challenge in Raman spectroscopy applications. In this paper, we propose a new scheme based on a continuous wavelet transform (CWT) and a deep network for classifying complex mixtures. The scheme first transforms the noisy Raman spectrum into a two-dimensional scale map using the CWT. A multi-label deep neural network (MDNN) model is then applied to classify the materials. The proposed model accelerates feature extraction and expands the feature graph using a global average pooling layer. A sigmoid function is used in the last layer of the model. The MDNN model was trained, validated and tested with data collected from samples prepared from substances in palm oil. During training and validation, data augmentation is applied to overcome data imbalance and enrich the diversity of the Raman spectra. The test results show that the MDNN model outperforms previously proposed deep neural network models in terms of Hamming loss, one error, coverage, ranking loss, average precision, F1 macro averaging and F1 micro averaging. The average detection time of our model is 5.31 s, which is much faster than that of the previously proposed models.
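To make the multi-label output stage concrete, the following is a minimal sketch, not the authors' implementation. It assumes the last layer produces one raw score (logit) per candidate substance, applies a sigmoid to each score independently, and thresholds at 0.5. The function names, the threshold, and the toy label vectors are all hypothetical. Hamming loss is computed with its usual definition: the fraction of label slots predicted incorrectly.

```python
import math


def sigmoid(z):
    """Logistic function mapping a raw score to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_labels(logits, threshold=0.5):
    """Turn per-substance logits into independent 0/1 decisions.

    The 0.5 threshold is an assumption for illustration; the abstract
    does not state how sigmoid outputs are converted to labels.
    """
    return [1 if sigmoid(z) >= threshold else 0 for z in logits]


def hamming_loss(y_true, y_pred):
    """Fraction of (sample, label) slots where prediction and truth differ."""
    total = 0
    wrong = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            total += 1
            wrong += int(t != p)
    return wrong / total


# Toy example: two mixtures, three candidate substances each.
truth = [[1, 0, 1], [0, 1, 0]]
preds = [predict_labels([2.0, -1.0, -0.3]), predict_labels([-2.0, 1.5, -0.7])]
print(hamming_loss(truth, preds))
```

Unlike a softmax, the per-label sigmoid lets several substances be flagged at once, which is what a mixture requires.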

Method for classifying a noisy Raman spectrum based on a wavelet transform and a deep neural network

Sep 09, 2020

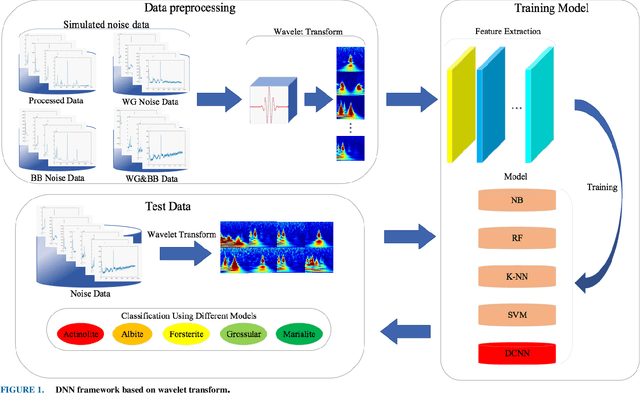

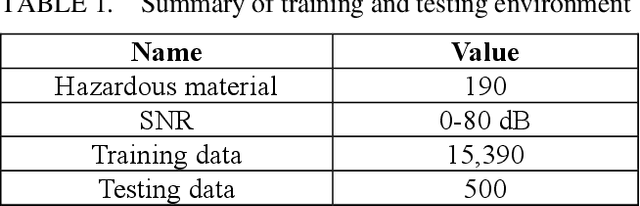

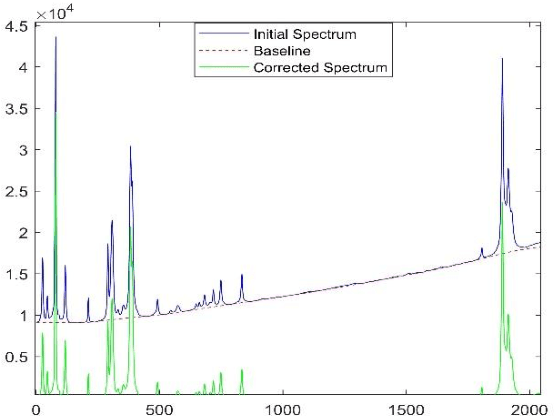

Abstract: This paper proposes a new framework based on a wavelet transform and a deep neural network for identifying noisy Raman spectra, since in practice it is relatively difficult to classify a spectrum in the presence of baseline noise and additive white Gaussian noise. The framework consists of two main engines. A wavelet transform is proposed as the framework front-end for transforming a 1-D noisy Raman spectrum into two-dimensional data. This two-dimensional data is fed to the framework back-end, which is a classifier. The optimum classifier is chosen by implementing several traditional machine learning (ML) and deep learning (DL) algorithms and investigating their classification accuracy and robustness. The four ML algorithms chosen were Naive Bayes (NB), a Support Vector Machine (SVM), a Random Forest (RF) and K-Nearest Neighbor (KNN), while a deep convolution neural network (DCNN) was chosen as the DL classifier. Noise-free, Gaussian-noise, baseline-noise, and mixed-noise Raman spectra were used to train and validate the ML and DCNN models. The optimum back-end classifier was obtained by testing the ML and DCNN models with several noisy Raman spectra (10-30 dB noise power). Based on the simulation, the accuracy of the DCNN classifier is 9% higher than that of the NB classifier, 3.5% higher than the RF classifier, 1% higher than the KNN classifier, and 0.5% higher than the SVM classifier. In terms of robustness to the mixed-noise scenarios, the framework with the DCNN back-end outperformed the other ML back-ends. The DCNN back-end achieved 90% accuracy at 3 dB SNR, while the NB, SVM, RF, and KNN back-ends required 27 dB, 22 dB, 27 dB, and 23 dB SNR, respectively. In addition, on the low-noise test data set, the F-measure score of the DCNN back-end exceeded 99.1%, while the F-measure scores of the other ML engines were below 98.7%.
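As an illustration of the front-end idea, here is a minimal sketch of turning a 1-D spectrum into a 2-D scale map with a continuous wavelet transform. It is written in plain Python under stated assumptions: the abstract does not name the mother wavelet, so a Ricker (Mexican hat) wavelet is assumed here, and the function names, the scale list, and the synthetic single-peak spectrum are hypothetical.

```python
import math


def ricker(t, scale):
    """Ricker (Mexican hat) wavelet sampled at offset t for a given scale."""
    x = t / scale
    norm = 2.0 / (math.sqrt(3.0 * scale) * math.pi ** 0.25)
    return norm * (1.0 - x * x) * math.exp(-0.5 * x * x)


def cwt_scalogram(signal, scales):
    """Return a 2-D list with one row per scale and one column per sample.

    Each row is the spectrum correlated with the wavelet at that scale.
    Samples outside the spectrum are treated as zero.
    """
    n = len(signal)
    rows = []
    for s in scales:
        half = int(math.ceil(5 * s))  # truncate the wavelet at about 5 scales
        kernel = [ricker(k, s) for k in range(-half, half + 1)]
        row = []
        for i in range(n):
            acc = 0.0
            for k in range(-half, half + 1):
                j = i + k
                if 0 <= j < n:
                    acc += signal[j] * kernel[k + half]
            row.append(acc)
        rows.append(row)
    return rows


# Synthetic spectrum: a single Gaussian peak centred at sample 50.
spectrum = [math.exp(-0.5 * ((i - 50) / 3.0) ** 2) for i in range(100)]
scale_map = cwt_scalogram(spectrum, scales=[1, 2, 3, 4])
print(len(scale_map), len(scale_map[0]))
```

The resulting rows-by-columns array can be treated like an image, which is what allows an image-style classifier to be used as the back-end.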

Noise Reduction Technique for Raman Spectrum using Deep Learning Network

Sep 09, 2020

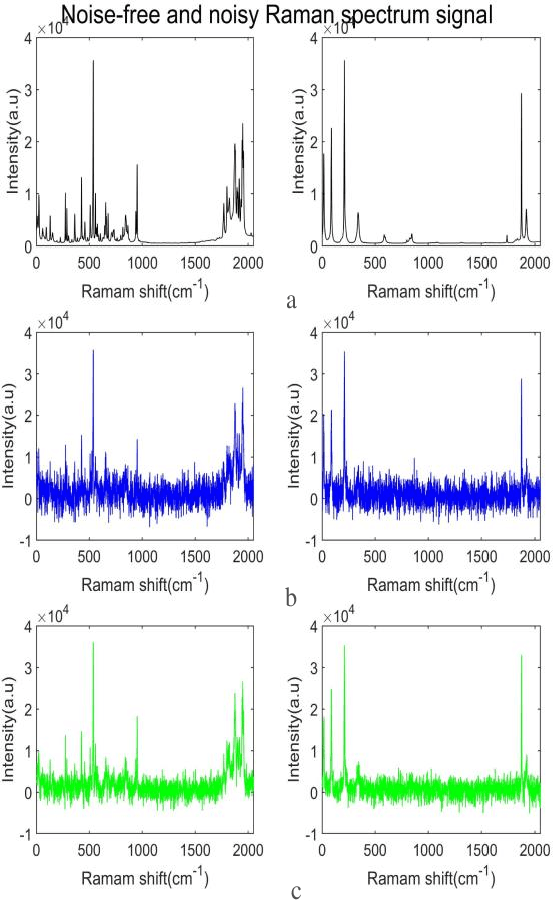

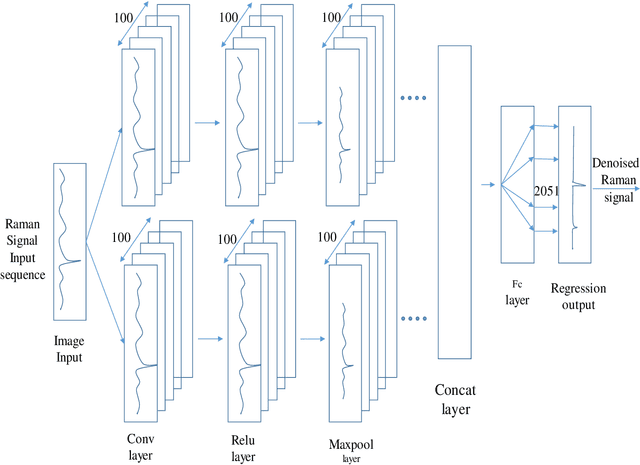

Abstract: In a normal indoor environment, noise in a Raman spectrum often conceals spectral peaks, making the spectrum difficult to interpret. This paper proposes a deep learning (DL) based noise reduction technique for Raman spectroscopy. The proposed DL network is developed with several training and test sets of noisy Raman spectra. The proposed technique is applied to denoise spectra, and its performance is compared with that of different wavelet noise reduction methods. Output signal-to-noise ratio (SNR), root-mean-square error (RMSE) and mean absolute percentage error (MAPE) are used as the performance evaluation indices. The output SNR of the proposed noise reduction technique is shown to be 10.24 dB greater than that of the wavelet noise reduction method, while its RMSE and MAPE are 292.63 and 10.09, respectively, which are much better than those of the wavelet noise reduction method.
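The three evaluation indices can be sketched as follows. This is a minimal illustration that assumes the common textbook definitions, since the abstract does not give the exact formulas. It also assumes a clean reference spectrum is available and contains no zero intensities (MAPE divides by the reference). The function names and sample values are hypothetical.

```python
import math


def rmse(clean, estimate):
    """Root-mean-square error between a reference and a denoised spectrum."""
    squared = sum((c - e) ** 2 for c, e in zip(clean, estimate))
    return math.sqrt(squared / len(clean))


def mape(clean, estimate):
    """Mean absolute percentage error, in percent.

    Assumes every reference intensity is non-zero.
    """
    ratios = sum(abs((c - e) / c) for c, e in zip(clean, estimate))
    return 100.0 * ratios / len(clean)


def output_snr_db(clean, estimate):
    """Output SNR in dB: reference power over residual error power."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((c - e) ** 2 for c, e in zip(clean, estimate))
    return 10.0 * math.log10(signal_power / noise_power)


# Toy intensities standing in for a clean and a denoised spectrum.
clean = [10.0, 20.0, 30.0, 40.0]
denoised = [11.0, 19.0, 33.0, 38.0]
print(rmse(clean, denoised), mape(clean, denoised), output_snr_db(clean, denoised))
```

A higher output SNR and lower RMSE and MAPE indicate better denoising under these definitions.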
