Pranav Mathur

Contextualized Spoken Word Representations from Convolutional Autoencoders

Jul 06, 2020

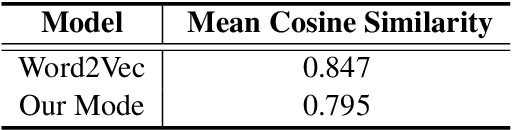

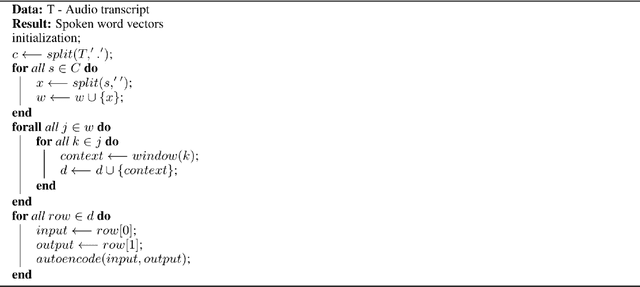

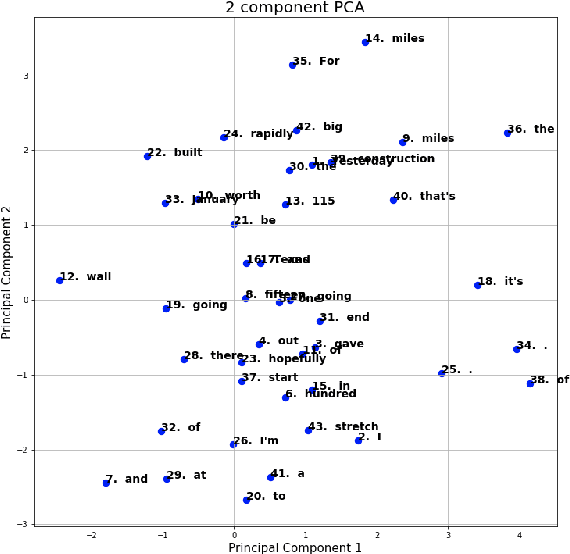

Abstract: Much recent work has gone into building sound language models for textual data, but comparatively little has been done for speech and audio data. In text, each word can be represented by a unique fixed-length vector. Comparable models for audio data could not only lead to significant advances in speech-related natural language processing tasks but also reduce the need to convert speech to text before performing them. This paper proposes a novel model architecture that produces contextualized, syntactically and semantically adequate representations of variable-length spoken words. The spoken word embeddings generated by the proposed model were validated by (1) inspecting the generated vector space, and (2) evaluating their performance on the downstream task of next spoken word prediction in speech.
