Prabir Barooah

A unified framework for coordination of thermostatically controlled loads

Aug 12, 2021

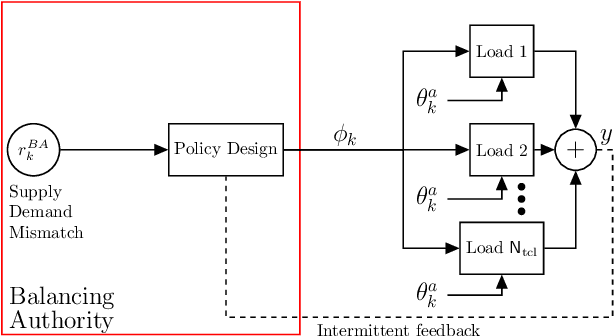

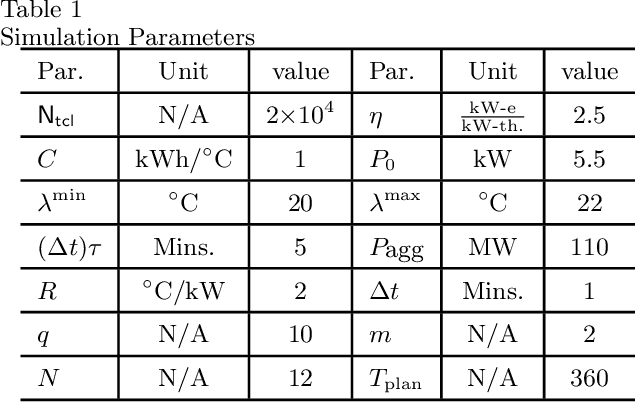

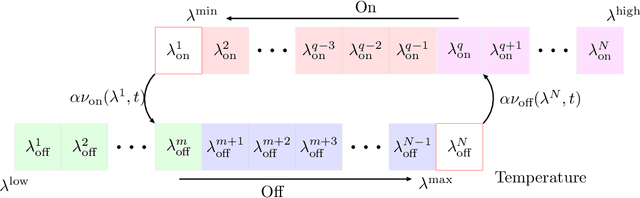

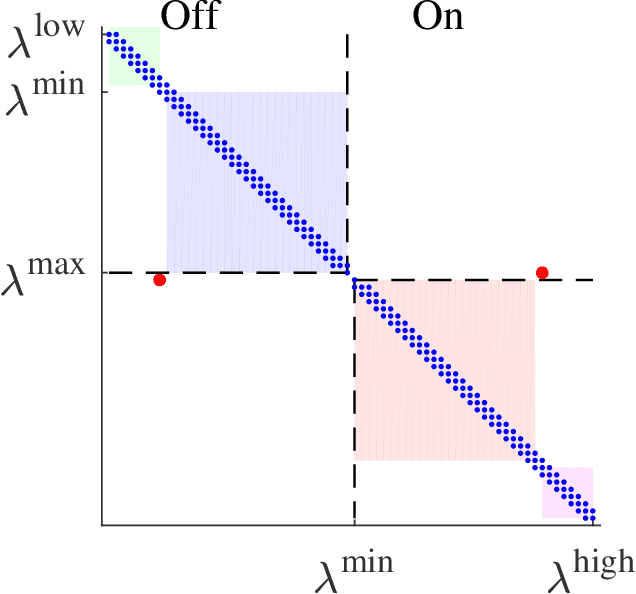

Abstract: A collection of thermostatically controlled loads (TCLs), such as air conditioners and water heaters, can vary their power consumption within limits to help the balancing authority of a power grid maintain demand-supply balance. Doing so requires the loads to coordinate their on/off decisions so that the aggregate power consumption profile tracks a grid-supplied reference. At the same time, each consumer's quality of service (QoS) must be maintained. While there is a large body of work on TCL coordination, existing methods have several limitations. One is that they do not provide guarantees on reference tracking performance and QoS maintenance. A second is that they do not provide a means to compute a suitable reference signal for the power demand of a collection of TCLs. In this work we provide a framework that addresses these weaknesses. The framework enables coordination of an arbitrary number of TCLs that: (i) is computationally efficient, (ii) is implementable at the TCLs with local feedback and low communication, and (iii) enables reference tracking by the collection while ensuring that temperature and cycling constraints are satisfied at every TCL at all times. The framework is based on a Markov model obtained by discretizing a pair of Fokker-Planck equations derived in earlier work by Malhame and Chong [21]. We then use this model to design randomized policies for TCLs. The balancing authority broadcasts the same policy to all TCLs, and each TCL implements this policy, which requires only local measurements, to make on/off decisions. Simulation results are provided to support these claims.
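To make the "broadcast policy, local decision" idea concrete, here is a minimal Python sketch of how a single cooling TCL might apply a randomized switching policy. It is not the paper's algorithm: the policy representation (a dict keyed by `(is_on, bin)`), the uniform temperature binning, and all names (`tcl_decide`, `t_min`, `t_max`, `n_bins`) are assumptions made for illustration, and the cycling (lock-out) constraint mentioned in the abstract is not modeled.

```python
import random

def tcl_decide(temp, is_on, policy, t_min, t_max, n_bins, rng=random):
    """Return the next on/off state of one cooling TCL (hypothetical sketch).

    policy[(is_on, b)] is an assumed representation: the probability of
    switching state when the TCL is on/off and its temperature is in bin b.
    """
    # Hard temperature limits override the randomized policy.
    if temp >= t_max:
        return True    # too warm: the cooler must be on
    if temp <= t_min:
        return False   # too cold: the cooler must be off

    # Map the locally measured temperature to a bin index.
    width = (t_max - t_min) / n_bins
    b = min(int((temp - t_min) / width), n_bins - 1)

    # Switch with the broadcast probability; otherwise keep the current state.
    if rng.random() < policy[(is_on, b)]:
        return not is_on
    return is_on
```

Because every TCL evaluates the same broadcast table against its own temperature measurement, no TCL-to-TCL communication is needed in this sketch; only the table changes when the reference changes.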

Detecting Separation in Robotic and Sensor Networks

Feb 16, 2011

Abstract: In this paper we consider the problem of detecting separation of agents from a base station in robotic and sensor networks. Such separation can be caused by mobility and/or failure of the agents. While separation/cut detection may be performed by passing messages between a node and the base in static networks, such a solution is impractical for networks with high mobility, since routes are constantly changing. We propose a distributed algorithm to detect separation from the base station. The algorithm consists of an averaging scheme in which every node updates a scalar state by communicating with its current neighbors. We prove that if a node is permanently disconnected from the base station, its state converges to $0$. If a node is connected to the base station in an average sense, even if not connected at any given instant, then we show that the expected value of its state converges to a positive number. Therefore, a node can detect whether it has been separated from the base station by monitoring its state. The effectiveness of the proposed algorithm is demonstrated through simulations, a real system implementation, and experiments involving both static and mobile networks.
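The behavior described in the abstract can be illustrated with a small Python sketch on a static graph. The update rule here is an assumption chosen for illustration, not necessarily the paper's exact rule: the base station holds its state at a fixed positive value, and every other node sets its state to the sum of its neighbors' states divided by (number of neighbors + 1). The names `step`, `simulate`, and `base_value` are hypothetical.

```python
def step(states, neighbors, base, base_value=1.0):
    """One synchronous round of the assumed averaging update."""
    new_states = {}
    for node in states:
        if node == base:
            # The base station acts as a constant source.
            new_states[node] = base_value
        else:
            nbrs = neighbors.get(node, ())
            # Dividing by (degree + 1) makes the update "leaky", so a
            # component with no source decays toward zero.
            new_states[node] = sum(states[j] for j in nbrs) / (len(nbrs) + 1)
    return new_states

def simulate(neighbors, base, steps=200):
    """Run the update from all-ones initial states and return final states."""
    states = {node: 1.0 for node in neighbors}
    for _ in range(steps):
        states = step(states, neighbors, base)
    return states

# Nodes 1 and 2 can reach base 0; nodes 3 and 4 form a separated component.
graph = {0: [1], 1: [0, 2], 2: [1], 3: [4], 4: [3]}
final = simulate(graph, base=0)
separated = {node for node, x in final.items() if x < 1e-6}
```

In this sketch the states of nodes 3 and 4 shrink toward zero while those of nodes 1 and 2 settle at positive values, so each node can flag separation by comparing its own state with a small threshold.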