Pouria Sarhadi

Large Language Model-based Decision-making for COLREGs and the Control of Autonomous Surface Vehicles

Nov 25, 2024

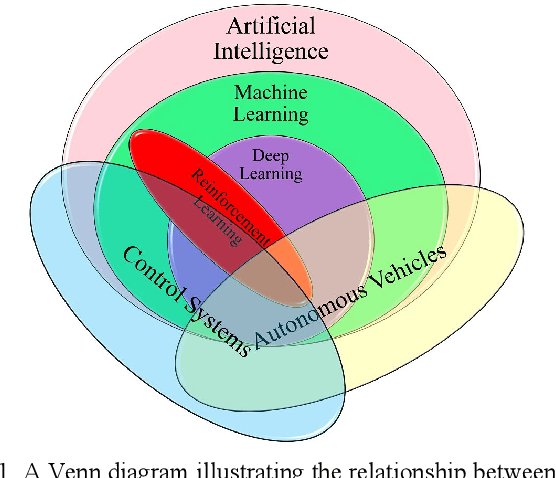

Abstract: In the field of autonomous surface vehicles (ASVs), devising decision-making and obstacle avoidance solutions that address the maritime COLREGs (Collision Regulations), which are primarily defined for human operators, has long been a pressing challenge. Recent advancements in explainable Artificial Intelligence (AI) and machine learning have shown promise in enabling human-like decision-making. Notably, significant developments have occurred in the application of Large Language Models (LLMs) to the decision-making of complex systems, such as self-driving cars. The textual and somewhat ambiguous nature of COLREGs (from an algorithmic perspective) poses challenges that align well with the capabilities of LLMs, suggesting that LLMs may soon become increasingly suitable for this application. This paper presents and demonstrates the first application of LLM-based decision-making and control for ASVs. The proposed method establishes a high-level decision-maker that uses online collision risk indices and key measurements to make decisions for safe manoeuvres. A tailored design and runtime structure is developed to support training and real-time action generation on a realistic ASV model. Local planning and control algorithms are integrated to execute the commands for waypoint following and collision avoidance at a lower level. To the authors' knowledge, this study represents the first attempt to apply explainable AI to the dynamic control problem of maritime systems while recognising the COLREGs, opening new avenues for research in this challenging area. Results obtained across multiple test scenarios demonstrate the system's ability to maintain online COLREGs compliance, accurate waypoint tracking, and feasible control, while providing human-interpretable reasoning for each decision.
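The abstract does not say which collision risk indices the decision-maker uses. As an illustration only, the sketch below computes two indices that are common in the collision avoidance literature: the time to the closest point of approach (TCPA) and the distance at that point (DCPA). The function name `cpa`, the constant-velocity assumption, and the flat 2D coordinates are assumptions of this sketch, not details from the paper.

```python
import math

def cpa(own_pos, own_vel, tgt_pos, tgt_vel):
    """Return (tcpa, dcpa) for two vessels assumed to keep constant velocity.

    Positions are (x, y) in metres, velocities are (vx, vy) in m/s.
    This is a hypothetical helper, not the paper's risk index.
    """
    # Position and velocity of the target relative to the own ship.
    rx, ry = tgt_pos[0] - own_pos[0], tgt_pos[1] - own_pos[1]
    vx, vy = tgt_vel[0] - own_vel[0], tgt_vel[1] - own_vel[1]
    v2 = vx * vx + vy * vy
    if v2 < 1e-12:
        # No relative motion: the separation never changes.
        return 0.0, math.hypot(rx, ry)
    # Time that minimises the separation; clamp to 0 if it lies in the past.
    tcpa = max(0.0, -(rx * vx + ry * vy) / v2)
    dcpa = math.hypot(rx + vx * tcpa, ry + vy * tcpa)
    return tcpa, dcpa
```

In a head-on encounter, for example, the DCPA approaches zero while the TCPA shrinks, which is the kind of numeric cue a high-level decision-maker could combine with other measurements.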

Adaptive formation motion planning and control of autonomous underwater vehicles using deep reinforcement learning

Apr 01, 2023

Abstract: Creating safe paths in unknown and uncertain environments is a challenging aspect of leader-follower formation control. In this architecture, the leader moves toward the target by taking optimal actions, while the followers must avoid obstacles and maintain their desired formation shape. Most studies in this field have examined formation control and obstacle avoidance separately. The present study proposes a new approach based on deep reinforcement learning (DRL) for end-to-end motion planning and control of under-actuated autonomous underwater vehicles (AUVs). The aim is to design optimal adaptive distributed controllers, based on an actor-critic structure, for AUV formation motion planning. This is accomplished by controlling the speed and heading of the AUVs. Two approaches to obstacle avoidance are deployed. In the first approach, the goal is to design control policies for the leader and followers such that each learns its own collision-free path, while the followers also adhere to an overall formation maintenance policy. In the second approach, only the leader learns the control policy and safely leads the whole group towards the target; here, the control policy of the followers is to maintain the predetermined distance and angle. The robustness of the proposed method is shown under realistically perturbed conditions, including ocean currents, communication delays, and sensing errors. The efficiency of the algorithms has been evaluated and validated through a number of computer simulations.
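The second approach has followers hold a predetermined distance and angle relative to the leader. A minimal geometric sketch of that formation reference is given below. It assumes a planar setting and an angle measured from the leader's heading; the function name `follower_reference` and its parameters are hypothetical, and the paper's learned controllers are not reproduced here.

```python
import math

def follower_reference(leader_x, leader_y, leader_heading, distance, angle):
    """Desired follower position for a leader-follower formation.

    leader_heading and angle are in radians; angle is measured from the
    leader's heading (an assumption of this sketch), so angle = pi places
    the follower directly behind the leader.
    """
    bearing = leader_heading + angle
    return (leader_x + distance * math.cos(bearing),
            leader_y + distance * math.sin(bearing))
```

A follower's speed and heading controller would then steer towards this reference point as the leader moves.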

A Survey of Recent Machine Learning Solutions for Ship Collision Avoidance and Mission Planning

Jul 15, 2022

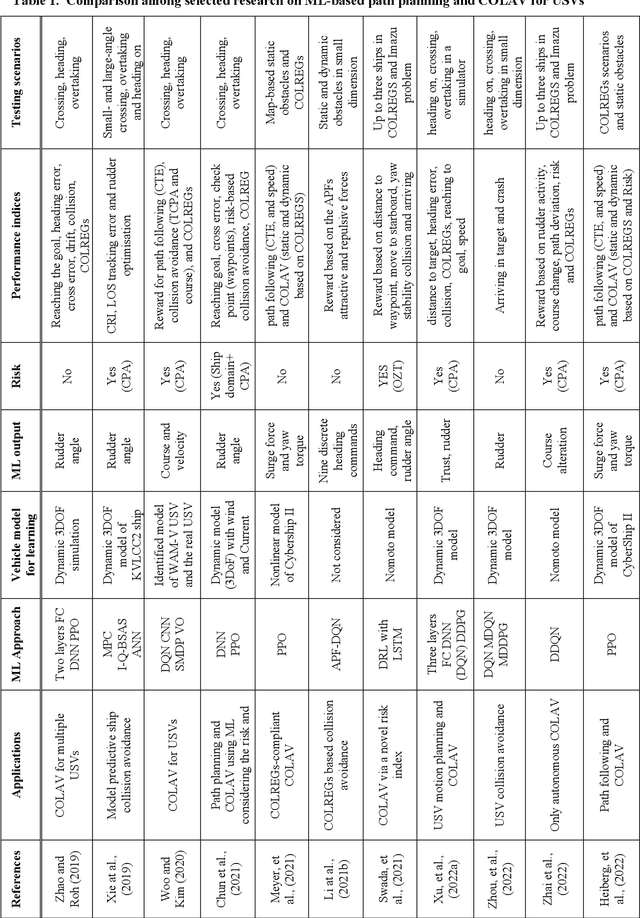

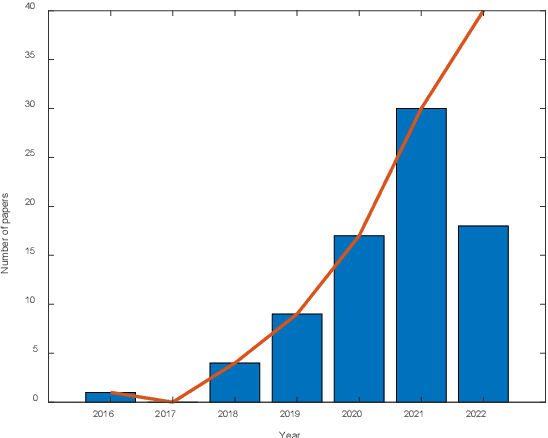

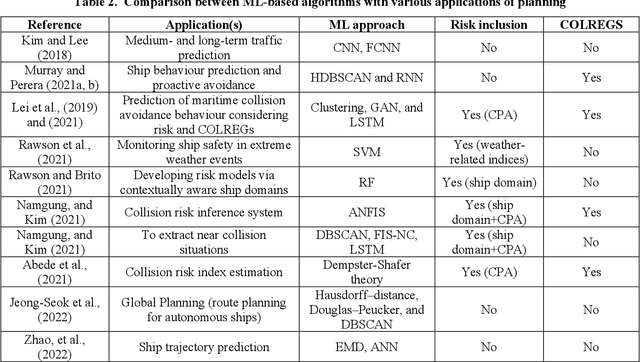

Abstract: Machine Learning (ML) techniques have gained significant traction as a means of improving the autonomy of marine vehicles over the last few years. This article surveys the recent ML approaches utilised for ship collision avoidance (COLAV) and mission planning. Following an overview of the ever-expanding ML exploitation for maritime vehicles, key topics in the mission planning of ships are outlined. Notable papers with direct and indirect applications to the COLAV subject are technically reviewed and compared. Critiques, challenges, and future directions are also identified. The outcome clearly demonstrates the thriving research in this field, even though commercial marine ships incorporating machine intelligence able to perform autonomously under all operating conditions are still a long way off.
