Philipp Blättner

DMFC-GraspNet: Differentiable Multi-Fingered Robotic Grasp Generation in Cluttered Scenes

Aug 16, 2023

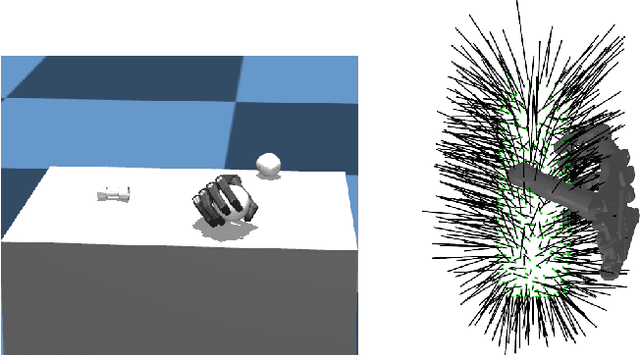

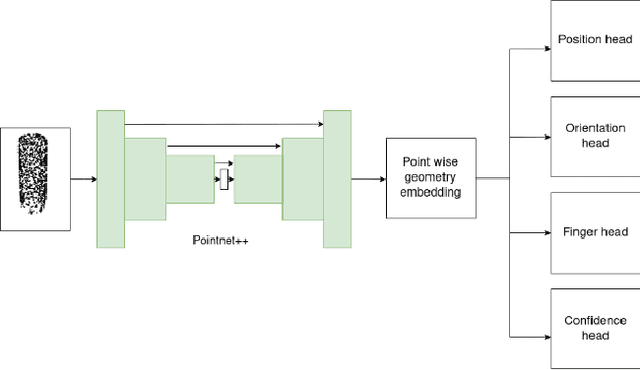

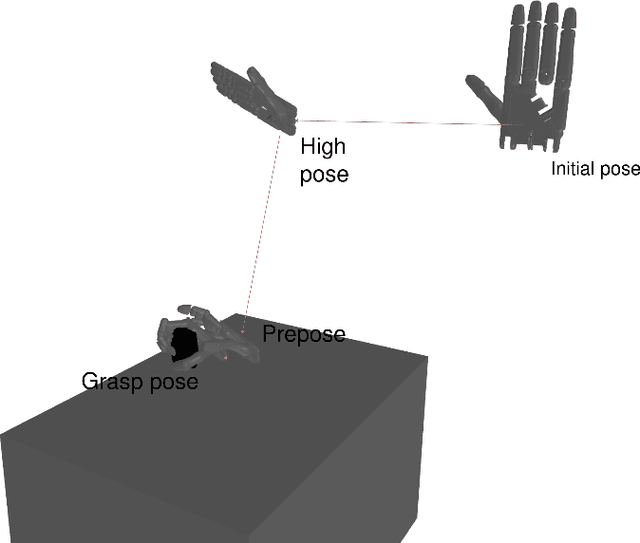

Abstract: Robotic grasping is a fundamental skill required for object manipulation in robotics. Multi-fingered robotic hands, which mimic the structure of the human hand, can potentially perform complex object manipulation. Nevertheless, current techniques for multi-fingered robotic grasping frequently predict only a single grasp per inference pass, which limits both computational efficiency and versatility, i.e., they yield a unimodal grasp distribution. This paper proposes a differentiable multi-fingered grasp generation network (DMFC-GraspNet) with three main contributions to address this challenge. Firstly, a novel neural grasp planner is proposed, which predicts a new grasp representation to enable versatile and dense grasp predictions. Secondly, a scene creation and label mapping method is developed for dense labeling of multi-fingered robotic hands, which allows a dense association of ground truth grasps. Thirdly, we propose to train DMFC-GraspNet end-to-end using a forward-backward automatic differentiation approach with a supervised loss, a differentiable collision loss, and a generalized Q1 grasp metric loss. The proposed approach is evaluated with the Shadow Dexterous Hand in MuJoCo simulation, with ablations over different choices of loss functions. The results demonstrate the effectiveness of the proposed approach in predicting versatile and dense grasps, and in advancing the field of multi-fingered robotic grasping.
