Peter W. Sauer

Optimal Tap Setting of Voltage Regulation Transformers Using Batch Reinforcement Learning

Jul 29, 2018

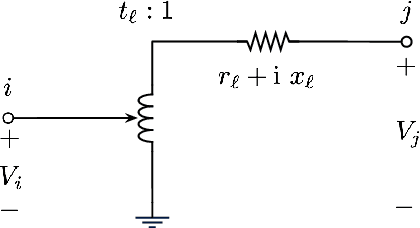

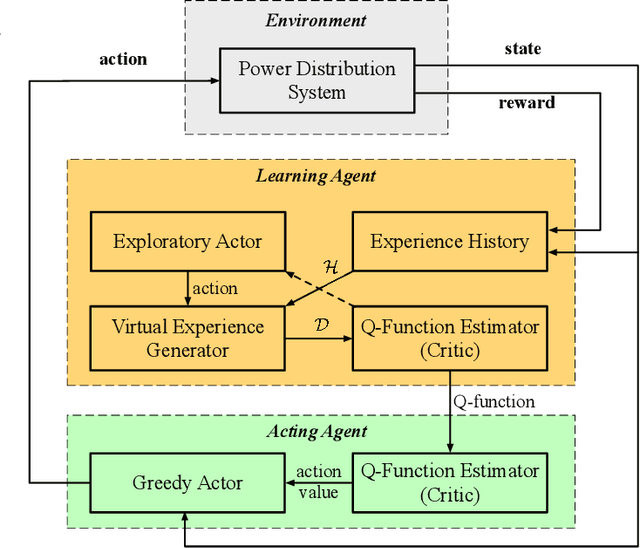

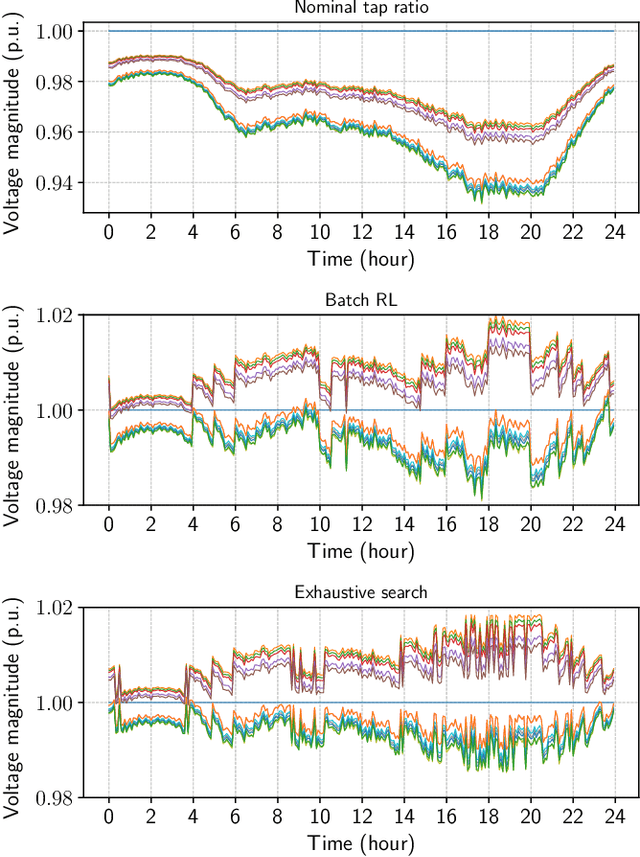

Abstract: In this paper, we address the problem of setting the tap positions of voltage regulation transformers, also referred to as load tap changers (LTCs), in radial power distribution systems under uncertain load dynamics. The objective is to find a policy that determines the optimal tap positions, i.e., those that minimize the voltage deviation across the system, based only on voltage magnitude measurements and topology information. To this end, we formulate the problem as a Markov decision process (MDP) and propose a batch reinforcement learning (RL) algorithm to solve it. By taking advantage of a linearized power flow model, we propose an effective algorithm to estimate the voltage magnitudes under different tap settings, which allows the RL algorithm to explore the state and action space freely offline without impacting the real power system. To circumvent the "curse of dimensionality" resulting from the large state and action space, we propose a sequential learning algorithm based on least squares policy iteration (LSPI) that learns an action value function for each LTC, from which the optimal tap positions can be determined directly. Numerical simulations on the IEEE 13-bus and 123-bus distribution test feeders validate the effectiveness of the proposed algorithm.
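The abstract does not specify the MDP, the feature basis, or the linearized power flow model, so the following is only a toy sketch of generic least squares policy iteration (LSTD-Q policy evaluation alternated with greedy improvement) run offline on a batch for a single hypothetical LTC. Everything in the model is an assumption, not the paper's method: a tap step of 0.00625 p.u. over positions -8 to +8, a "raw" bus voltage (the voltage at neutral tap) driven by a hypothetical AR(1) load process, the additive linear tap model v = v_raw + step * tap standing in for the linearized power flow, a squared-deviation reward, and a per-tap quadratic basis. The names `make_batch`, `lstdq`, `lspi`, and `greedy_tap` are hypothetical.

```python
import numpy as np

# Toy, hypothetical single-LTC model (assumptions, not taken from the paper):
TAP_STEP = 0.00625                    # p.u. voltage change per tap step (assumed)
TAPS = np.arange(-8, 9)               # 17 tap positions, -8..+8 (assumed)
GAMMA = 0.9                           # discount factor (assumed)
V_MEAN, RHO, NOISE = 0.97, 0.8, 0.01  # hypothetical AR(1) load-driven voltage process


def reward(v_raw, tap):
    """Negative squared deviation from 1.0 p.u. (in percent), using the
    assumed linear tap model v = v_raw + TAP_STEP * tap."""
    v = v_raw + TAP_STEP * tap
    return -(100.0 * (v - 1.0)) ** 2


def basis(v_raw):
    """Quadratic state basis [1, x, x^2] of the normalized raw voltage."""
    x = (np.asarray(v_raw, dtype=float) - V_MEAN) / 0.02
    return np.stack([np.ones_like(x), x, x * x], axis=-1)


def greedy_tap(W, v_raw):
    """Tap position maximizing Q(s, a) = W[a] @ basis(s)."""
    return int(TAPS[np.argmax(W @ basis(v_raw))])


def make_batch(n_steps=400, seed=0):
    """Offline batch: a simulated 'historical' raw-voltage trajectory in which
    every tap position is evaluated with the linear model at every step."""
    rng = np.random.default_rng(seed)
    v = np.empty(n_steps + 1)
    v[0] = V_MEAN
    for t in range(n_steps):
        v[t + 1] = V_MEAN + RHO * (v[t] - V_MEAN) + NOISE * rng.standard_normal()
    n_a = len(TAPS)
    S = np.repeat(v[:-1], n_a)                # state s_t, repeated once per action
    S_next = np.repeat(v[1:], n_a)            # next state s_{t+1}
    A_idx = np.tile(np.arange(n_a), n_steps)  # action index for each sample
    R = reward(S, TAPS[A_idx])
    return S, A_idx, R, S_next


def lstdq(S, A_idx, R, S_next, W, ridge=1e-6):
    """LSTD-Q: evaluate the greedy policy of W by solving A w = b."""
    n_a, n, k = len(TAPS), len(S), 3 * len(TAPS)
    B, Bn = basis(S), basis(S_next)
    a_next = np.argmax(Bn @ W.T, axis=1)  # greedy next actions under current W
    Phi = np.zeros((n, k))
    Phi_next = np.zeros((n, k))
    rows = np.arange(n)
    for j in range(3):                    # place each sample's basis in its action's block
        Phi[rows, 3 * A_idx + j] = B[:, j]
        Phi_next[rows, 3 * a_next + j] = Bn[:, j]
    A = Phi.T @ (Phi - GAMMA * Phi_next) + ridge * np.eye(k)
    b = Phi.T @ R
    return np.linalg.solve(A, b).reshape(n_a, 3)


def lspi(batch, max_iter=20):
    """Least squares policy iteration: alternate LSTD-Q evaluation and greedy
    improvement until the weights stop changing."""
    W = np.zeros((len(TAPS), 3))
    for _ in range(max_iter):
        W_new = lstdq(*batch, W)
        if np.allclose(W_new, W):
            return W_new
        W = W_new
    return W


if __name__ == "__main__":
    W = lspi(make_batch())
    for v in (0.95, 0.97, 0.99, 1.01):
        print(f"raw voltage {v:.2f} p.u. -> tap {greedy_tap(W, v):+d}")
```

Because the batch evaluates every tap at every recorded state with a model rather than on the real feeder, it mirrors the idea of offline exploration described above. In this toy the tap choice does not influence the next raw voltage, so the learned policy reduces to picking the tap closest to (1 - v_raw) / TAP_STEP, clipped to the tap range; the paper's sequential per-LTC learning for multiple interacting LTCs is not reproduced here.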
