Penny Sweetser

Large Language Models and Video Games: A Preliminary Scoping Review

Mar 05, 2024

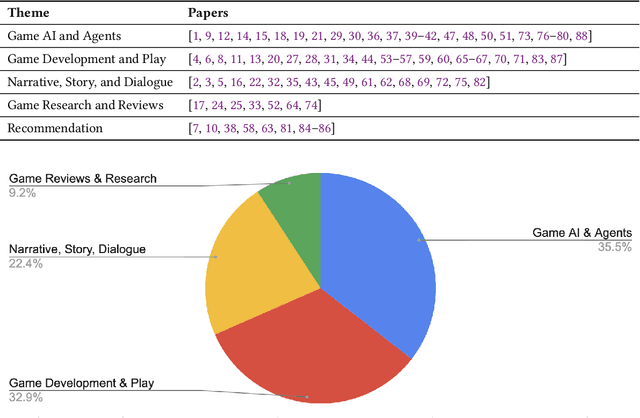

Abstract: Large language models (LLMs) hold interesting potential for the design, development, and research of video games. Building on decades of prior research on generative AI in games, many researchers have moved quickly to investigate the power and potential of LLMs for games. Given the recent spike in LLM-related research in games, there is already a wealth of relevant research to survey. To capture a snapshot of the state of LLM research in games, and to help lay the foundation for future work, we carried out an initial scoping review of relevant papers published so far. In this paper, we review 76 papers published between 2022 and early 2024 on LLMs and video games, with key focus areas in game AI, game development, narrative, and game research and reviews. Our paper provides an early view of the state of the field and lays the groundwork for future research and reviews on this topic.

Atari-5: Distilling the Arcade Learning Environment down to Five Games

Oct 05, 2022

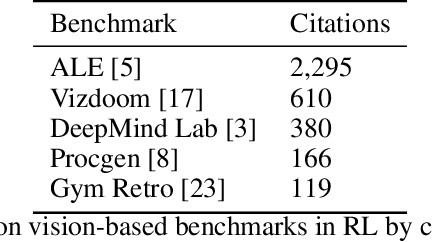

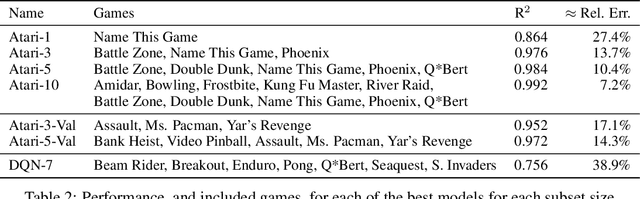

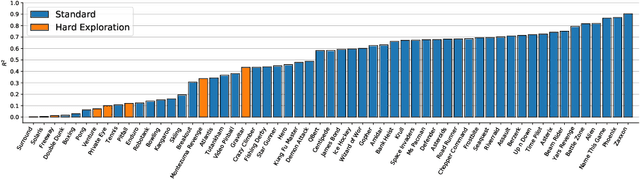

Abstract: The Arcade Learning Environment (ALE) has become an essential benchmark for assessing the performance of reinforcement learning algorithms. However, the computational cost of generating results on the entire 57-game dataset limits ALE's use and makes reproducing many results infeasible. We propose a novel solution to this problem in the form of a principled methodology for selecting small but representative subsets of environments within a benchmark suite. We applied our method to identify a subset of five ALE games, called Atari-5, which produces 57-game median score estimates within 10% of their true values. Extending the subset to 10 games recovers 80% of the variance in log-scores for all games within the 57-game set. We show that this level of compression is possible due to a high degree of correlation between many of the games in ALE.

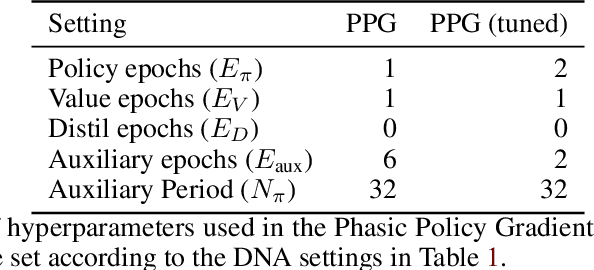

DNA: Proximal Policy Optimization with a Dual Network Architecture

Jun 20, 2022

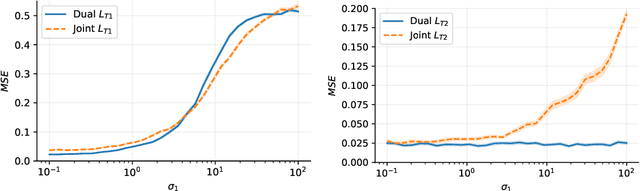

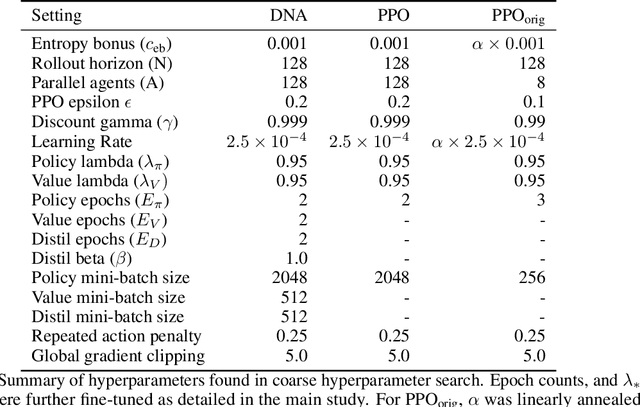

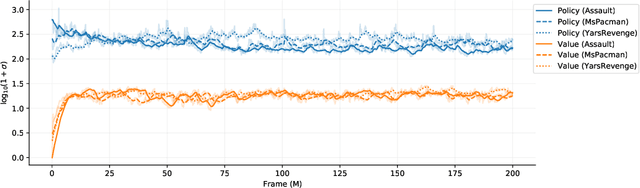

Abstract: This paper explores the problem of simultaneously learning a value function and policy in deep actor-critic reinforcement learning models. We find that the common practice of learning these functions jointly is sub-optimal, due to an order-of-magnitude difference in noise levels between these two tasks. Instead, we show that learning these tasks independently, but with a constrained distillation phase, significantly improves performance. Furthermore, we find that the policy gradient noise level can be decreased by using a lower-*variance* return estimate, whereas the value learning noise level decreases with a lower-*bias* estimate. Together, these insights inform an extension to Proximal Policy Optimization that we call *Dual Network Architecture* (DNA), which significantly outperforms its predecessor. DNA also exceeds the performance of the popular Rainbow DQN algorithm on four of the five environments tested, even under more difficult stochastic control settings.
