Pegah Fakhari

The detour problem in a stochastic environment: Tolman revisited

Sep 27, 2017

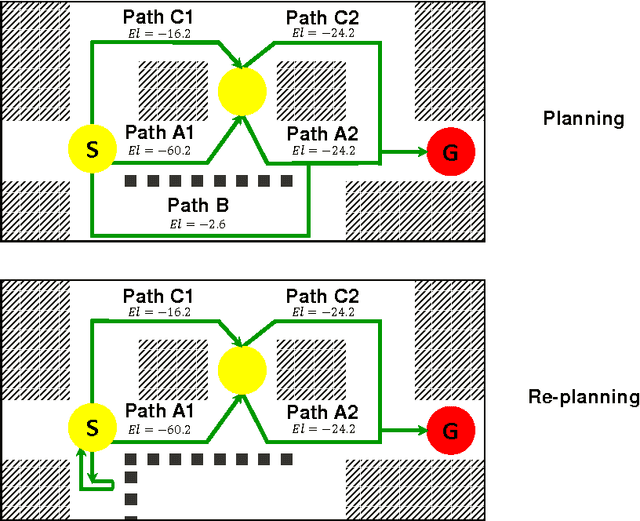

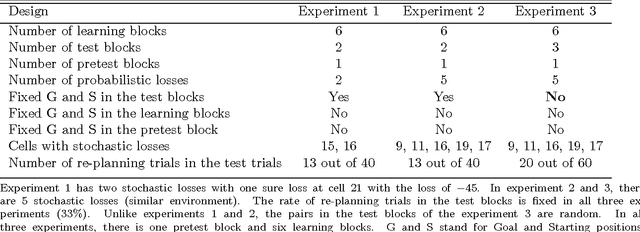

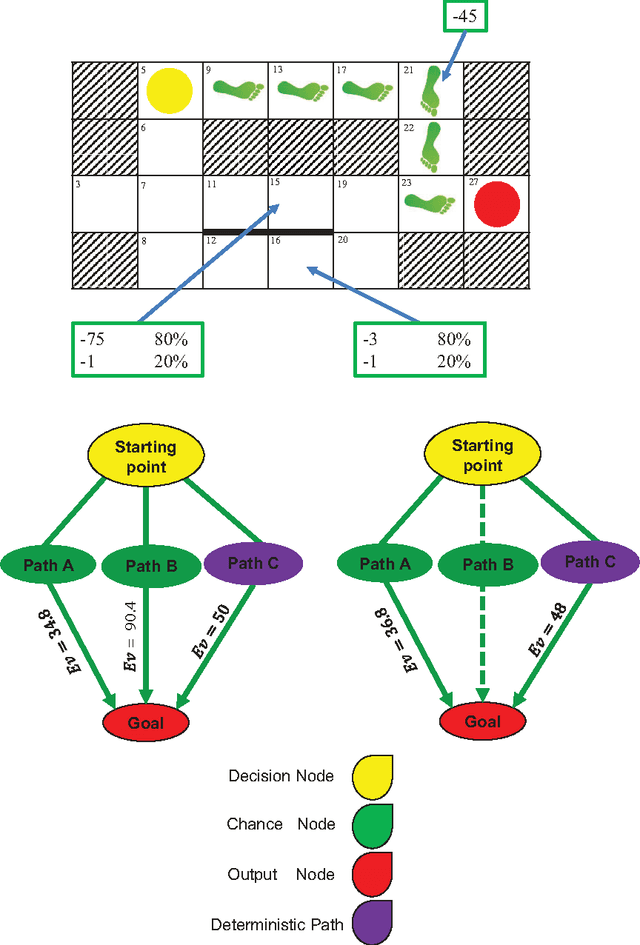

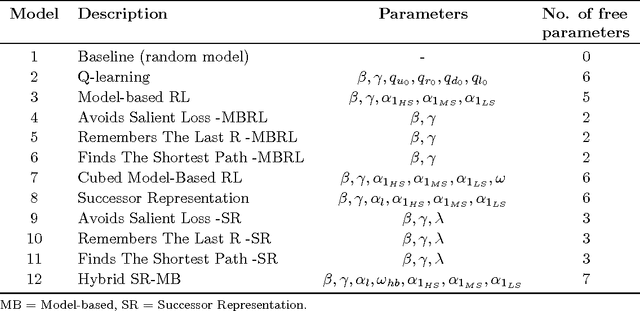

Abstract:We designed a grid world task to study human planning and re-planning behavior in an unknown stochastic environment. In our grid world, participants were asked to travel from a random starting point to a random goal position while maximizing their reward. Because they were not familiar with the environment, they needed to learn its characteristics from experience to plan optimally. Later in the task, we randomly blocked the optimal path to investigate whether and how people adjust their original plans to find a detour. To this end, we developed and compared 12 different models. These models were different on how they learned and represented the environment and how they planned to catch the goal. The majority of our participants were able to plan optimally. We also showed that people were capable of revising their plans when an unexpected event occurred. The result from the model comparison showed that the model-based reinforcement learning approach provided the best account for the data and outperformed heuristics in explaining the behavioral data in the re-planning trials.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge