Pawel Marcin Kaminski

SNR optimization of multi-span fiber optic communication systems employing EDFAs with non-flat gain and noise figure

Jun 07, 2021

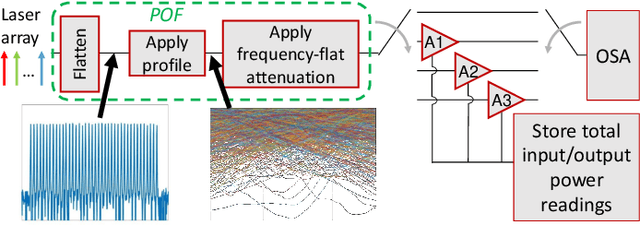

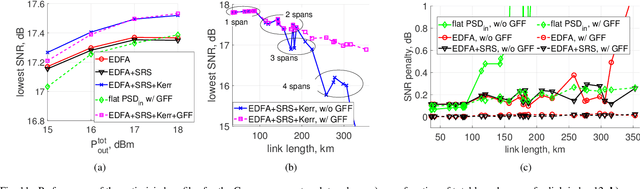

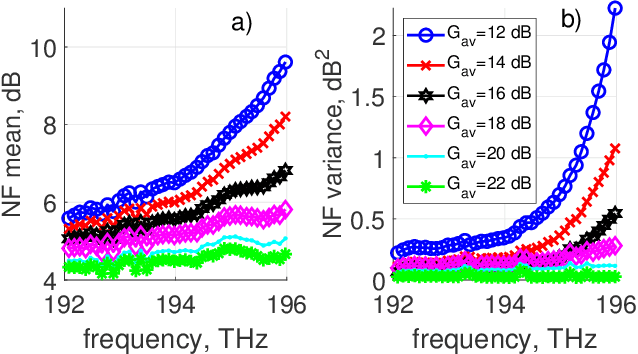

Abstract: Throughput optimization of optical communication systems is a key challenge for current optical networks. The use of gain-flattening filters (GFFs) simplifies the problem at the cost of insertion loss, higher power consumption and potentially poorer performance. In this work, we propose a component-wise model of a multi-span transmission system for signal-to-noise ratio (SNR) optimization. A machine-learning-based model is trained for the gain and noise figure spectral profiles of a C-band amplifier without a GFF. This model is combined with the Gaussian noise (GN) model for nonlinearities in optical fibers, including stimulated Raman scattering, and with the implementation penalty spectral profile measured back-to-back, in order to predict the SNR in each channel of a multi-span wavelength division multiplexed (WDM) system. All basic components in the system model are differentiable, which allows gradient-descent-based optimization of a system of arbitrary configuration in terms of number of spans and length per span. When the input power profile is optimized for flat and maximized received SNR per channel, the minimum performance in an arbitrary 3-span experimental system is improved by up to 8 dB with respect to a system with a flat input power profile. An SNR flatness down to 1.2 dB is simultaneously achieved. The model and optimization methods are used to optimize the performance of an example core network, and a gain of 0.2 dB is shown with respect to solutions that do not take nonlinearities into account. The method is also shown to be beneficial for systems with ideal gain flattening, achieving up to 0.3 dB of gain with respect to a flat input power profile.
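The idea of chaining per-channel component models and then tuning the launch power profile by gradient descent can be illustrated with a small toy sketch. This is not the authors' model: the machine-learning gain/noise-figure model is replaced by fixed, hypothetical per-channel tables (`GAIN_DB`, `NF_DB`), the GN model is replaced by a crude proxy `ETA * P_i * (sum_j P_j)^2` with no stimulated Raman scattering, the back-to-back implementation penalty is a hypothetical `SNR_TRX_DB` table, gradients come from finite differences rather than automatic differentiation, and the cost function (sum of inverse SNRs) is an assumption, since the abstract does not state the exact objective. All parameter values are illustrative, not measured data.

```python
import math

# --- Hypothetical toy parameters (illustrative only, NOT the paper's data) ---
N_SPANS = 3
SPAN_LOSS_DB = 20.0
GAIN_DB = [20.6, 20.1, 19.6, 19.9, 20.4]      # non-flat EDFA gain per channel (no GFF)
NF_DB = [5.6, 5.2, 4.9, 5.0, 5.3]             # non-flat noise figure per channel
SNR_TRX_DB = [19.0, 19.5, 19.5, 19.5, 19.0]   # back-to-back implementation-penalty SNR
ETA = 2e-5                                    # crude NLI coefficient per span, 1/mW^2
H_NU_B_MW = 6.626e-34 * 193.4e12 * 32e9 * 1e3  # h*nu*B in mW (193.4 THz, 32 GHz assumed)


def db2lin(x):
    return 10.0 ** (x / 10.0)


def channel_snrs(launch_dbm):
    """Propagate signal and noise power per channel through N_SPANS spans; return linear SNRs."""
    sig = [db2lin(p) for p in launch_dbm]  # signal power in mW at the first span input
    noise = [0.0] * len(sig)
    loss = db2lin(SPAN_LOSS_DB)
    for _ in range(N_SPANS):
        total = sum(sig)
        # Crude GN-style NLI proxy generated at the span input (no SRS, no frequency dependence)
        nli = [ETA * s * total ** 2 for s in sig]
        for i in range(len(sig)):
            g = db2lin(GAIN_DB[i])
            # Fiber loss then non-flat amplifier gain; ASE at amp output ~ NF*h*nu*B*G (high-gain approx.)
            sig[i] = sig[i] * g / loss
            noise[i] = (noise[i] + nli[i]) * g / loss + db2lin(NF_DB[i]) * H_NU_B_MW * g
    # Combine the line SNR with the transceiver SNR: noise-to-signal ratios add
    return [1.0 / (n / s + 1.0 / db2lin(t)) for s, n, t in zip(sig, noise, SNR_TRX_DB)]


def objective(launch_dbm):
    """Assumed cost: sum of inverse SNRs, which is smooth and dominated by the worst channels."""
    return sum(1.0 / s for s in channel_snrs(launch_dbm))


def optimize(launch_dbm, steps=200, lr=50.0, h=1e-4):
    """Gradient descent on per-channel launch powers (dBm) with a backtracking step size."""
    p = list(launch_dbm)
    f = objective(p)
    for _ in range(steps):
        # Central finite-difference gradient (stand-in for automatic differentiation)
        grad = []
        for i in range(len(p)):
            up = p[:]
            dn = p[:]
            up[i] += h
            dn[i] -= h
            grad.append((objective(up) - objective(dn)) / (2 * h))
        # Halve the step until the objective decreases; keep p unchanged otherwise
        step = lr
        while step > 1e-6:
            cand = [pi - step * gi for pi, gi in zip(p, grad)]
            fc = objective(cand)
            if fc < f:
                p, f = cand, fc
                break
            step /= 2
    return p


flat = [0.0] * len(GAIN_DB)  # flat 0 dBm per channel as the starting point
opt = optimize(flat)
for name, prof in (("flat", flat), ("optimized", opt)):
    snr_db = [10 * math.log10(s) for s in channel_snrs(prof)]
    print(f"{name}: min SNR {min(snr_db):.2f} dB, flatness {max(snr_db) - min(snr_db):.2f} dB")
```

Because the toy gain tilt accumulates over the spans, a flat launch profile does not give a flat received SNR, and the optimizer redistributes launch power across channels. Parameterizing the powers in dBm keeps them positive without explicit constraints; the backtracking step guarantees the assumed objective never increases.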
