Paul B. Kantor

Can We Distinguish Machine Learning from Human Learning?

Oct 08, 2019

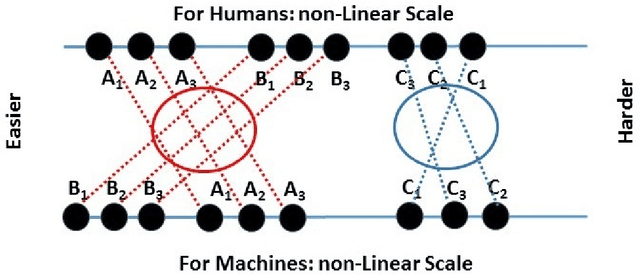

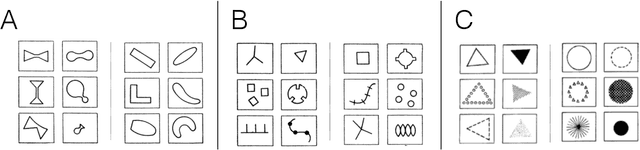

Abstract: What makes a task relatively more or less difficult for a machine than for a human? Much AI/ML research has focused on expanding the range of tasks that machines can do, with an emphasis on whether machines can beat humans. Allowing for differences in scale, we can instead seek interesting (anomalous) pairs of tasks T, T'. We define a pair as interesting when the "harder to learn" relation is reversed between human intelligence (HI) and AI: T is harder than T' for humans but easier for machines, or vice versa. While humans seem to understand problems by formulating rules, ML using neural networks does not rely on constructing rules. We discuss a novel approach in which the challenge is to "perform well under rules that have been created by human beings." We suggest that this provides a rigorous and precise pathway for understanding the difference between the two kinds of learning. Specifically, we propose a large and extensible class of learning tasks, formulated as learning under rules, with which both AI and HI can be studied rigorously and precisely. The immediate goal is to find interesting pairs of ground-truth rules. In the long term, the goal is to understand, in a generalizable way, what distinguishes interesting pairs from ordinary pairs, and to characterize what makes interesting pairs salient. This may open new ways of thinking about AI and provide unexpected insights into human learning.

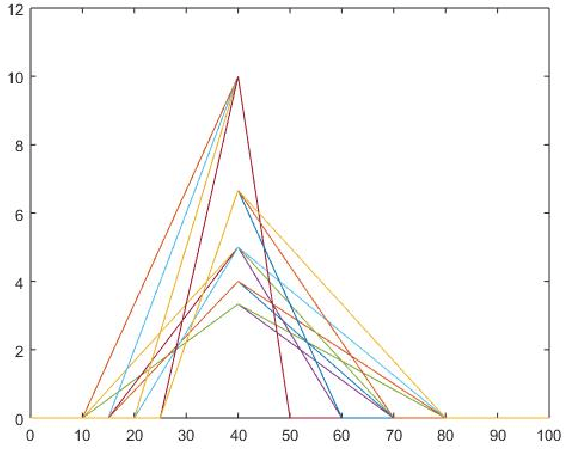

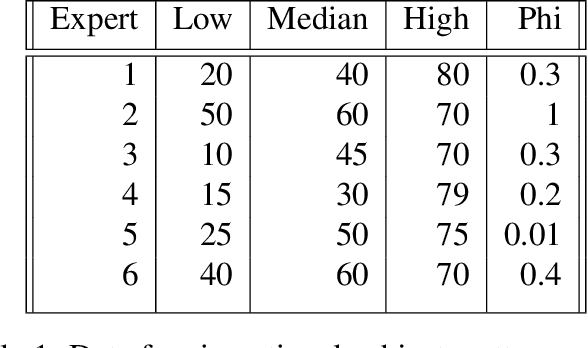

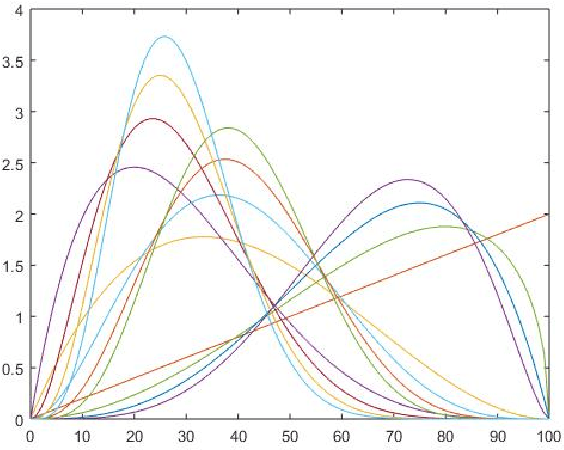

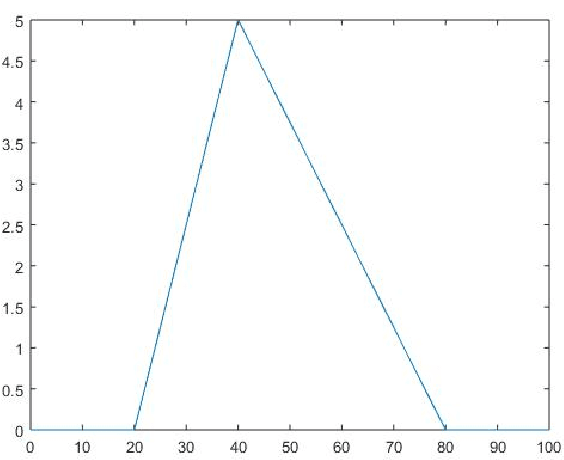

Soft Triangles for Expert Aggregation

Sep 02, 2019

Abstract: We consider the problem of eliciting expert assessments of an uncertain parameter. The context is risk control, where there are, in fact, three uncertain parameters to be estimated. Two of these are probabilities, which requires that the experts be guided in the concept of "uncertainty about uncertainty." We propose a novel formulation for expert estimates that relies on the range and the median, rather than on the variance and the mean. We discuss the elicitation process and provide precise formulas for these new distributions.
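The abstract does not state the soft-triangle formulas, so they are not reproduced here. As a hypothetical illustration of the range-and-median idea only, the sketch below takes an ordinary (hard) triangular distribution on an elicited range [lo, hi] and solves for the mode that gives it an elicited median. The function names are invented for this example, and the hard triangle is a stand-in, not the author's soft triangle. One visible limitation is that a hard triangle cannot reach medians too close to either endpoint of the range.

```python
import math


def triangular_median(lo: float, hi: float, mode: float) -> float:
    """Median of an ordinary triangular distribution on [lo, hi] with the given mode."""
    width = hi - lo
    if mode >= (lo + hi) / 2:
        # At least half the mass lies left of the mode, so the median is on the rising side.
        return lo + math.sqrt(width * (mode - lo) / 2)
    # Otherwise the median is on the falling side, right of the mode.
    return hi - math.sqrt(width * (hi - mode) / 2)


def triangle_from_range_and_median(lo: float, hi: float, median: float) -> float:
    """Hypothetical helper: return the mode of a hard triangle on [lo, hi] with this median.

    This is an illustrative stand-in, not the paper's soft-triangle formulation.
    Raises ValueError when no hard triangle on [lo, hi] has the requested median.
    """
    if not lo < hi:
        raise ValueError("need lo < hi")
    width = hi - lo
    if median >= (lo + hi) / 2:
        # Invert median = lo + sqrt(width * (mode - lo) / 2).
        mode = lo + 2 * (median - lo) ** 2 / width
    else:
        # Invert median = hi - sqrt(width * (hi - mode) / 2).
        mode = hi - 2 * (hi - median) ** 2 / width
    if not lo <= mode <= hi:
        raise ValueError("median too close to an endpoint for a hard triangle")
    return mode


if __name__ == "__main__":
    # An expert gives a probability range of [0, 1] and a median of 0.6.
    mode = triangle_from_range_and_median(0.0, 1.0, 0.6)
    print(mode)  # approximately 0.72
    print(triangular_median(0.0, 1.0, mode))  # approximately 0.6
```

Samples from the fitted triangle can then be drawn with the standard library's `random.triangular(lo, hi, mode)`. The `ValueError` branch marks where a hard triangle cannot honor an elicited range and median: on [0, 1], for example, only medians between roughly 0.293 and 0.707 are reachable.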