Paolo Maramotti

Tackling Real-World Autonomous Driving using Deep Reinforcement Learning

Jul 05, 2022

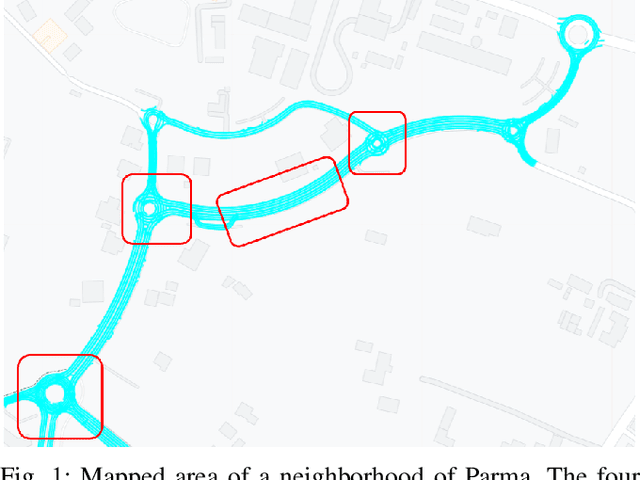

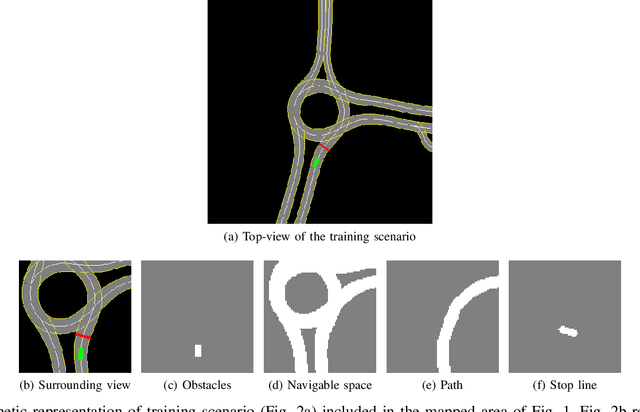

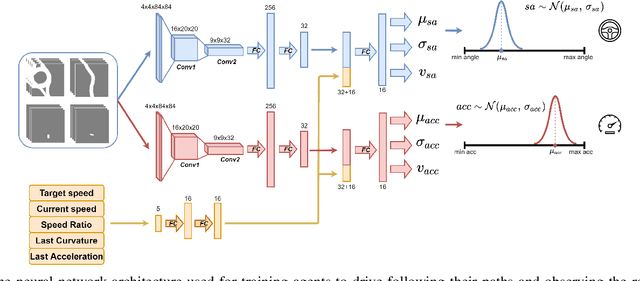

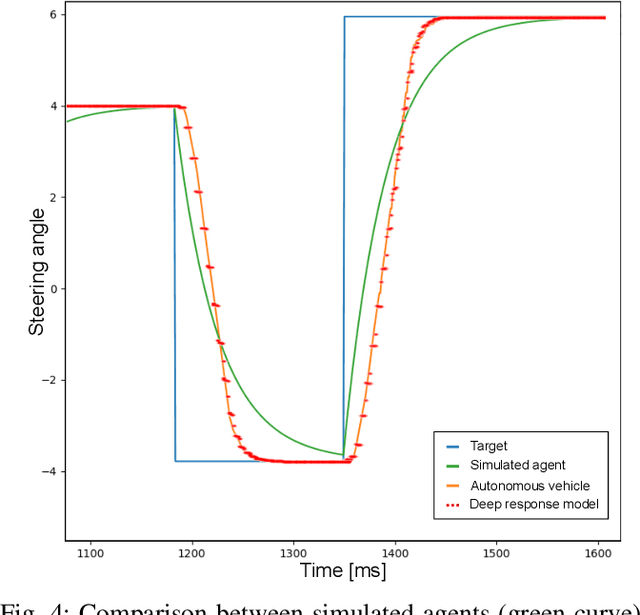

Abstract: In the typical autonomous driving stack, planning and control systems are two of the most crucial components: they use the data retrieved by sensors and processed by perception algorithms to implement safe and comfortable self-driving behavior. In particular, the planning module predicts the path the autonomous car should follow, choosing the correct high-level maneuver, while control systems perform a sequence of low-level actions, controlling steering angle, throttle and brake. In this work, we propose a model-free Deep Reinforcement Learning Planner that trains a neural network to predict both acceleration and steering angle, thus obtaining a single module able to drive the vehicle using the data processed by the localization and perception algorithms on board the self-driving car. The system, trained entirely in simulation, drives smoothly and safely in obstacle-free environments both in simulation and in a real-world urban area of the city of Parma, demonstrating good generalization capabilities, including in areas outside the training scenarios. Moreover, to deploy the system on board the real self-driving car and to reduce the gap between simulated and real-world performance, we also develop a module, represented by a tiny neural network, that reproduces the real vehicle's dynamic behavior during training in simulation.

* Oral presentation at the Intelligent Vehicles Symposium 2022
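The abstract describes a single network that maps processed localization and perception data directly to acceleration and steering angle. A minimal sketch of that interface, assuming a small fully connected actor with tanh-bounded outputs, is below. The layer sizes, the actuator limits `MAX_ACCEL` and `MAX_STEER`, and the observation layout are hypothetical, and the weights are random rather than trained. The kinematic bicycle model `bicycle_step` is a hand-written stand-in for the tiny learned vehicle-dynamics network mentioned in the abstract, not a reproduction of it.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical actuator limits (not taken from the paper).
MAX_ACCEL = 3.0  # m/s^2
MAX_STEER = 0.5  # rad


def dense(x, weights, biases):
    """Fully connected layer: y[j] = sum_i x[i] * weights[i][j] + biases[j]."""
    return [sum(xi * row[j] for xi, row in zip(x, weights)) + biases[j]
            for j in range(len(biases))]


class TinyActor:
    """Untrained two-layer MLP mapping an observation to (acceleration, steering)."""

    def __init__(self, obs_dim, hidden=16, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(obs_dim)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(hidden)]
        self.b2 = [0.0, 0.0]

    def act(self, obs):
        hidden = [math.tanh(v) for v in dense(obs, self.w1, self.b1)]
        raw_accel, raw_steer = dense(hidden, self.w2, self.b2)
        # tanh bounds each output to [-1, 1]; scaling maps it to the actuator range.
        return MAX_ACCEL * math.tanh(raw_accel), MAX_STEER * math.tanh(raw_steer)


@dataclass
class VehicleState:
    x: float = 0.0      # m
    y: float = 0.0      # m
    yaw: float = 0.0    # rad
    speed: float = 0.0  # m/s


def bicycle_step(s, accel, steer, dt=0.1, wheelbase=2.7):
    """Kinematic bicycle model: a stand-in, NOT the paper's learned dynamics network."""
    return VehicleState(
        x=s.x + s.speed * math.cos(s.yaw) * dt,
        y=s.y + s.speed * math.sin(s.yaw) * dt,
        yaw=s.yaw + s.speed / wheelbase * math.tan(steer) * dt,
        speed=max(0.0, s.speed + accel * dt),  # no reversing
    )


if __name__ == "__main__":
    actor = TinyActor(obs_dim=4)
    state = VehicleState(speed=5.0)
    for _ in range(10):
        accel, steer = actor.act([state.x, state.y, state.yaw, state.speed])
        state = bicycle_step(state, accel, steer)
    print(state)
```

Squashing with `tanh` before scaling is one common way to keep continuous actions within actuator limits; the abstract does not state how the paper parameterizes its outputs.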

End-to-End Intersection Handling using Multi-Agent Deep Reinforcement Learning

May 01, 2021

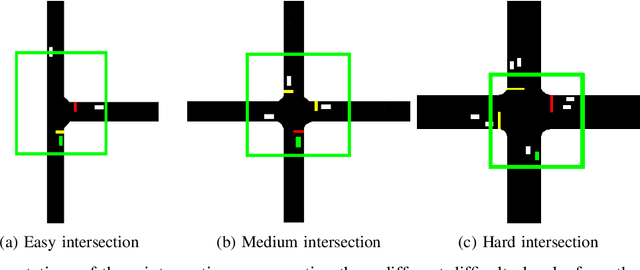

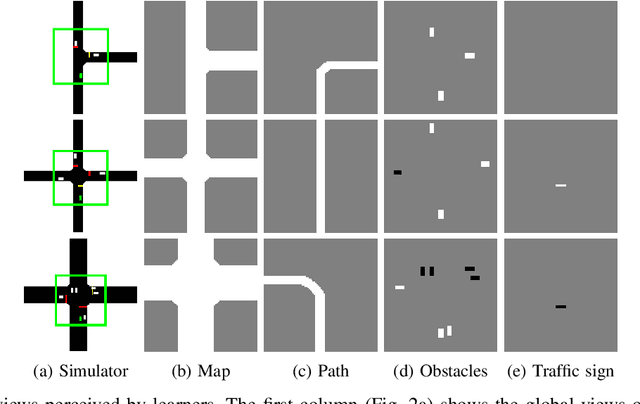

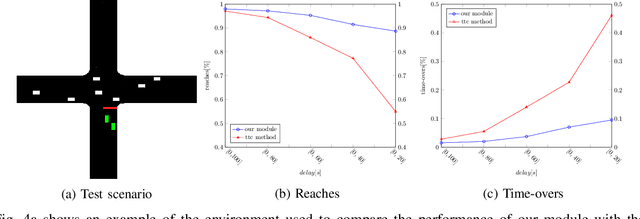

Abstract: Navigating through intersections is one of the most challenging tasks for an autonomous vehicle. However, for the majority of intersections regulated by traffic lights, the problem could be solved by a simple rule-based method in which the autonomous vehicle's behavior is closely tied to the traffic light states. In this work, we focus on implementing a system able to navigate through intersections where only traffic signs are provided. We propose a multi-agent system using a continuous, model-free Deep Reinforcement Learning algorithm to train a neural network that predicts both the acceleration and the steering angle at each time step. We demonstrate that agents learn both the basic rules needed to handle intersections, by understanding the priorities of the other learners in the environment, and how to drive safely along their paths. Moreover, a comparison between our system and a rule-based method shows that our model achieves better results, especially in dense traffic conditions. Finally, we test our system on real-world scenarios using real recorded traffic data, demonstrating that our module generalizes both to unseen environments and to different traffic conditions.
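The abstract does not specify how the many agents relate to the single trained network. One common pattern, assumed here, is parameter sharing: every vehicle queries the same policy at each time step, and all agents' transitions are pooled so one network can be updated from everyone's experience. The sketch below shows only that rollout structure with a 1-D longitudinal toy model. `Agent`, `Transition`, `rollout_step`, the `path_end` distance and the progress reward are all hypothetical, and the steering output is recorded but ignored by the toy dynamics.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    agent_id: int
    position: float  # distance travelled along the agent's own path (m)
    speed: float     # m/s
    done: bool = False


@dataclass
class Transition:
    agent_id: int
    obs: tuple
    action: tuple
    reward: float


def rollout_step(agents, policy, path_end=50.0, dt=0.1):
    """Advance every active agent one step using the SAME (shared) policy.

    Returns the transitions of all agents as one pooled list, so a single
    network could later be updated from every agent's experience.
    """
    batch = []
    for ag in agents:
        if ag.done:
            continue
        obs = (path_end - ag.position, ag.speed)  # hypothetical local observation
        accel, steer = policy(obs)                # steer unused by this 1-D toy model
        ag.speed = max(0.0, ag.speed + accel * dt)
        ag.position += ag.speed * dt
        reward = ag.speed * dt                    # hypothetical progress reward
        batch.append(Transition(ag.agent_id, obs, (accel, steer), reward))
        if ag.position >= path_end:
            ag.done = True                        # agent has left the intersection
    return batch


if __name__ == "__main__":
    agents = [Agent(i, position=10.0 * i, speed=5.0) for i in range(3)]
    experience = []
    for _ in range(20):
        experience.extend(rollout_step(agents, lambda obs: (1.0, 0.0)))
    print(len(experience), "pooled transitions")
```

Sharing one set of weights keeps the learned behavior identical across vehicles, which is one plausible reading of "train a neural network" in a multi-agent setting; the paper itself should be consulted for the actual training scheme.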
