P. Clark Di Leoni

Reconstruction of turbulent data with deep generative models for semantic inpainting from TURB-Rot database

Jun 16, 2020

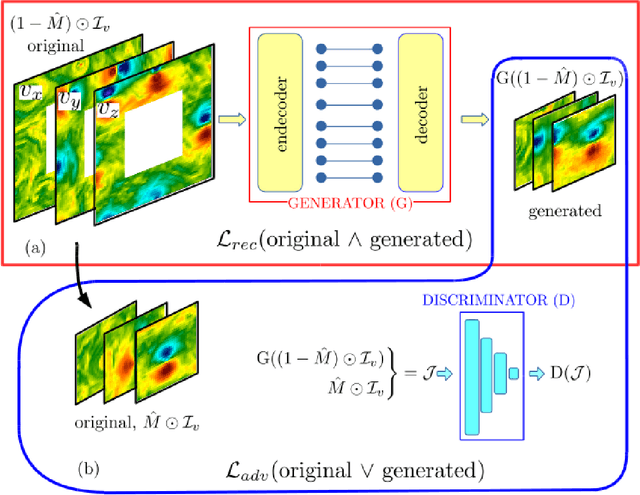

Abstract: We study the applicability of tools developed by the computer vision community for feature learning and semantic image inpainting to the reconstruction of fluid turbulence configurations. The aim is twofold. First, we explore, on a quantitative basis, the capability of Convolutional Neural Networks embedded in a Deep Generative Adversarial Model (Deep-GAN) to generate missing data in turbulence, a paradigmatic high-dimensional chaotic system. In particular, we investigate their use in reconstructing damaged two-dimensional snapshots extracted from a large database of numerical configurations of 3d turbulence in the presence of rotation, a case with multi-scale random features where both large-scale organised structures and small-scale, highly intermittent, non-Gaussian fluctuations are present. Second, following a reverse engineering approach, we aim to rank the input flow properties (features) in terms of their qualitative and quantitative importance for obtaining a better set of reconstructed fields. We present two approaches, both based on Context Encoders. The first infers the missing data by minimizing the L2 pixel-wise reconstruction loss plus a small adversarial penalisation. The second searches for the closest encoding of the corrupted flow configuration from a previously trained generator. Finally, we present a comparison with a different data assimilation tool based on Nudging, an equation-informed unbiased protocol well known in the numerical weather prediction community. The TURB-Rot database (http://smart-turb.roma2.infn.it), containing roughly 300K 2d turbulent images, is released, and details on how to download it are given.
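The first approach, an L2 pixel-wise reconstruction loss plus a small adversarial penalisation, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `context_encoder_loss`, the argument `disc_prob_real` (a stand-in for a discriminator's output), the restriction of the L2 term to the missing region, and the weight `adv_weight=1e-3` are all assumptions made for illustration.

```python
import numpy as np

def context_encoder_loss(true_field, generated_field, mask,
                         disc_prob_real, adv_weight=1e-3):
    """Weighted sum of a masked L2 loss and a small adversarial term.

    mask is 1 where data are missing (the gap to fill) and 0 elsewhere.
    disc_prob_real is a hypothetical discriminator output in (0, 1]:
    its estimated probability that the generated field is real.
    adv_weight is an assumed value, not taken from the paper.
    """
    mask = mask.astype(float)
    n_missing = mask.sum()
    # Mean squared pixel-wise error, restricted to the missing region.
    l2 = np.sum(mask * (true_field - generated_field) ** 2) / n_missing
    # Generator-side adversarial term: small when the discriminator is fooled.
    adv = -np.log(disc_prob_real)
    return (1.0 - adv_weight) * l2 + adv_weight * adv

# Toy 4x4 snapshot with a 2x2 gap in the centre.
true_field = np.zeros((4, 4))
mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1
generated_field = true_field + mask  # off by 1 everywhere inside the gap
loss = context_encoder_loss(true_field, generated_field, mask, disc_prob_real=1.0)
```

With a small `adv_weight`, the L2 term dominates and the adversarial term acts only as a mild correction, which matches the "small adversarial penalisation" described above.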
