Omid Aghazadeh

Large Scale, Large Margin Classification using Indefinite Similarity Measures

May 27, 2014

Abstract: Despite the success of the popular kernelized support vector machines, they have two major limitations: they are restricted to Positive Semi-Definite (PSD) kernels, and their training complexity scales at least quadratically with the size of the data. Many natural measures of similarity between pairs of samples are not PSD, e.g. invariant kernels and those that are implicitly or explicitly defined by latent variable models. In this paper, we investigate scalable approaches for using indefinite similarity measures in large margin frameworks. In particular, we show that a normalization of similarity to a subset of the data points constitutes a representation suitable for linear classifiers. The result is a classifier which is competitive with kernelized SVM in terms of accuracy, while having better training and test time complexities. Experimental results demonstrate that, on the CIFAR-10 dataset, the model equipped with similarity measures invariant to rigid and non-rigid deformations can be made more than 5 times sparser while being more accurate than kernelized SVM using RBF kernels.
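As a rough illustration of the idea of representing each sample by its normalized similarity to a subset of the data, here is a minimal sketch. Everything specific in it is an assumption and is not taken from the paper: the tanh similarity (chosen only because it is not PSD in general), the random choice of the subset, the per-column standardization used as the normalization, and the function names `sigmoid_similarity`, `fit_normalization`, and `similarity_features`.

```python
import numpy as np


def sigmoid_similarity(A, B, scale=0.1, offset=-1.0):
    """Illustrative indefinite similarity: tanh of a scaled inner product.

    This is an assumed stand-in; it is not the similarity used in the paper.
    """
    return np.tanh(scale * (A @ B.T) + offset)


def fit_normalization(X, landmarks, sim=sigmoid_similarity):
    """Compute per-landmark mean and standard deviation on the training set.

    Standardizing each column is an assumed form of normalization.
    """
    S = sim(X, landmarks)
    return S.mean(axis=0), S.std(axis=0) + 1e-12


def similarity_features(X, landmarks, mean, std, sim=sigmoid_similarity):
    """Map samples to normalized similarities to the landmark subset."""
    return (sim(X, landmarks) - mean) / std


# Toy usage: 200 random samples, 20 of them picked as the subset.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
landmarks = X[rng.choice(len(X), size=20, replace=False)]
mean, std = fit_normalization(X, landmarks)
F = similarity_features(X, landmarks, mean, std)  # shape (200, 20)
```

The rows of `F` are ordinary fixed-length vectors, so they can be passed to any linear classifier, for example a linear SVM. Test-time cost then depends on the size of the subset rather than on the full training set.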

Human Pose Estimation from RGB Input Using Synthetic Training Data

May 27, 2014

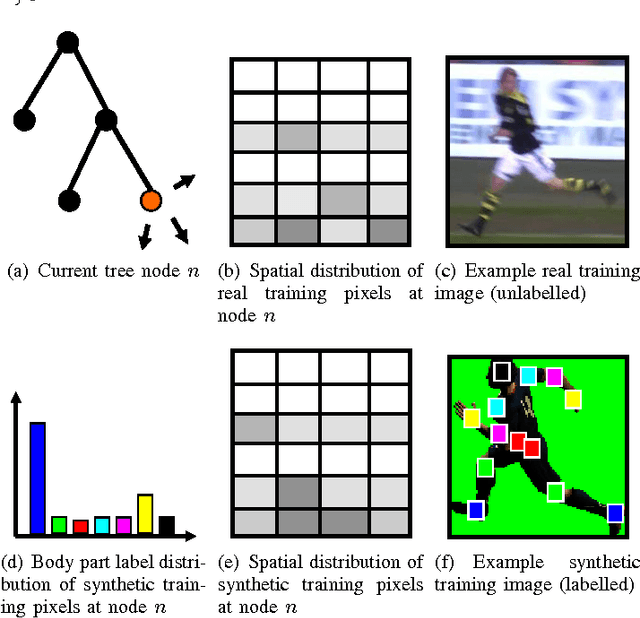

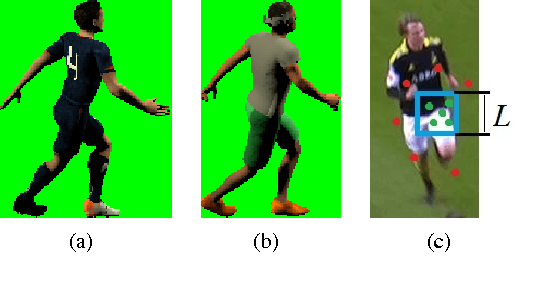

Abstract: We address the problem of estimating the pose of humans from RGB image input. More specifically, we use a random forest classifier to classify pixels into joint-based body part categories, much like the famous Kinect pose estimator [11], [12]. However, we use pure RGB input, i.e. no depth. Since the random forest requires a large number of training examples, we use synthetic training data generated with computer graphics. In addition, we assume that we have access to a large number of real images with bounding box labels, extracted for example by a pedestrian detector or a tracking system. We propose a new objective function for random forest training that uses the weakly labeled data from the target domain to encourage the learner to select features that generalize from the synthetic source domain to the real target domain. We demonstrate on a publicly available dataset [6] that the proposed objective function yields a classifier that significantly outperforms a baseline classifier trained using the standard entropy objective [10].
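For readers unfamiliar with the baseline, the following is a minimal sketch of an entropy-based split objective (information gain) of the kind commonly used when training random forest classifiers. It illustrates only the baseline, not the proposed objective, whose form is not given in the abstract. The function names `entropy` and `information_gain` are hypothetical, and labels are assumed to be non-negative integers.

```python
import numpy as np


def entropy(labels, n_classes):
    """Shannon entropy (in bits) of an integer label array."""
    counts = np.bincount(labels, minlength=n_classes)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())


def information_gain(labels, go_left, n_classes):
    """Entropy reduction obtained by splitting `labels` with a boolean mask."""
    left, right = labels[go_left], labels[~go_left]
    if len(left) == 0 or len(right) == 0:
        return 0.0  # a split that sends everything one way gains nothing
    n = len(labels)
    children = (len(left) / n) * entropy(left, n_classes) \
        + (len(right) / n) * entropy(right, n_classes)
    return entropy(labels, n_classes) - children


# Toy usage: a split that separates two classes perfectly.
labels = np.array([0, 0, 1, 1])
perfect_split = np.array([True, True, False, False])
gain = information_gain(labels, perfect_split, n_classes=2)
```

During tree growth, a candidate feature test at a node would be scored with `information_gain`, and the highest-scoring test kept.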
