Olivier Therrien

SSS3D: Fast Neural Architecture Search For Efficient Three-Dimensional Semantic Segmentation

Apr 21, 2023

Abstract: We present SSS3D, a fast multi-objective NAS framework designed to find computationally efficient 3D semantic scene segmentation networks. It uses RandLA-Net, an off-the-shelf point-based network, as a super-network to enable weight sharing, which reduces search time by 99.67% for single-stage searches. SSS3D has a complex search space composed of sampling and architectural parameters that can form 2.88 × 10^17 possible networks. To further reduce search time, SSS3D splits the complete search space and introduces a two-stage search that finds optimal subnetworks in 54% of the time required by single-stage searches.

FMAS: Fast Multi-Objective SuperNet Architecture Search for Semantic Segmentation

Mar 28, 2023

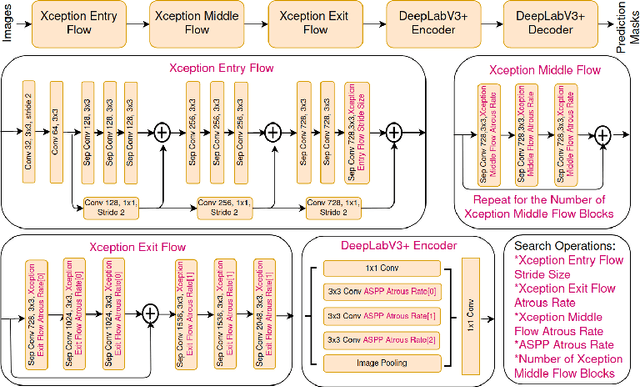

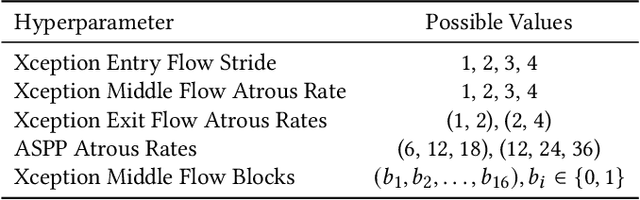

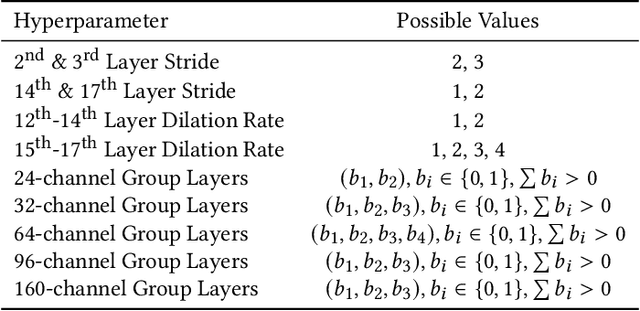

Abstract: We present FMAS, a fast multi-objective neural architecture search framework for semantic segmentation. FMAS subsamples the structure and pre-trained parameters of DeepLabV3+, without fine-tuning, dramatically reducing training time during search. To further reduce candidate evaluation time, we use a subset of the validation dataset during the search. Only the final, Pareto non-dominated candidates are ultimately fine-tuned using the complete training set. We evaluate FMAS by searching for models that trade off accuracy against computational cost on the PASCAL VOC 2012 dataset. FMAS finds competitive designs quickly, e.g., taking just 0.5 GPU days to discover a DeepLabV3+ variant that reduces FLOPs and parameters by 10% and 20% respectively, at the cost of less than a 3% increase in error. We also search on an edge device called GAP8 and use its latency as the metric. There, FMAS finds a network that is 2.2× faster at the cost of a 7.61% loss in MIoU.
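The abstract refers to "Pareto non-dominated" candidates without defining the term. As a minimal sketch, and not the FMAS implementation: when two objectives (for example error and computational cost) are both minimized, a candidate is non-dominated if no other candidate is at least as good on both objectives and strictly better on one. The candidate names and numbers below are invented purely for illustration.

```python
from typing import List, Tuple

# (name, error, cost); both objectives are minimized.
# All names and values here are hypothetical, not results from the paper.
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """Return True if `a` is no worse than `b` on both objectives
    and strictly better on at least one."""
    no_worse = a[1] <= b[1] and a[2] <= b[2]
    strictly_better = a[1] < b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


candidates = [
    ("net_a", 0.20, 10.0),
    ("net_b", 0.22, 8.0),
    ("net_c", 0.25, 9.0),  # worse than net_b on both objectives
    ("net_d", 0.30, 5.0),
]

front = pareto_front(candidates)
print([name for name, _, _ in front])
```

In a search of the kind described, only the candidates on this front would be carried forward to the expensive fine-tuning step; the quadratic filter shown here is adequate for small candidate sets.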
