Olivier Mangin

The HRC Model Set for Human-Robot Collaboration Research

Jan 17, 2018

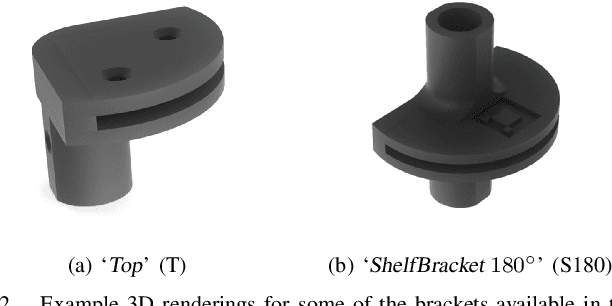

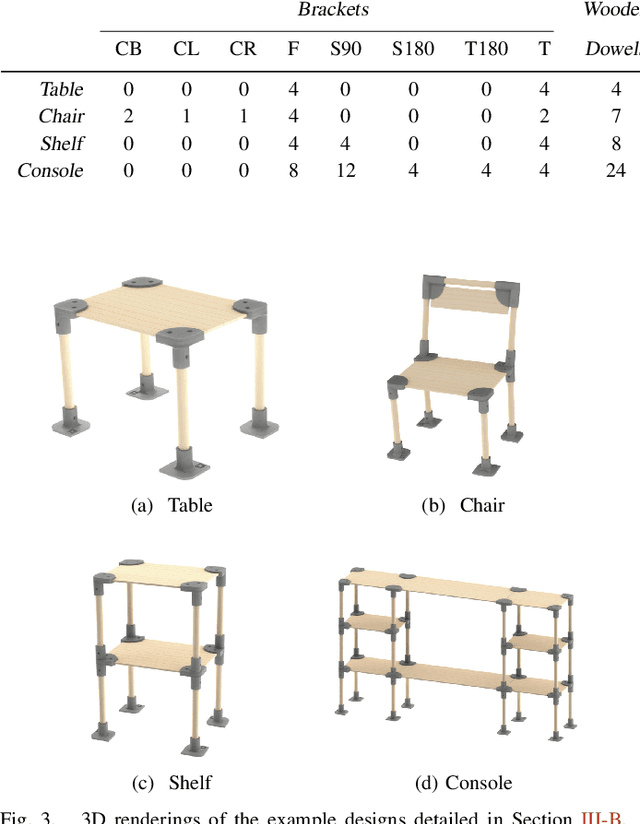

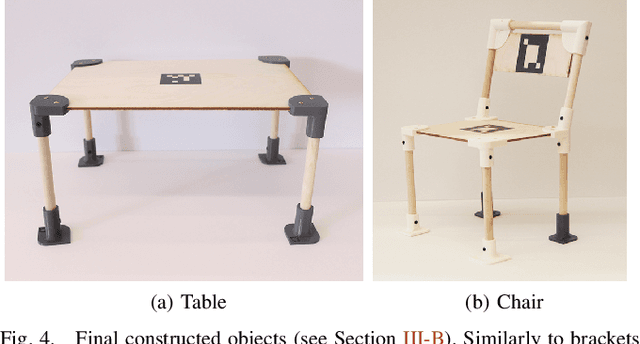

Abstract: In this paper, we present a model set for designing human-robot collaboration (HRC) experiments. It targets a common scenario in HRC, the collaborative assembly of furniture, and consists of a combination of standard components and custom designs. With this work, we aim to reduce the amount of work required to set up and reproduce HRC experiments, and we provide a unified framework to facilitate the comparison and integration of contributions to the field. The model set is designed to be modular, extendable, and easy to distribute. Importantly, it covers the majority of relevant research in HRC, and it allows tuning of a number of experimental variables that are particularly valuable to the field. Additionally, we provide a set of software libraries for perception, control and interaction, with the goal of encouraging other researchers to proactively contribute to our work.

How to be Helpful? Implementing Supportive Behaviors for Human-Robot Collaboration

Oct 30, 2017

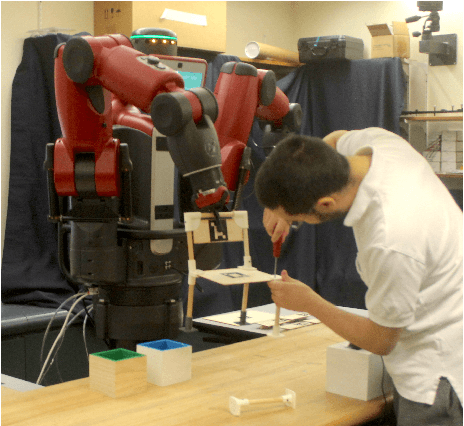

Abstract: The field of Human-Robot Collaboration (HRC) has seen a considerable amount of progress in recent years. Although genuinely collaborative platforms are far from being deployed in real-world scenarios, advances in control and perception algorithms have progressively popularized robots in manufacturing settings, where they work side by side with human peers to achieve shared tasks. Unfortunately, little progress has been made toward the development of systems that are proactive in their collaboration and autonomously take care of some of the chores that compose most collaboration tasks. In this work, we present a collaborative system capable of assisting the human partner with a variety of supportive behaviors in spite of its limited perceptual and manipulation capabilities and incomplete model of the task. Our framework leverages information from a high-level, hierarchical model of the task. The model, which is shared between the human and the robot, enables transparent synchronization between the peers and understanding of each other's plan. More precisely, we derive a partially observable Markov model from the high-level task representation. We then use an online solver to compute a robot policy that is robust to unexpected observations, such as inaccuracies in perception and failures in object manipulation, and that discovers hidden user preferences. We demonstrate that the system is capable of robustly providing support to the human in a furniture construction task.
