Ola Huse Ramstad

Local learning through propagation delays in spiking neural networks

Oct 27, 2022

Abstract: We propose a novel local learning rule for spiking neural networks in which spike propagation times undergo activity-dependent plasticity. Our plasticity rule aligns pre-synaptic spike times to produce a stronger and more rapid response. Inputs are encoded by latency coding and outputs decoded by matching similar patterns of output spiking activity. We demonstrate the use of this method in a three-layer feedforward network with inputs from a database of handwritten digits. Networks consistently improve their classification accuracy after training, and training with this method also allowed networks to generalize to an input class unseen during training. Our proposed method takes advantage of the ability of spiking neurons to support many different time-locked sequences of spikes, each of which can be activated by different input activations. The proof-of-concept shown here demonstrates the great potential for local delay learning to expand the memory capacity and generalizability of spiking neural networks.
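To make the two ideas in this abstract concrete, here is a minimal Python sketch of latency coding and of a local delay update that pulls pre-synaptic spike arrival times together. It is an illustration only, not the rule from the paper: the abstract does not give the update equation, so the mean-arrival target, the function names (`latency_encode`, `update_delays`), and the parameters (`t_max`, `rate`, `min_delay`) are all assumptions.

```python
def latency_encode(intensities, t_max=10.0):
    """Latency coding: map intensities in [0, 1] to spike times.

    Stronger inputs fire earlier. t_max is an assumed encoding window.
    """
    return [t_max * (1.0 - x) for x in intensities]


def arrival_times(spike_times, delays):
    """Time at which each pre-synaptic spike reaches the post-synaptic neuron."""
    return [t + d for t, d in zip(spike_times, delays)]


def update_delays(spike_times, delays, rate=0.5, min_delay=0.0):
    """Assumed local rule: move each delay so its arrival shifts toward
    the mean arrival time of all inputs to this neuron.

    Delays are clipped at min_delay so they never become negative.
    """
    arrivals = arrival_times(spike_times, delays)
    target = sum(arrivals) / len(arrivals)
    return [max(min_delay, d + rate * (target - a))
            for d, a in zip(delays, arrivals)]


def spread(values):
    """Width of the window containing all values."""
    return max(values) - min(values)


if __name__ == "__main__":
    # Three hypothetical pixel intensities feeding one neuron.
    times = latency_encode([0.9, 0.5, 0.2])
    delays = [5.0, 5.0, 5.0]
    print("arrival spread before:", spread(arrival_times(times, delays)))
    for _ in range(20):
        delays = update_delays(times, delays)
    print("arrival spread after: ", spread(arrival_times(times, delays)))
```

In this toy setting the arrival spread shrinks with each update, so the spikes reach the neuron closer together in time, which is the kind of alignment the abstract credits with producing a stronger and more rapid response.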

Evolving spiking neuron cellular automata and networks to emulate in vitro neuronal activity

Oct 15, 2021

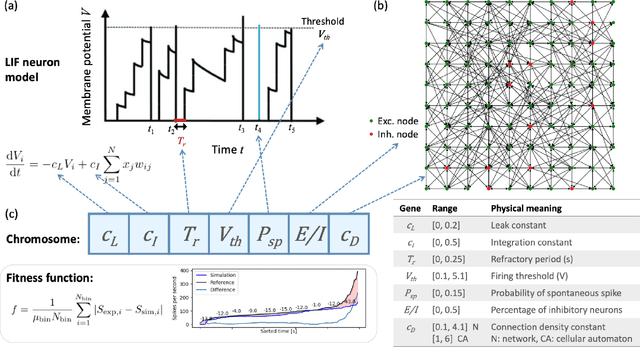

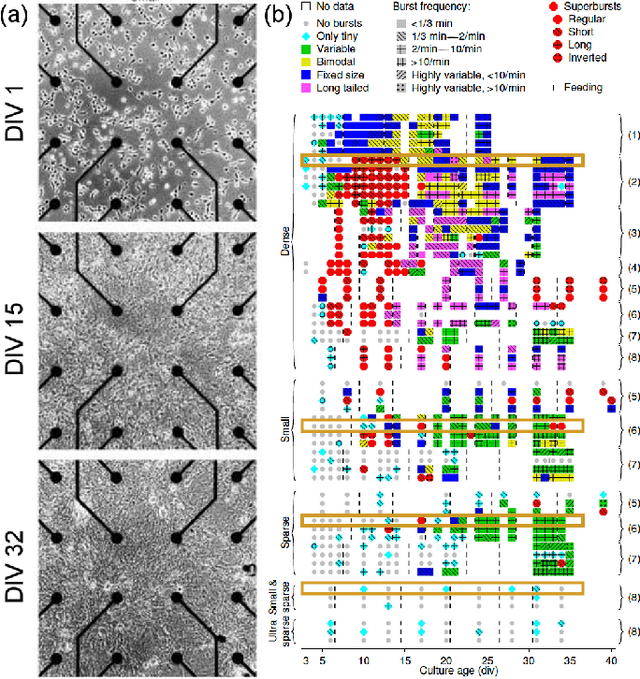

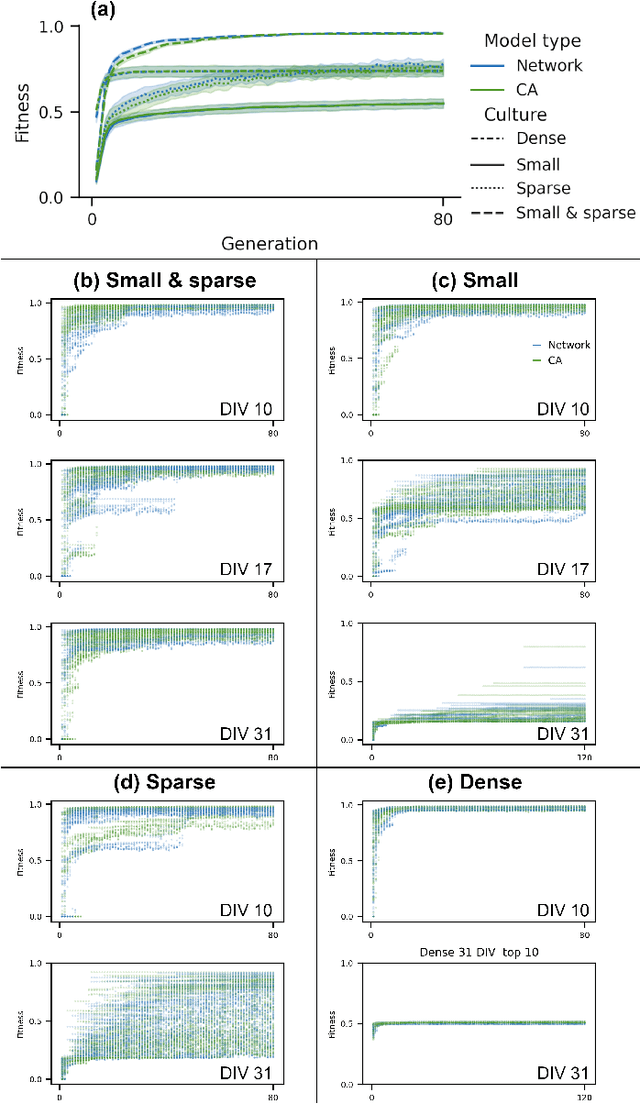

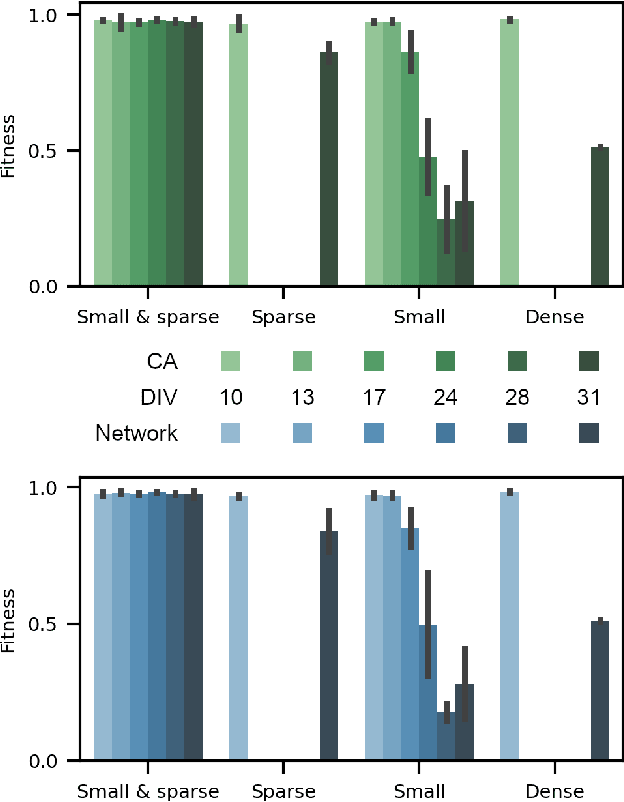

Abstract: Neuro-inspired models and systems have great potential for applications in unconventional computing. Often, the mechanisms of biological neurons are modeled or mimicked in simulated or physical systems in an attempt to harness some of the computational power of the brain. However, the biological mechanisms at play in neural systems are complicated and challenging to capture and engineer; thus, it can be simpler to turn to a data-driven approach to transfer features of neural behavior to artificial substrates. In the present study, we used an evolutionary algorithm (EA) to produce spiking neural systems that emulate the patterns of behavior of biological neurons in vitro. The aim of this approach was to develop a method of producing models capable of exhibiting complex behavior that may be suitable for use as computational substrates. Our models were able to produce a level of network-wide synchrony and showed a range of behaviors depending on the target data used for their evolution, which was from a range of neuronal culture densities and maturities. The genomes of the top-performing models indicate the excitability and density of connections in the model play an important role in determining the complexity of the produced activity.
