Ohyun Jo

LeMoF: Level-guided Multimodal Fusion for Heterogeneous Clinical Data

Jan 15, 2026

Abstract:Multimodal clinical prediction is widely used to integrate heterogeneous data such as Electronic Health Records (EHR) and biosignals. However, existing methods tend to rely on static modality integration schemes and simple fusion strategies. As a result, they fail to fully exploit modality-specific representations. In this paper, we propose Level-guided Multimodal Fusion (LeMoF), a novel framework that selectively integrates level-guided representations within each modality. Each level refers to a representation extracted from a different layer of the encoder. LeMoF explicitly separates the learning of global modality-level predictions from the learning of level-specific discriminative representations. This design enables LeMoF to balance prediction stability and discriminative capability, even in heterogeneous clinical environments. Experiments on length-of-stay prediction using Intensive Care Unit (ICU) data demonstrate that LeMoF consistently outperforms existing state-of-the-art multimodal fusion techniques across various encoder configurations. We also confirm that level-wise integration is a key factor in achieving robust predictive performance across various clinical conditions.
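
As a rough illustration of level-wise integration, the sketch below weights representations taken from different encoder layers ("levels") of each modality with softmax-normalized scores, then concatenates the per-modality results. It is a minimal sketch under stated assumptions, not LeMoF itself: the function names, the use of one scalar weight per level, and concatenation across modalities are all hypothetical, and the separation of global modality-level predictions from level-specific representations described in the abstract is not modeled.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scalar scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_levels(levels: List[Vector], scores: List[float]) -> Vector:
    """Hypothetical level fusion: weighted sum of same-sized per-level vectors."""
    if len(levels) != len(scores):
        raise ValueError("need one score per level")
    weights = softmax(scores)
    dim = len(levels[0])
    return [sum(w * level[i] for w, level in zip(weights, levels)) for i in range(dim)]


def fuse_modalities(
    per_modality: Dict[str, List[Vector]],
    level_scores: Dict[str, List[float]],
) -> Vector:
    """Fuse levels within each modality, then concatenate modalities in name order."""
    fused: Vector = []
    for name in sorted(per_modality):
        fused.extend(fuse_levels(per_modality[name], level_scores[name]))
    return fused


# Hypothetical toy inputs: two encoder levels for EHR, one level for a biosignal.
features = {
    "ehr": [[1.0, 0.0], [0.0, 1.0]],
    "biosignal": [[2.0, 2.0]],
}
scores = {"ehr": [0.0, 0.0], "biosignal": [5.0]}
print(fuse_modalities(features, scores))  # [2.0, 2.0, 0.5, 0.5]
```

In a trained model the level scores would typically be learned parameters or the output of a small gating network rather than fixed constants; they are hard-coded here only to keep the example deterministic.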

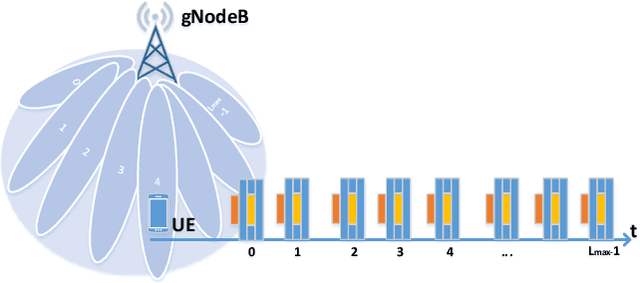

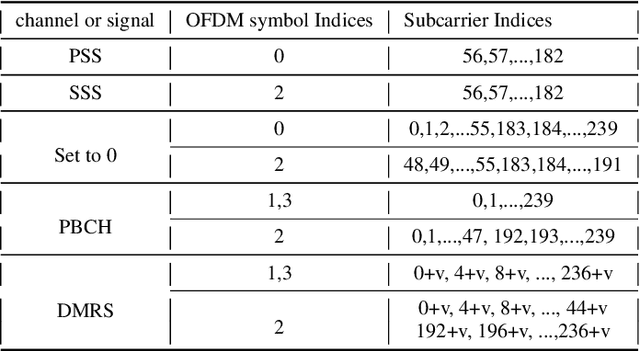

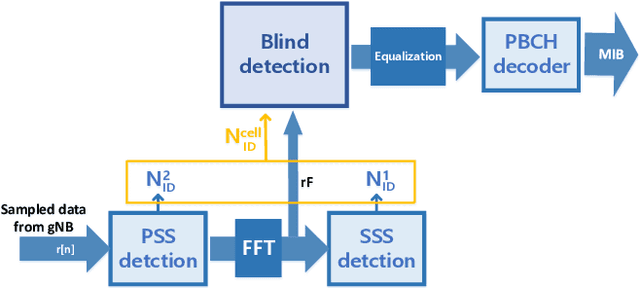

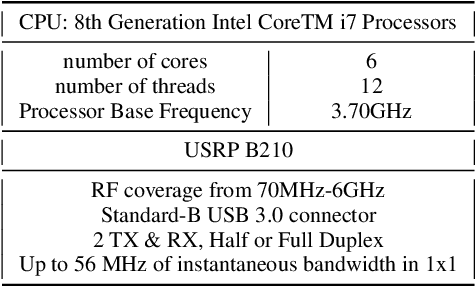

Exploitation of Channel-Learning for Enhancing 5G Blind Beam Index Detection

Dec 07, 2020

Abstract:The proliferation of 5G devices and services has driven demand for wide-scale enhancements in data rate, reliability, and compatibility to sustain the ever-increasing growth of the telecommunication industry. In this regard, this work investigates how machine learning can improve the performance of 5G cell and beam index search in practice. Cell search is an essential function that allows a User Equipment (UE) to initially associate with a base station, and it is also important for maintaining the wireless connection afterward. Unlike previous-generation cellular systems, a 5G UE faces the additional challenge of detecting suitable beams as well as cell identities during the cell search procedure. Herein, we propose and implement new channel-learning schemes to enhance the performance of 5G beam index detection. The salient point lies in the use of machine learning models and softwarization for practical, system-level implementation. We develop the proposed channel-learning scheme, including its algorithmic procedures and a supporting system structure for efficient beam index detection. We also implement a real-time 5G testbed based on an off-the-shelf Software Defined Radio (SDR) platform and conduct extensive experiments with commercial 5G base stations. The experimental results indicate that the proposed channel-learning schemes outperform the conventional correlation-based scheme in real 5G channel environments.
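
The abstract benchmarks against a conventional correlation-based scheme. A minimal sketch of such a baseline, assuming each beam index is associated with a known reference sequence, correlates the received samples with every candidate and picks the one with the largest correlation magnitude. The random QPSK sequences, the toy channel, and all function names are hypothetical placeholders rather than the actual 5G NR signals, and the proposed channel-learning scheme itself is not shown.

```python
import cmath
import math
import random
from typing import List

Sequence = List[complex]


def make_candidates(num_beams: int, length: int, seed: int = 0) -> List[Sequence]:
    """Hypothetical per-beam QPSK reference sequences (stand-ins for real 5G NR sequences)."""
    rng = random.Random(seed)
    qpsk = [complex(re, im) / math.sqrt(2) for re in (1, -1) for im in (1, -1)]
    return [[rng.choice(qpsk) for _ in range(length)] for _ in range(num_beams)]


def correlation_metric(rx: Sequence, ref: Sequence) -> float:
    """Magnitude of the inner product <rx, ref>; insensitive to a common phase rotation."""
    return abs(sum(r * s.conjugate() for r, s in zip(rx, ref)))


def detect_beam_index(rx: Sequence, candidates: List[Sequence]) -> int:
    """Return the index of the candidate sequence with the largest correlation."""
    metrics = [correlation_metric(rx, ref) for ref in candidates]
    return max(range(len(metrics)), key=metrics.__getitem__)


def received(ref: Sequence, phase: float, noise_std: float, rng: random.Random) -> Sequence:
    """Toy channel: common phase rotation plus complex Gaussian noise."""
    rot = cmath.exp(1j * phase)
    return [rot * s + complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std)) for s in ref]


candidates = make_candidates(num_beams=8, length=64)
rng = random.Random(1)
rx = received(candidates[5], phase=0.7, noise_std=0.3, rng=rng)
print(detect_beam_index(rx, candidates))  # 5
```

Taking the magnitude of the correlation makes the decision independent of an unknown common phase; frequency offsets, multipath, and timing errors are ignored in this toy channel.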

Q-NAV: NAV Setting Method based on Reinforcement Learning in Underwater Wireless Networks

May 21, 2020

Abstract:Demand for underwater communications is increasing sharply for applications such as underwater resource exploration, marine expeditions, and environmental research, yet the characteristics of the underwater environment pose many problems for wireless communication. In underwater wireless networks in particular, the distances between nodes inevitably cause long delays and spatial inequality. To address these problems, this paper proposes a new solution based on ALOHA-Q. The proposed method starts from a random NAV value, the environment returns a reward according to whether each communication succeeds or fails, and the NAV value is then set based on that reward. This model minimizes energy and computing resource usage in underwater wireless networks, and it learns and sets NAV values through reinforcement learning. Simulation results show that NAV values can adapt to the environment and that the best value for the circumstances can be selected, so that unnecessary delay and spatial inequality are resolved. In the simulations, the NAV time decreased by 17.5% compared with the original NAV.
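
The learning loop described above (choose a NAV value, receive a reward for success or failure, update the choice) can be sketched as stateless Q-learning with epsilon-greedy exploration, in the spirit of ALOHA-Q. This is an assumption-laden toy, not the paper's algorithm: the candidate NAV values, the +1/-1 rewards, the learning rate, the exploration rate, and the per-NAV success probabilities of the simulated channel are all hypothetical.

```python
import random
from typing import Callable, Dict, List


def learn_nav(
    candidates: List[float],
    transmit: Callable[[float], bool],
    episodes: int = 2000,
    alpha: float = 0.1,
    epsilon: float = 0.1,
    seed: int = 0,
) -> Dict[float, float]:
    """Stateless Q-learning over NAV values; reward +1 on success, -1 on failure."""
    rng = random.Random(seed)
    q = {nav: 0.0 for nav in candidates}
    for _ in range(episodes):
        if rng.random() < epsilon:
            nav = rng.choice(candidates)  # explore a random NAV value
        else:
            nav = max(candidates, key=q.__getitem__)  # exploit the current best estimate
        reward = 1.0 if transmit(nav) else -1.0
        q[nav] += alpha * (reward - q[nav])  # move the estimate toward the observed reward
    return q


# Hypothetical channel: success probability for each candidate NAV value (arbitrary time units).
SUCCESS_PROB = {0.5: 0.1, 1.0: 0.95, 1.5: 0.2, 2.0: 0.1}
channel_rng = random.Random(42)


def simulated_transmit(nav: float) -> bool:
    return channel_rng.random() < SUCCESS_PROB[nav]


q_table = learn_nav(list(SUCCESS_PROB), simulated_transmit)
best_nav = max(q_table, key=q_table.__getitem__)
print(best_nav)  # 1.0 under these toy probabilities
```

The learner keeps only one value per candidate and performs a single update per transmission, which keeps memory and computation small; that property fits the abstract's emphasis on limited energy and computing resources, though the paper's actual update rule may differ.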

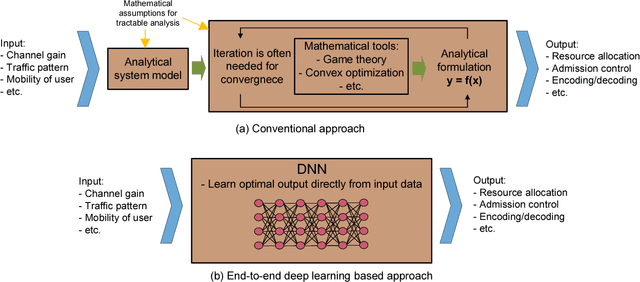

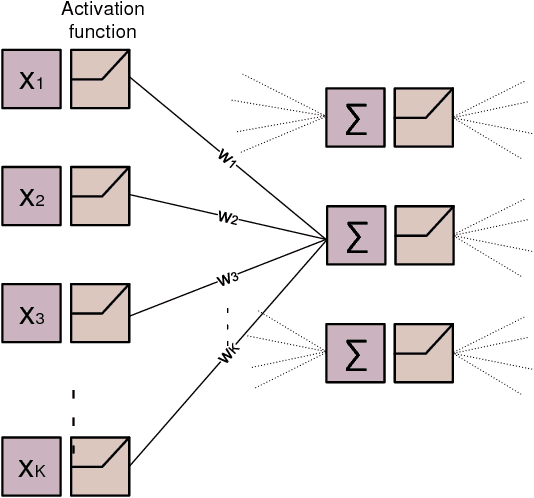

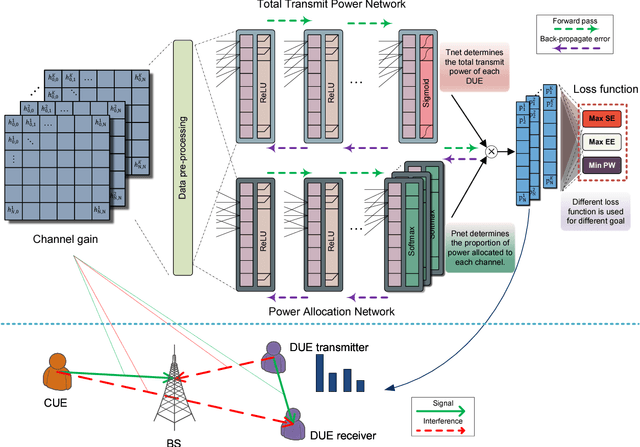

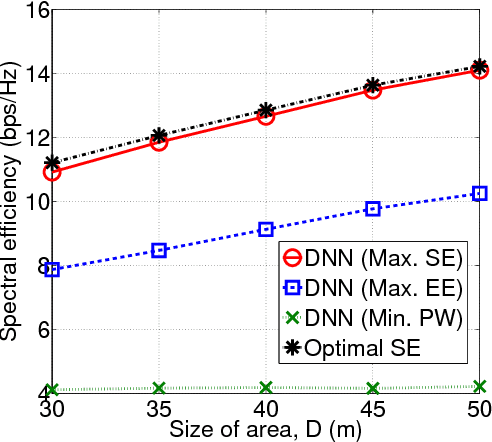

Application of End-to-End Deep Learning in Wireless Communications Systems

Aug 07, 2018

Abstract:Deep learning is a potential paradigm changer for the design of wireless communications systems (WCS), shifting it from conventional handcrafted schemes based on sophisticated mathematical models with assumptions to autonomous schemes based on end-to-end deep learning using large amounts of data. In this article, we present the basic concept of deep learning and its application to WCS by investigating a resource allocation (RA) scheme based on a deep neural network (DNN), in which multiple goals with various constraints can be satisfied through end-to-end deep learning. In particular, the optimality and feasibility of the DNN-based RA are verified through simulation. We then discuss the technical challenges of applying deep learning in WCS.
