Norbert Broeker

IMS, Stuttgart University

The Use of Instrumentation in Grammar Engineering

Nov 30, 2000

Abstract: This paper explores the usefulness of a technique from software engineering, code instrumentation, for the development of large-scale natural language grammars. Information about the usage of grammar rules in test and corpus sentences is used to improve grammar and testsuite, as well as to adapt a grammar to a specific genre. Results show that less than half of a large-coverage grammar for German is actually tested by two large testsuites, and that 10-30% of testing time is redundant. The methodology applied can be seen as a re-use of grammar writing knowledge for testsuite compilation.

* 7 pages, LaTeX2e, correction includes bibliography
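
The core measurement behind the coverage figure is simple to state: an instrumented parser records which rules fire for each sentence, and rules that never fire are untested. The sketch that follows assumes a hypothetical toy grammar whose rules are identified only by name, and assumes the instrumented parser returns a list of rule names per sentence; it is not the paper's implementation.

```python
from collections import Counter

# Hypothetical toy grammar: only rule names matter for instrumentation.
GRAMMAR_RULES = {"S->NP VP", "NP->Det N", "VP->V NP", "VP->V", "NP->NP PP", "PP->P NP"}

def instrumented_count(parses):
    """Count how often each rule fires across the parses of a set of sentences.

    `parses` maps a sentence to the list of rule names used in its analysis,
    i.e. the trace an instrumented parser is assumed to emit.
    """
    counts = Counter()
    for rules in parses.values():
        counts.update(rules)
    return counts

def coverage_report(grammar, counts):
    """Return the fraction of rules exercised and the sorted untested rules."""
    used = {r for r in grammar if counts[r] > 0}
    untested = grammar - used
    return len(used) / len(grammar), sorted(untested)

testsuite = {
    "the dog barks": ["S->NP VP", "NP->Det N", "VP->V"],
    "the dog sees a cat": ["S->NP VP", "NP->Det N", "VP->V NP", "NP->Det N"],
}
counts = instrumented_count(testsuite)
ratio, untested = coverage_report(GRAMMAR_RULES, counts)
print(f"{ratio:.0%} of rules tested; untested: {untested}")
```

Running the same counter over a corpus instead of a testsuite yields rule frequencies for a genre, which is the kind of information the abstract refers to for genre adaptation.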

Improving Testsuites via Instrumentation

May 10, 2000

Abstract: This paper explores the usefulness of a technique from software engineering, namely code instrumentation, for the development of large-scale natural language grammars. Information about the usage of grammar rules in test sentences is used to detect untested rules, redundant test sentences, and likely causes of overgeneration. Results show that less than half of a large-coverage grammar for German is actually tested by two large testsuites, and that 10-30% of testing time is redundant. The methodology applied can be seen as a re-use of grammar writing knowledge for testsuite compilation.

* 6 pages, LaTeX2e
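
Redundant test sentences can be detected from the same rule-usage traces. The sketch that follows uses one simple criterion, assumed here rather than taken from the paper: a sentence is redundant if the set of rules it uses is identical to that of an earlier sentence, so it exercises nothing new.

```python
def redundant_sentences(parses):
    """Return (sentence, earlier twin) pairs with identical rule-usage sets.

    Assumed criterion: such a sentence exercises no rule that the earlier
    test item has not already exercised, in the same combination.
    """
    seen = {}
    redundant = []
    for sentence, rules in parses.items():
        profile = frozenset(rules)  # multiplicity is ignored on purpose
        if profile in seen:
            redundant.append((sentence, seen[profile]))
        else:
            seen[profile] = sentence
    return redundant

testsuite = {
    "the dog barks": ["S->NP VP", "NP->Det N", "VP->V"],
    "a cat sleeps": ["S->NP VP", "NP->Det N", "VP->V"],
    "the dog sees a cat": ["S->NP VP", "NP->Det N", "VP->V NP", "NP->Det N"],
}
for item, twin in redundant_sentences(testsuite):
    print(f"{item!r} duplicates the rule profile of {twin!r}")
```

A stricter or looser notion of redundancy (for example, counting rule multiplicities or rule sequences) would only change how `profile` is computed.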

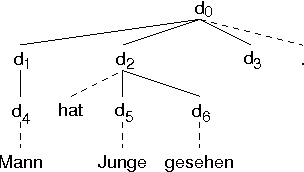

Separating Surface Order and Syntactic Relations in a Dependency Grammar

Aug 25, 1998

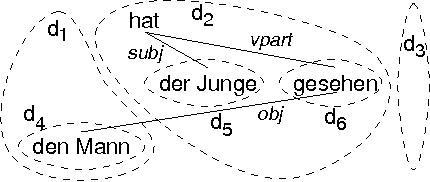

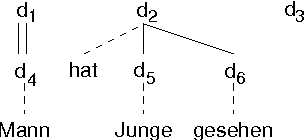

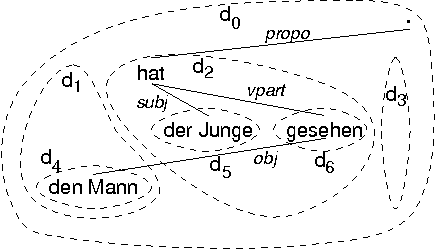

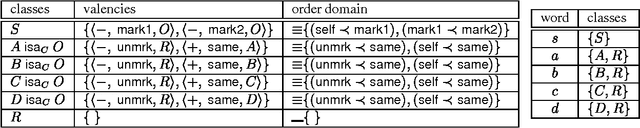

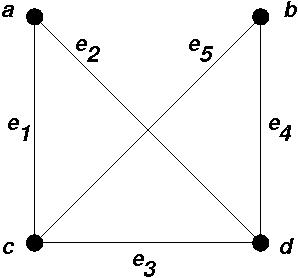

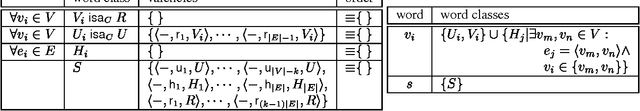

Abstract: This paper proposes decoupling the dependency tree from word order, such that surface ordering is not determined by traversing the dependency tree. We develop the notion of a word order domain structure, which is linked to, but structurally dissimilar from, the syntactic dependency tree. The proposal results in a lexicalized, declarative, and formally precise description of word order, features that previous proposals for dependency grammars lack. Contrary to other lexicalized approaches to word order, our proposal does not require lexical ambiguities for ordering alternatives.

* 7 pages, LaTeX2e
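
To make the decoupling concrete, the sketch that follows keeps a dependency tree and a separate domain structure and reads the surface order off the domains only. The German example "Den Mann hat sie gesehen" fronts the object of "gesehen" into the domain of "hat" without changing the tree. The field names, the `SELF_FIELD` table and the linking constraint (a word may only enter a domain owned by one of its transitive heads) are assumptions for illustration, not the paper's formal definitions.

```python
# Dependency tree: dependent -> (head, relation). "hat" is the root.
HEADS = {
    "Den": ("Mann", "det"),
    "Mann": ("gesehen", "obj"),
    "sie": ("hat", "subj"),
    "gesehen": ("hat", "vcomp"),
}

# Order domains: owner -> ordered fields (hypothetical, loosely topological).
DOMAINS = {"hat": ["vf", "lk", "mf", "rk"], "Mann": ["spec", "head"]}
# Field in which a domain owner itself is placed inside its own domain.
SELF_FIELD = {"hat": "lk", "Mann": "head"}
# Placement of each non-root word as (domain owner, field). "Mann" goes into the
# domain of "hat", not of its syntactic head "gesehen".
PLACEMENT = {
    "Mann": ("hat", "vf"),
    "sie": ("hat", "mf"),
    "gesehen": ("hat", "rk"),
    "Den": ("Mann", "spec"),
}

def ancestors(word):
    """All transitive syntactic heads of `word` in the dependency tree."""
    result = []
    while word in HEADS:
        word = HEADS[word][0]
        result.append(word)
    return result

def check_placement():
    # Assumed linking constraint between the two structures.
    for word, (owner, field) in PLACEMENT.items():
        assert owner in ancestors(word), f"{word} cannot enter domain of {owner}"
        assert field in DOMAINS[owner], f"unknown field {field} in {owner}"

def linearize(owner):
    """Read off surface order from the domain structure, not the tree."""
    out = []
    for field in DOMAINS[owner]:
        if SELF_FIELD[owner] == field:
            out.append(owner)
        for word, (dom, fld) in PLACEMENT.items():
            if (dom, fld) == (owner, field):
                # A word owning a domain contributes that whole domain.
                out.extend(linearize(word) if word in DOMAINS else [word])
    return out

check_placement()
print(" ".join(linearize("hat")))
```

The tree still says that "Mann" depends on "gesehen"; only the placement table records that it surfaces in the first field of the finite verb's domain.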

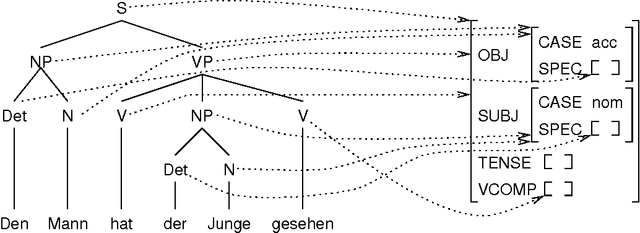

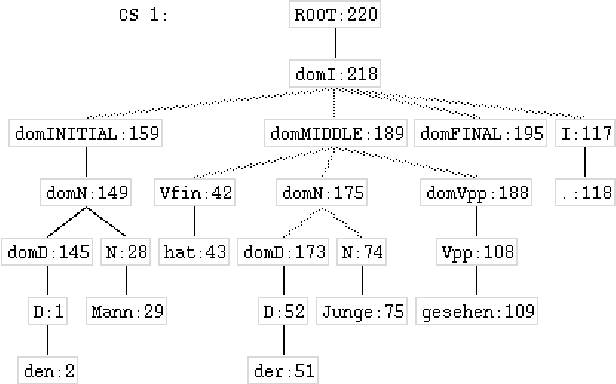

How to define a context-free backbone for DGs: Implementing a DG in the LFG formalism

Aug 21, 1998

Abstract: This paper presents a multidimensional Dependency Grammar (DG), which decouples the dependency tree from word order, such that surface ordering is not determined by traversing the dependency tree. We develop the notion of a word order domain structure, which is linked to, but structurally dissimilar from, the syntactic dependency tree. We then discuss the implementation of such a DG using constructs from a unification-based phrase-structure approach, namely Lexical-Functional Grammar (LFG). Particular attention is given to the analysis of discontinuities in DG in terms of LFG's functional uncertainty.

* 10 pages, LaTeX2e
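
Functional uncertainty lets an equation such as (TOPIC) = (XCOMP* OBJ) refer to any function reachable along a path described by a regular expression. The sketch that follows resolves such a path over a hypothetical, deliberately simplified f-structure represented as nested dicts; using Python's `re` over space-joined attribute names is an assumption for illustration, not how an LFG system implements it.

```python
import re

# Hypothetical f-structure for "Den Mann hat sie gesehen"; attribute names
# follow LFG conventions, the analysis itself is only illustrative.
FS = {
    "PRED": "haben",
    "SUBJ": {"PRED": "sie"},
    "XCOMP": {"PRED": "sehen", "OBJ": {"PRED": "Mann"}},
}

def paths(fs, prefix=()):
    """Yield (path, value) for every attribute path in a nested f-structure."""
    for attr, value in fs.items():
        path = prefix + (attr,)
        yield path, value
        if isinstance(value, dict):
            yield from paths(value, path)

def resolve(fs, pattern):
    """Return all (path, value) pairs whose path matches `pattern`.

    `pattern` is a regex over space-separated attribute names, standing in
    for a functional-uncertainty path such as (XCOMP* OBJ).
    """
    rx = re.compile(pattern)
    return [(p, v) for p, v in paths(fs) if rx.fullmatch(" ".join(p))]

# Which function can the fronted phrase fill under (XCOMP* OBJ)?
print(resolve(FS, r"(XCOMP )*OBJ"))
```

Because the path is a regular expression, the same equation covers an object at any depth of XCOMP embedding, which is what makes it a candidate encoding for DG discontinuities.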

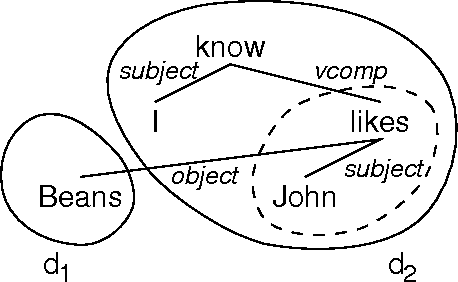

A Projection Architecture for Dependency Grammar and How it Compares to LFG

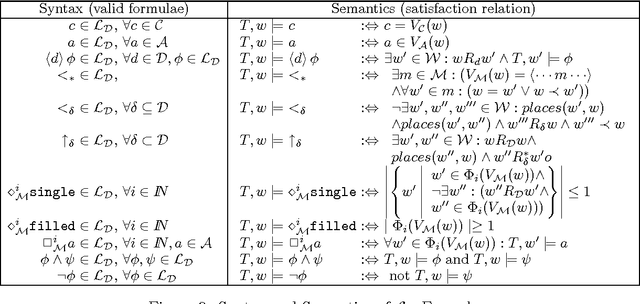

Jul 24, 1998

Abstract: This paper explores commonalities and differences between DACHS, a variant of Dependency Grammar, and Lexical-Functional Grammar. DACHS is based on traditional linguistic insights but uses modern mathematical tools, aiming to integrate different knowledge systems (from syntax and semantics) via their coupling to an abstract syntactic primitive, the dependency relation. These knowledge systems correspond rather closely to projections in LFG. We will investigate commonalities arising from the use of the projection approach in both theories, and point out differences due to their incompatible linguistic premises. The main difference from LFG lies in the motivation and status of the dimensions, and in the information coded there. We will argue that LFG conflates different information in one projection, which prevents it from achieving a good separation of alternatives and calls the motivation of the projection into question.

Message-Passing Protocols for Real-World Parsing -- An Object-Oriented Model and its Preliminary Evaluation

Sep 23, 1997

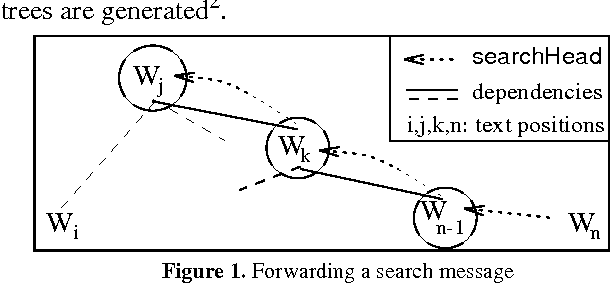

Abstract: We argue for a performance-based design of natural language grammars and their associated parsers in order to meet the constraints imposed by real-world NLP. Our approach incorporates declarative and procedural knowledge about language and language use within an object-oriented specification framework. We discuss several message-passing protocols for parsing and, based on a preliminary empirical evaluation, give reasons for sacrificing completeness of the parse in favor of efficiency.

* 12 pages, uses epsfig.sty
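
The trade-off between completeness and efficiency can be illustrated with a toy head-search protocol: each incoming word sends a searchHead message to the words on its left, nearest first. A complete protocol asks every candidate and keeps ambiguities; a greedy one stops at the first acceptance and sends fewer messages. The lexicon, slot-filling rule and message counting in the sketch that follows are hypothetical simplifications, not the protocols evaluated in the paper.

```python
from dataclasses import dataclass

@dataclass
class Word:
    form: str
    cat: str
    slots: set  # categories of dependents this word can still take (toy valency)

def sentence():
    # Hypothetical toy input; every dependent here follows its head.
    return [Word("saw", "V", {"N", "P"}), Word("men", "N", {"P"}),
            Word("with", "P", {"N"}), Word("telescopes", "N", set())]

def parse(words, complete):
    """Return candidate heads per word and the total number of messages sent."""
    analysis, messages = {}, 0
    for i in range(1, len(words)):
        heads = []
        for j in range(i - 1, -1, -1):
            messages += 1                       # one searchHead message to word j
            if words[i].cat in words[j].slots:  # word j accepts word i
                heads.append(j)
                if not complete:
                    break                       # greedy: stop at first acceptance
        if len(heads) == 1:
            # Assumed rule: only an unambiguous attachment fills the head's slot.
            words[heads[0]].slots.discard(words[i].cat)
        analysis[words[i].form] = [words[j].form for j in heads]
    return analysis, messages

for complete in (True, False):
    analysis, messages = parse(sentence(), complete)
    print("complete" if complete else "greedy", analysis, messages)
```

Here the greedy protocol halves the number of messages but loses the reading in which "with" attaches to "saw", which is the kind of incompleteness traded for efficiency.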

The Complexity of Recognition of Linguistically Adequate Dependency Grammars

Sep 08, 1997

Abstract: Computational complexity results exist for a wide range of phrase-structure-based grammar formalisms, while there is an apparent lack of such results for dependency-based formalisms. Here we adapt a result on the complexity of ID/LP grammars to the dependency framework. In contrast to previous studies on heavily restricted dependency grammars, we prove that recognition (and thus parsing) of linguistically adequate dependency grammars is NP-complete.

* 8 pages, requires LaTeX2e, epsfig, latexsym, amsmath

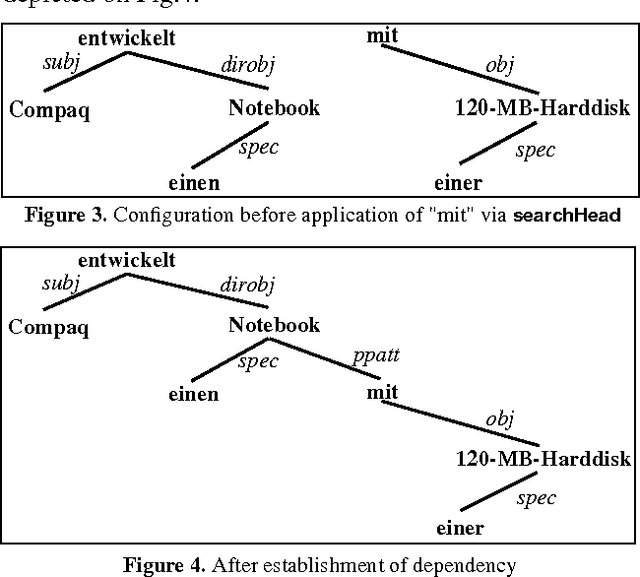

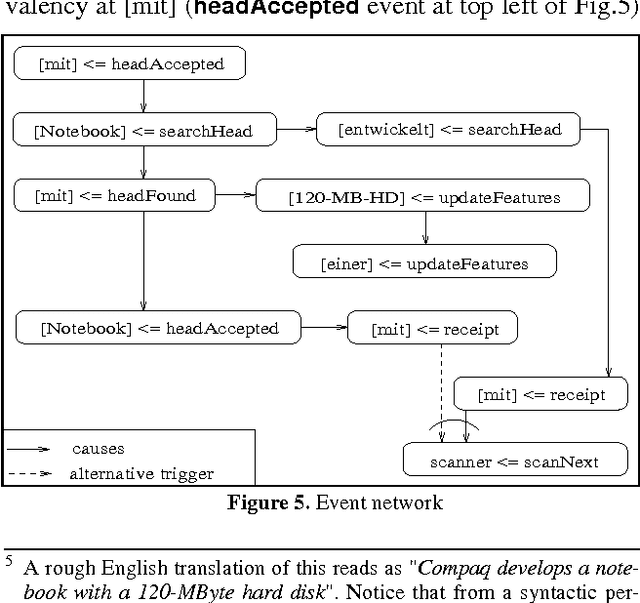

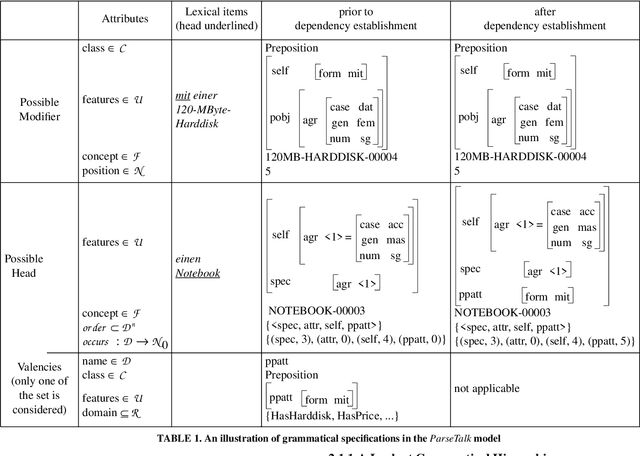

Concurrent Lexicalized Dependency Parsing: A Behavioral View on ParseTalk Events

Oct 24, 1994

Abstract: The behavioral specification of an object-oriented grammar model is considered. The model is based on full lexicalization, head orientation via valency constraints and dependency relations, inheritance as a means for non-redundant lexicon specification, and concurrency of computation. The computation model relies upon the actor paradigm, with concurrency entering through asynchronous message passing between actors. In particular, we elaborate on how the global behavior of a lexically distributed grammar and its corresponding parser can be specified in terms of event type networks and event networks, respectively.

* 68 kB, 5 pages, PostScript
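
One way to read the two levels of specification: an event type network states which kinds of message events may causally trigger which others, and an event network records the events of an actual run. The sketch that follows checks that a recorded event network instantiates a given event type network and is causally acyclic. The message types and the checking procedure are hypothetical illustrations, not definitions taken from the paper.

```python
# Hypothetical event type network: which message type may trigger which.
EVENT_TYPES = {
    "wordArrived": {"searchHead"},
    "searchHead": {"searchHead", "headFound"},
    "headFound": {"headAccepted"},
    "headAccepted": set(),
}

def conforms(events, links):
    """Check an event network against the event type network.

    `events` maps an event id to its message type; `links` lists
    (cause, effect) pairs of event ids. Every link must instantiate an edge
    of EVENT_TYPES, and the causal order must be acyclic.
    """
    for cause, effect in links:
        if events[effect] not in EVENT_TYPES[events[cause]]:
            return False
    # Kahn's algorithm: the network is acyclic iff every event can be removed.
    indegree = {e: 0 for e in events}
    for _, effect in links:
        indegree[effect] += 1
    ready = [e for e, d in indegree.items() if d == 0]
    removed = 0
    while ready:
        e = ready.pop()
        removed += 1
        for cause, effect in links:
            if cause == e:
                indegree[effect] -= 1
                if indegree[effect] == 0:
                    ready.append(effect)
    return removed == len(events)

EVENTS = {1: "wordArrived", 2: "searchHead", 3: "searchHead",
          4: "headFound", 5: "headAccepted"}
LINKS = [(1, 2), (2, 3), (3, 4), (4, 5)]
print(conforms(EVENTS, LINKS))
```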

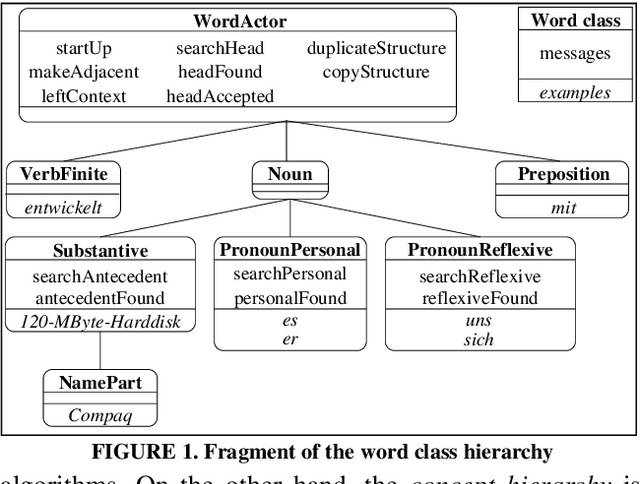

Concurrent Lexicalized Dependency Parsing: The ParseTalk Model

Oct 24, 1994

Abstract: A grammar model for concurrent, object-oriented natural language parsing is introduced. Complete lexical distribution of grammatical knowledge is achieved by building upon the head-oriented notions of valency and dependency, while inheritance mechanisms are used to capture lexical generalizations. The underlying concurrent computation model relies upon the actor paradigm. We consider message-passing protocols for establishing dependency relations and for handling ambiguity.

* 90 kB, 7 pages, PostScript
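
In the actor paradigm each word is an independent object with its own mailbox, and dependency relations are established only by exchanging messages. The sketch that follows models word actors as asyncio tasks; an incoming word asks its left neighbours, nearest first, whether they accept it as a dependent. The class name, message format and toy valency slots are hypothetical, and real ParseTalk protocols (including ambiguity handling) are considerably richer.

```python
import asyncio

class WordActor:
    """A word as an actor with its own mailbox (hypothetical toy valency)."""

    def __init__(self, form, cat, slots):
        self.form, self.cat, self.slots = form, cat, set(slots)
        self.mailbox = asyncio.Queue()

    async def run(self):
        # Messages are processed one at a time; actor state changes only here.
        while True:
            kind, sender, reply = await self.mailbox.get()
            if kind == "stop":
                return
            # kind == "searchHead": accept the sender if a matching slot is open.
            accepted = sender.cat in self.slots
            if accepted:
                self.slots.discard(sender.cat)
            reply.set_result(accepted)

async def search_head(word, left_neighbours):
    """Ask left neighbours, nearest first, to become `word`'s head."""
    loop = asyncio.get_running_loop()
    for candidate in reversed(left_neighbours):
        reply = loop.create_future()
        await candidate.mailbox.put(("searchHead", word, reply))
        if await reply:
            return candidate.form
    return None

async def main():
    words = [WordActor("saw", "V", {"N", "P"}), WordActor("men", "N", {"P"}),
             WordActor("with", "P", {"N"}), WordActor("telescopes", "N", set())]
    tasks = [asyncio.create_task(w.run()) for w in words]
    heads = {}
    # Words enter incrementally, left to right, as in incremental parsing.
    for i, w in enumerate(words[1:], start=1):
        heads[w.form] = await search_head(w, words[:i])
    for w in words:
        await w.mailbox.put(("stop", None, None))
    await asyncio.gather(*tasks)
    return heads

print(asyncio.run(main()))
```

The actors run concurrently as tasks and share no state; the only coupling is the searchHead message and its reply future.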
