Noah H. Paulson

Toward Learning Latent-Variable Representations of Microstructures by Optimizing in Spatial Statistics Space

Feb 16, 2024

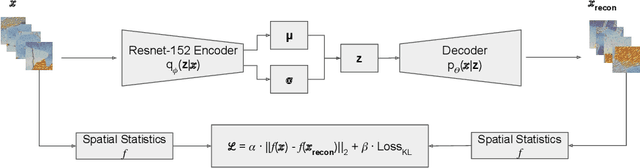

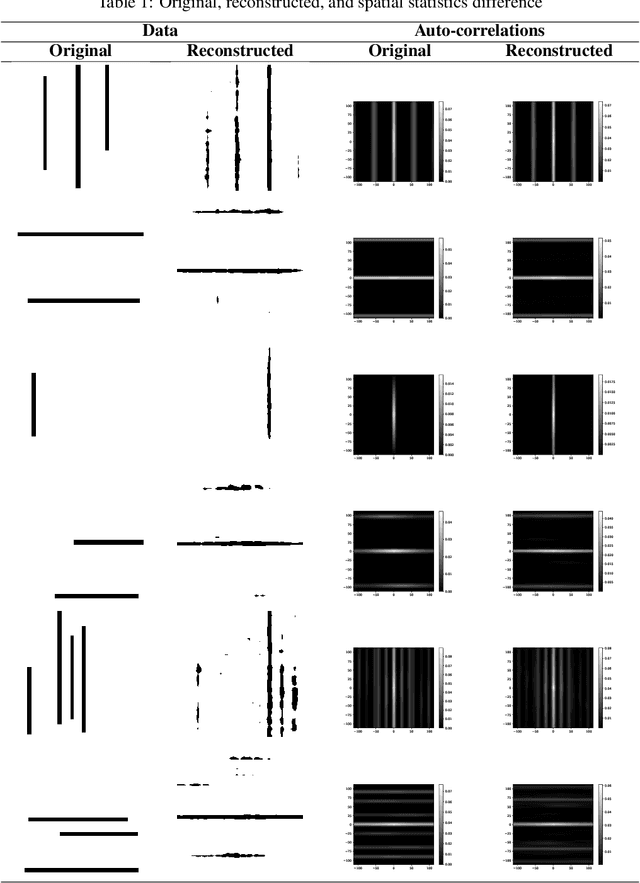

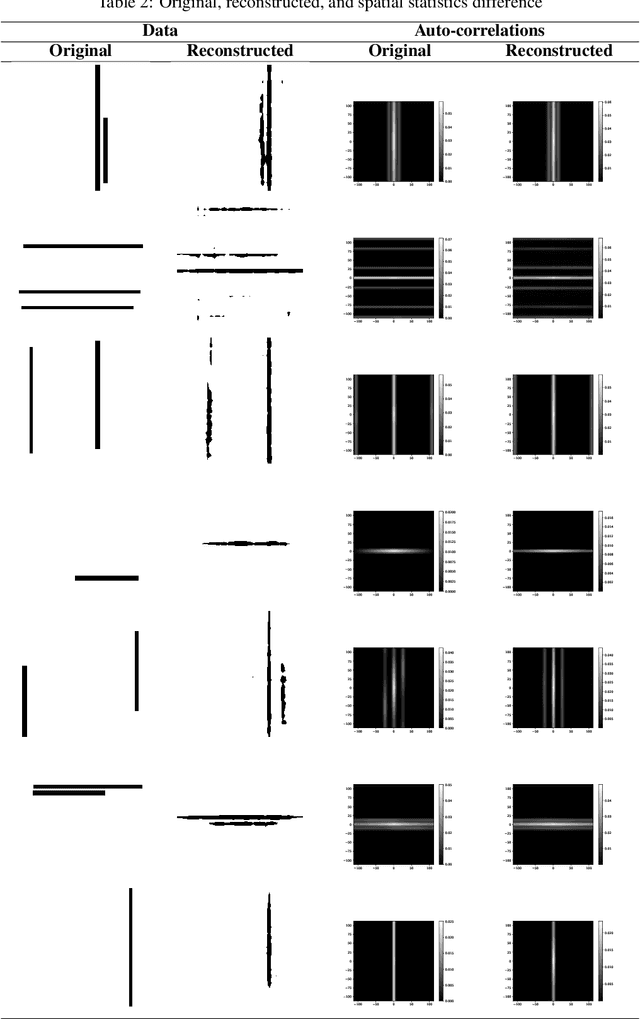

Abstract: In materials science, material development involves evaluating and optimizing the internal structures of the material, generically referred to as microstructures. Microstructures are stochastic, analogous to image textures. A particular microstructure can be well characterized by its spatial statistics, just as an image texture can be characterized by its response to a Fourier-like filter bank. Material design would benefit from low-dimensional representations of microstructures (Paulson et al., 2017). In this work, we train a Variational Autoencoder (VAE) to produce reconstructions of textures that preserve the spatial statistics of the original texture, while not necessarily reconstructing the same image in data space. We accomplish this by adding a differentiable term to the cost function that minimizes the distance between the original and the reconstruction in spatial statistics space. Our experiments indicate that it is possible to train a VAE that minimizes the distance in spatial statistics space between the original and the reconstruction of synthetic images. In future work, we will apply the same techniques to microstructures, with the goal of obtaining low-dimensional representations of material microstructures.
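The idea of comparing images in spatial statistics space rather than pixel space can be illustrated with a minimal sketch. It assumes the spatial statistic is a periodic two-point autocorrelation and that the distance is a mean squared difference; the abstract does not specify either choice. The function names, the `weight` parameter, and the toy stripe image are hypothetical, and the VAE itself (encoder, decoder, KL term) is omitted.

```python
def two_point_autocorrelation(img):
    """Periodic two-point autocorrelation of a 2D list of floats.

    Assumption: this is one common choice of spatial statistic, not
    necessarily the one used in the paper.
    """
    h, w = len(img), len(img[0])
    n = h * w
    stats = []
    for dy in range(h):
        row = []
        for dx in range(w):
            total = 0.0
            for y in range(h):
                for x in range(w):
                    # Product of the pixel and its neighbor at offset (dy, dx),
                    # wrapping around the image edges.
                    total += img[y][x] * img[(y + dy) % h][(x + dx) % w]
            row.append(total / n)
        stats.append(row)
    return stats


def mean_squared_distance(a, b):
    """Mean squared difference between two equally sized 2D lists."""
    h, w = len(a), len(a[0])
    return sum((a[y][x] - b[y][x]) ** 2 for y in range(h) for x in range(w)) / (h * w)


def combined_loss(original, reconstruction, weight=1.0):
    """Pixel-space term plus a weighted spatial-statistics term (hypothetical form)."""
    pixel_term = mean_squared_distance(original, reconstruction)
    stats_term = mean_squared_distance(
        two_point_autocorrelation(original),
        two_point_autocorrelation(reconstruction),
    )
    return pixel_term + weight * stats_term, pixel_term, stats_term


# Toy texture: vertical stripes, and the same stripes shifted one pixel right.
original = [[1.0, 1.0, 0.0, 0.0] for _ in range(4)]
shifted = [row[-1:] + row[:-1] for row in original]

total, pixel_term, stats_term = combined_loss(original, shifted)
print(pixel_term, stats_term)
```

The shifted image differs from the original pixel by pixel, yet its periodic autocorrelation is identical, so only the pixel term is nonzero. Because the statistic is a polynomial in the pixel values, the same computation is differentiable and could in principle be written in an automatic differentiation framework, typically with FFTs in place of the explicit loops.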

Prognosis of Multivariate Battery State of Performance and Health via Transformers

Sep 18, 2023

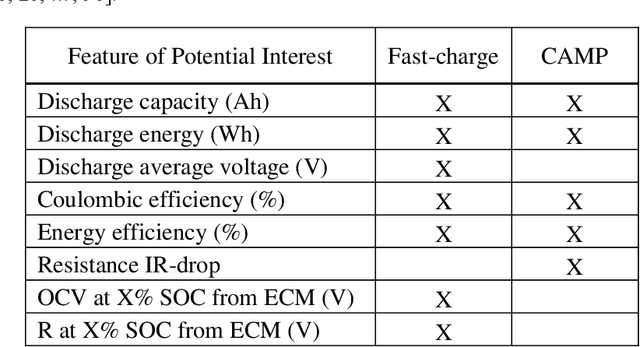

Abstract: Batteries are an essential component in a deeply decarbonized future. Understanding battery performance and "useful life" as a function of design and use is of paramount importance to accelerating adoption. Historically, battery state of health (SOH) was summarized by a single parameter, the fraction of a battery's capacity relative to its initial state. A more useful approach, however, is a comprehensive characterization of its state and complexities, using an interrelated set of descriptors including capacity, energy, ionic and electronic impedances, open circuit voltages, and microstructure metrics. Indeed, predicting across an extensive suite of properties as a function of battery use is a "holy grail" of battery science; it can provide unprecedented insights toward the design of better batteries with reduced experimental effort, and de-risk the energy storage investments that are necessary to meet CO2 reduction targets. In this work, we present a first step in that direction via deep transformer networks for the prediction of 28 battery state of health descriptors using two cycling datasets representing six lithium-ion cathode chemistries (LFP, NMC111, NMC532, NMC622, HE5050, and 5Vspinel), multiple electrolyte/anode compositions, and different charge-discharge scenarios. The accuracy of these predictions versus battery life (with an unprecedented mean absolute error of 19 cycles in predicting end of life for an LFP fast-charging dataset) illustrates the promise of deep learning towards providing deeper understanding and control of battery health.
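The single-parameter SOH and the end-of-life error metric mentioned above can be made concrete with a small sketch. It assumes end of life is the first cycle at which capacity falls below 80% of its initial value; that threshold is a common convention but is not stated in the abstract. All function names and numbers below are hypothetical and purely illustrative, not data from the paper.

```python
def state_of_health(capacities):
    """Capacity at each cycle as a fraction of the first-cycle capacity."""
    initial = capacities[0]
    return [c / initial for c in capacities]


def end_of_life_cycle(capacities, threshold=0.8):
    """First 1-indexed cycle whose SOH drops below the threshold, else None.

    Assumption: the 0.8 threshold is illustrative, not taken from the paper.
    """
    for cycle, soh in enumerate(state_of_health(capacities), start=1):
        if soh < threshold:
            return cycle
    return None


def mean_absolute_error(predicted, actual):
    """Average absolute difference between predicted and actual values."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# Made-up capacity fade curve (Ah) for one cell.
capacities = [2.0, 1.9, 1.8, 1.7, 1.5]
print(end_of_life_cycle(capacities))

# Made-up predicted vs. observed end-of-life cycles for two cells.
print(mean_absolute_error([100, 210], [110, 200]))
```

A mean absolute error expressed in cycles, as in the abstract, is this last quantity computed over the cells of a test set.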
