Niranjan Sitapure

Exploring Different Time-series-Transformer (TST) Architectures: A Case Study in Battery Life Prediction for Electric Vehicles (EVs)

Aug 07, 2023

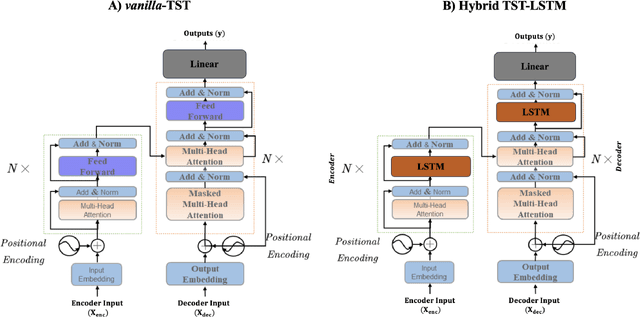

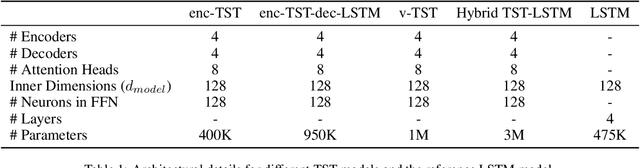

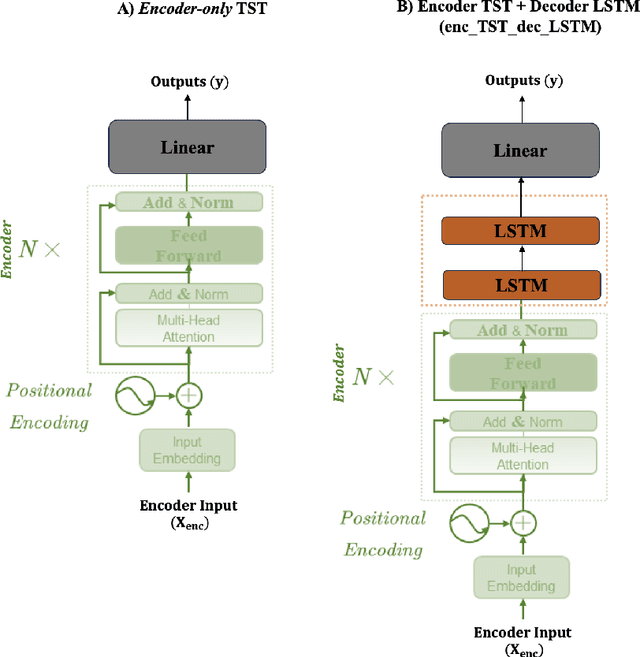

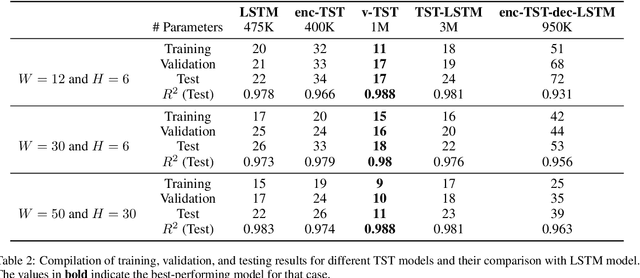

Abstract: In recent years, battery technology for electric vehicles (EVs) has been a major focus, with a significant emphasis on developing new battery materials and chemistries. However, accurately predicting key battery parameters, such as state-of-charge (SOC) and temperature, remains a challenge for constructing advanced battery management systems (BMS). Existing battery models do not comprehensively cover all parameters affecting battery performance, including non-battery-related factors like ambient temperature, cabin temperature, elevation, and regenerative braking during EV operation. Due to the difficulty of incorporating these auxiliary parameters into traditional models, a data-driven approach is suggested. Time-series-transformers (TSTs), leveraging multiheaded attention and a parallelization-friendly architecture, are explored alongside LSTM models. Novel TST architectures, including encoder TST + decoder LSTM and a hybrid TST-LSTM, are also developed and compared against existing models. A dataset comprising 72 driving trips in a BMW i3 (60 Ah) is used to address battery life prediction in EVs, aiming to create accurate TST models that incorporate environmental, battery, vehicle driving, and heating circuit data to predict SOC and battery temperature for future time steps.

Introducing Hybrid Modeling with Time-series-Transformers: A Comparative Study of Series and Parallel Approach in Batch Crystallization

Jul 25, 2023

Abstract: Most existing digital twins rely on data-driven black-box models, predominantly using deep, recurrent, and convolutional neural networks (DNNs, RNNs, and CNNs) to capture the dynamics of chemical systems. However, these models have not seen the light of day, given the hesitance to deploy a black-box tool directly in practice due to safety and operational issues. To tackle this conundrum, hybrid models combining first-principles physics-based dynamics with machine learning (ML) models have increased in popularity as they are considered a 'best of both worlds' approach. That said, existing simple DNN models are not adept at long-term time-series predictions or at utilizing contextual information on the trajectory of the process dynamics. Recently, attention-based time-series transformers (TSTs) that leverage a multi-headed attention mechanism and positional encoding to capture long-term and short-term changes in process states have shown high predictive performance. Thus, a first-of-a-kind, TST-based hybrid framework has been developed for batch crystallization, demonstrating improved accuracy and interpretability compared to traditional black-box models. Specifically, two different configurations (i.e., series and parallel) of TST-based hybrid models are constructed and compared, which show a normalized-mean-square-error (NMSE) in the range of $[10, 50]\times10^{-4}$ and an $R^2$ value over 0.99. Given the growing adoption of digital twins, next-generation attention-based hybrid models are expected to play a crucial role in shaping the future of chemical manufacturing.

CrystalGPT: Enhancing system-to-system transferability in crystallization prediction and control using time-series-transformers

May 31, 2023

Abstract: For prediction and real-time control tasks, machine-learning (ML)-based digital twins are frequently employed. However, while these models are typically accurate, they are custom-designed for individual systems, making system-to-system (S2S) transferability difficult. This occurs even when substantial similarities exist in the process dynamics across different chemical systems. To address this challenge, we developed a novel time-series-transformer (TST) framework that exploits the powerful transfer learning capabilities inherent in transformer algorithms. This was demonstrated using readily available process data obtained from different crystallizers operating under various operational scenarios. Using this extensive dataset, we trained a TST model (CrystalGPT) to exhibit remarkable S2S transferability not only across all pre-established systems, but also to an unencountered system. CrystalGPT achieved a cumulative error across all systems that is eight times lower than that of existing ML models. Additionally, we coupled CrystalGPT with a predictive controller to reduce the variance in setpoint tracking to just 1%.
