Nikos Kostagiolas

Locality-preserving Directions for Interpreting the Latent Space of Satellite Image GANs

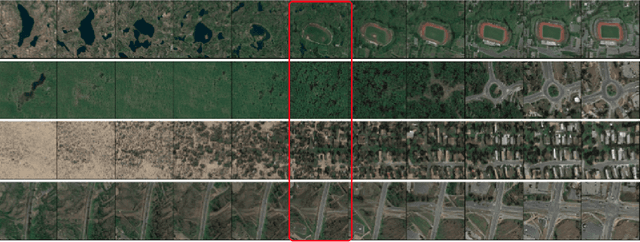

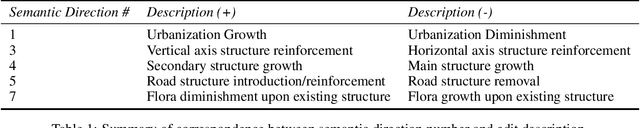

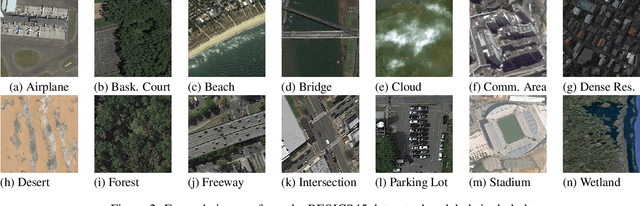

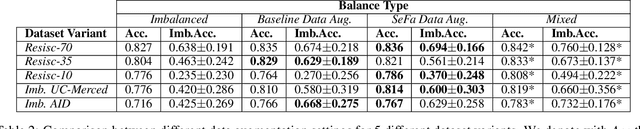

Sep 26, 2023

Abstract: We present a locality-aware method for interpreting the latent space of wavelet-based Generative Adversarial Networks (GANs) that captures the large spatial and spectral variability characteristic of satellite imagery. By focusing on preserving locality, the proposed method decomposes the weight space of pre-trained GANs and recovers interpretable directions that correspond to high-level semantic concepts (such as urbanization, structure density, and flora presence), which can subsequently be used for guided synthesis of satellite imagery. In contrast to commonly used approaches that focus on capturing the variability of the weight space in a reduced-dimensionality space (i.e., those based on Principal Component Analysis, PCA), we show that preserving locality leads to vectors with different angles that are more robust to artifacts and better preserve class information. Via a set of quantitative and qualitative examples, we further show that the proposed approach can outperform both baseline geometric augmentations and global, PCA-based approaches for data synthesis in the context of data augmentation for satellite scene classification.

Unsupervised Discovery of Semantic Concepts in Satellite Imagery with Style-based Wavelet-driven Generative Models

Aug 03, 2022

Abstract: In recent years, considerable advancements have been made in the area of Generative Adversarial Networks (GANs), particularly with the advent of style-based architectures that address many key shortcomings - both in terms of modeling capabilities and network interpretability. Despite these improvements, the adoption of such approaches in the domain of satellite imagery is not straightforward. Typical vision datasets used in generative tasks are well-aligned and annotated, and exhibit limited variability. In contrast, satellite imagery exhibits great spatial and spectral variability and a wide presence of fine, high-frequency details, while the tedious nature of annotating it leads to annotation scarcity - further motivating developments in unsupervised learning. In this light, we present the first pre-trained style- and wavelet-based GAN model that can readily synthesize a wide gamut of realistic satellite images in a variety of settings and conditions - while also preserving high-frequency information. Furthermore, we show that by analyzing the intermediate activations of our network, one can discover a multitude of interpretable semantic directions that facilitate the guided synthesis of satellite images in terms of high-level concepts (e.g., urbanization) without using any form of supervision. Via a set of qualitative and quantitative experiments, we demonstrate the efficacy of our framework in terms of suitability for downstream tasks (e.g., data augmentation), quality of synthetic imagery, and generalization to unseen datasets.
