Nikolaos Lykousas

The potential of LLM-generated reports in DevSecOps

Oct 02, 2024

Abstract: Alert fatigue is a common issue faced by software teams using the DevSecOps paradigm. The overwhelming number of warnings and alerts generated by security and code scanning tools leads to desensitization and diminished responsiveness to security warnings, particularly in smaller teams where resources are limited, potentially exposing systems to vulnerabilities. This paper explores the potential of LLMs in generating actionable security reports that emphasize the financial impact and consequences of detected security issues, such as credential leaks, if they remain unaddressed. A survey conducted among developers indicates that LLM-generated reports significantly enhance the likelihood of immediate action on security issues by providing clear, comprehensive, and motivating insights. Integrating these reports into DevSecOps workflows can mitigate attention saturation and alert fatigue, ensuring that critical security warnings are addressed effectively.

Large-scale analysis of grooming in modern social networks

Apr 16, 2020

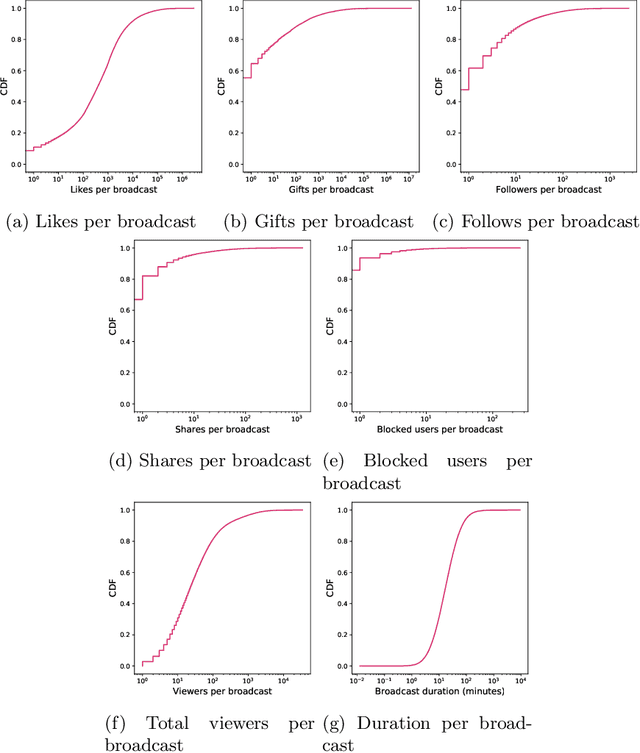

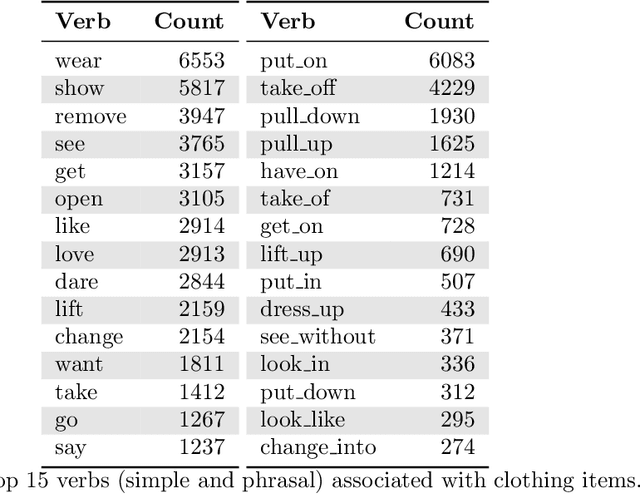

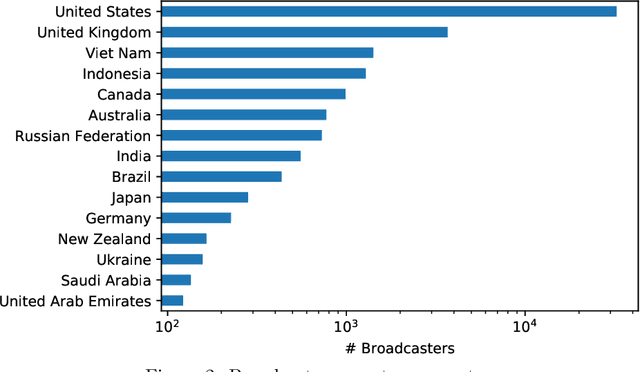

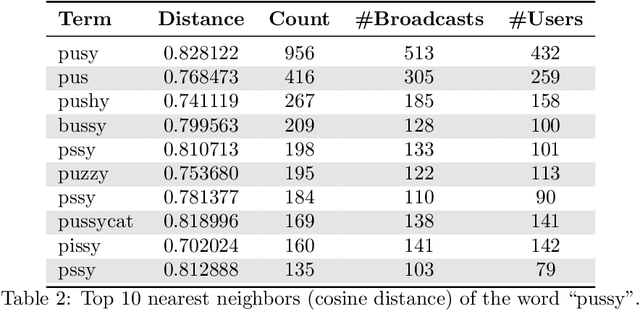

Abstract: Social networks are evolving to engage their users more by providing them with more functionalities. One of the most attractive is streaming. Users may broadcast part of their daily lives to thousands of others worldwide and interact with them in real time. Unfortunately, this feature is reportedly exploited for grooming. In this work, we provide the first in-depth analysis of this problem for social live streaming services. More precisely, using a dataset that we collected, we identify predatory behaviours and grooming on chats that bypassed the moderation mechanisms of LiveMe, the service under investigation. Beyond the traditional text approaches, we also investigate the relevance of emojis in this context, as well as the user interactions through the gift mechanisms of LiveMe. Finally, our analysis indicates the possibility of grooming towards minors, showing the extent of the problem in such platforms.
