Nijat Mehdiyev

Assessing the Business Process Modeling Competences of Large Language Models

Jan 29, 2026

Abstract: The creation of Business Process Model and Notation (BPMN) models is a complex and time-consuming task requiring both domain knowledge and proficiency in modeling conventions. Recent advances in large language models (LLMs) have significantly expanded the possibilities for generating BPMN models directly from natural language, building upon earlier text-to-process methods with enhanced capabilities in handling complex descriptions. However, there is a lack of systematic evaluations of LLM-generated process models. Current efforts either use LLM-as-a-judge approaches or do not consider established dimensions of model quality. To this end, we introduce BEF4LLM, a novel LLM evaluation framework comprising four perspectives: syntactic quality, pragmatic quality, semantic quality, and validity. Using BEF4LLM, we conduct a comprehensive analysis of open-source LLMs and benchmark their performance against human modeling experts. Results indicate that LLMs excel in syntactic and pragmatic quality, while humans outperform in semantic aspects; however, the differences in scores are relatively modest, highlighting LLMs' competitive potential despite challenges in validity and semantic quality. The insights highlight current strengths and limitations of using LLMs for BPMN modeling and guide future model development and fine-tuning. Addressing these areas is essential for advancing the practical deployment of LLMs in business process modeling.

From Theory to Practice: Real-World Use Cases on Trustworthy LLM-Driven Process Modeling, Prediction and Automation

Jun 04, 2025

Abstract: Traditional Business Process Management (BPM) struggles with rigidity, opacity, and scalability in dynamic environments while emerging Large Language Models (LLMs) present transformative opportunities alongside risks. This paper explores four real-world use cases that demonstrate how LLMs, augmented with trustworthy process intelligence, redefine process modeling, prediction, and automation. Grounded in early-stage research projects with industrial partners, the work spans manufacturing, modeling, life-science, and design processes, addressing domain-specific challenges through human-AI collaboration. In manufacturing, an LLM-driven framework integrates uncertainty-aware explainable Machine Learning (ML) with interactive dialogues, transforming opaque predictions into auditable workflows. For process modeling, conversational interfaces democratize BPMN design. Pharmacovigilance agents automate drug safety monitoring via knowledge-graph-augmented LLMs. Finally, sustainable textile design employs multi-agent systems to navigate regulatory and environmental trade-offs. We intend to examine tensions between transparency and efficiency, generalization and specialization, and human agency versus automation. By mapping these trade-offs, we advocate for context-sensitive integration prioritizing domain needs, stakeholder values, and iterative human-in-the-loop workflows over universal solutions. This work provides actionable insights for researchers and practitioners aiming to operationalize LLMs in critical BPM environments.

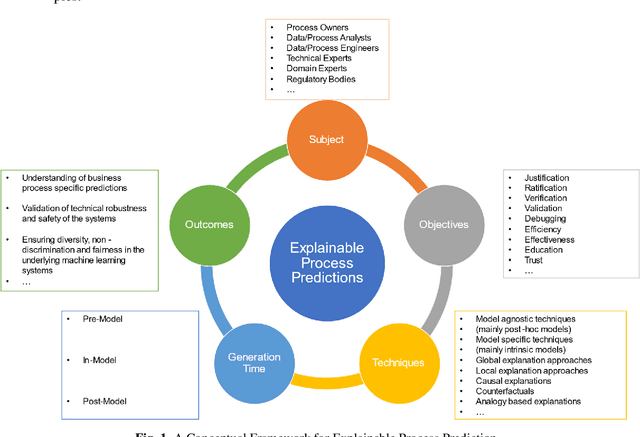

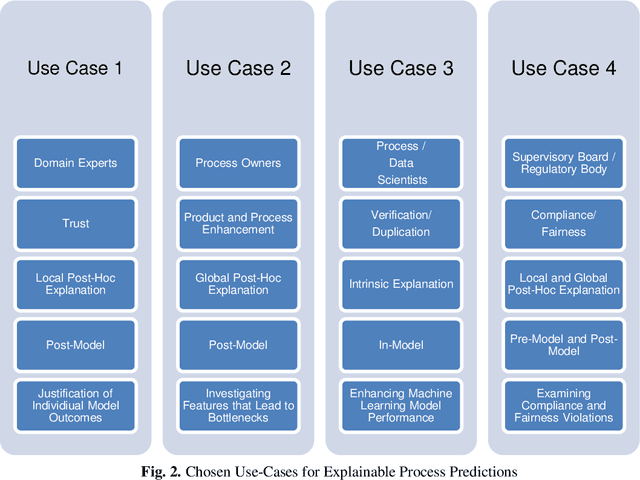

Interpretable and Explainable Machine Learning Methods for Predictive Process Monitoring: A Systematic Literature Review

Dec 29, 2023

Abstract: This paper presents a systematic literature review (SLR) on the explainability and interpretability of machine learning (ML) models within the context of predictive process mining, using the PRISMA framework. Given the rapid advancement of artificial intelligence (AI) and ML systems, understanding the "black-box" nature of these technologies has become increasingly critical. Focusing specifically on the domain of process mining, this paper delves into the challenges of interpreting ML models trained with complex business process data. We differentiate between intrinsically interpretable models and those that require post-hoc explanation techniques, providing a comprehensive overview of the current methodologies and their applications across various application domains. Through a rigorous bibliographic analysis, this research offers a detailed synthesis of the state of explainability and interpretability in predictive process mining, identifying key trends, challenges, and future directions. Our findings aim to equip researchers and practitioners with a deeper understanding of how to develop and implement more trustworthy, transparent, and effective intelligent systems for predictive process analytics.

Quantifying and Explaining Machine Learning Uncertainty in Predictive Process Monitoring: An Operations Research Perspective

Apr 13, 2023

Abstract: This paper introduces a comprehensive, multi-stage machine learning methodology that effectively integrates information systems and artificial intelligence to enhance decision-making processes within the domain of operations research. The proposed framework adeptly addresses common limitations of existing solutions, such as the neglect of data-driven estimation for vital production parameters, the exclusive generation of point forecasts without considering model uncertainty, and the lack of explanations regarding the sources of such uncertainty. Our approach employs Quantile Regression Forests for generating interval predictions, alongside both local and global variants of SHapley Additive Explanations for the examined predictive process monitoring problem. The practical applicability of the proposed methodology is substantiated through a real-world production planning case study, emphasizing the potential of prescriptive analytics in refining decision-making procedures. This paper accentuates the imperative of addressing these challenges to fully harness the extensive and rich data resources accessible for well-informed decision-making.

Communicating Uncertainty in Machine Learning Explanations: A Visualization Analytics Approach for Predictive Process Monitoring

Apr 12, 2023

Abstract: As data-driven intelligent systems advance, the need for reliable and transparent decision-making mechanisms has become increasingly important. Therefore, it is essential to integrate uncertainty quantification and model explainability approaches to foster trustworthy business and operational process analytics. This study explores how model uncertainty can be effectively communicated in global and local post-hoc explanation approaches, such as Partial Dependence Plots (PDP) and Individual Conditional Expectation (ICE) plots. In addition, this study examines appropriate visualization analytics approaches to facilitate such methodological integration. By combining these two research directions, decision-makers can not only justify the plausibility of explanation-driven actionable insights but also validate their reliability. Finally, the study includes expert interviews to assess the suitability of the proposed approach and designed interface for a real-world predictive process monitoring problem in the manufacturing domain.

Local Post-Hoc Explanations for Predictive Process Monitoring in Manufacturing

Sep 22, 2020

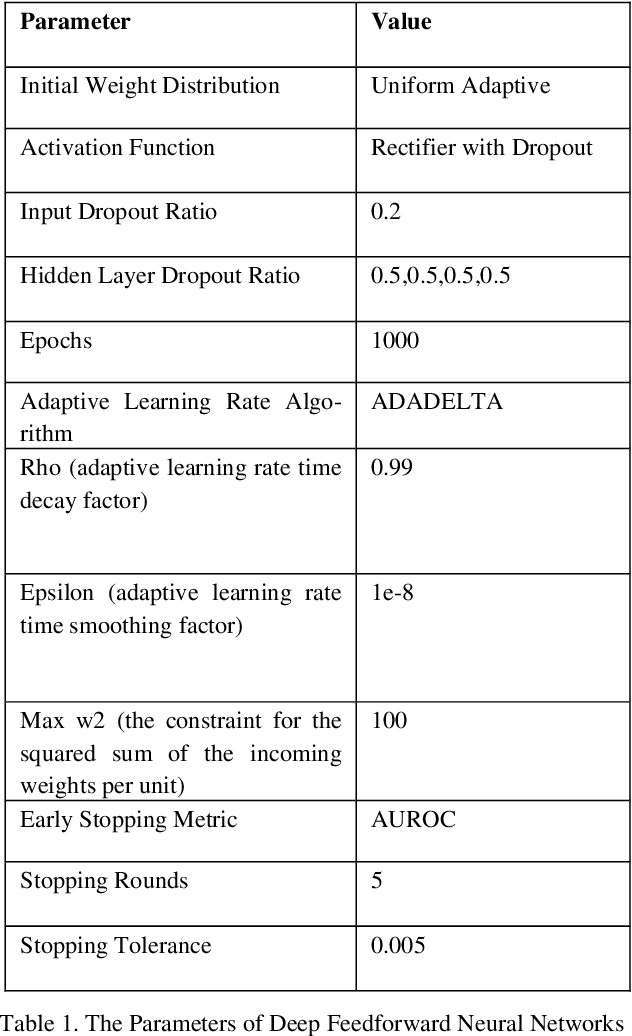

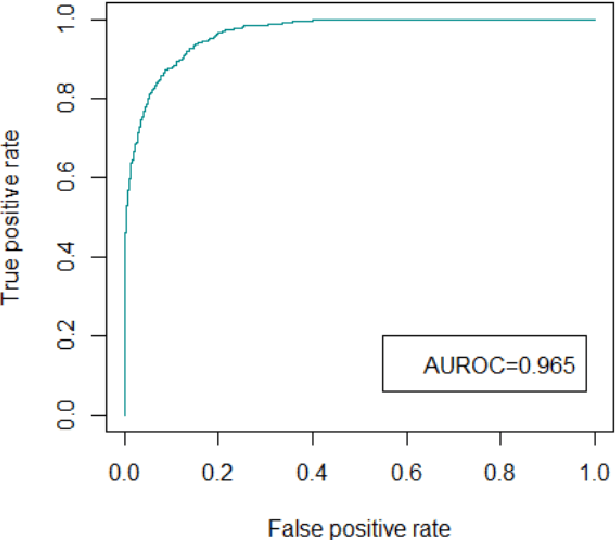

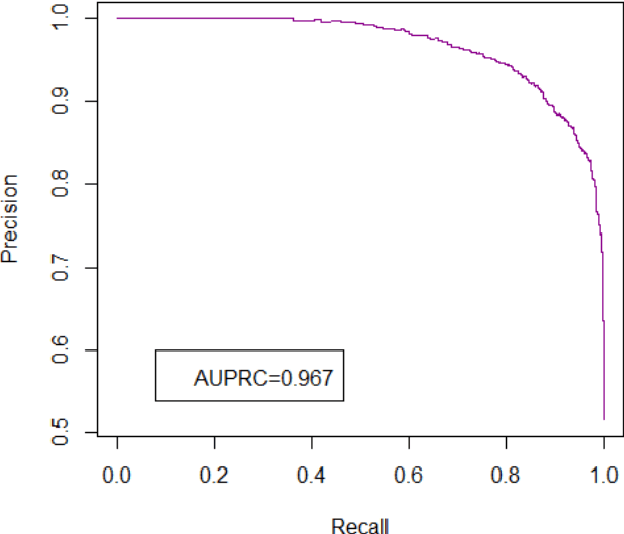

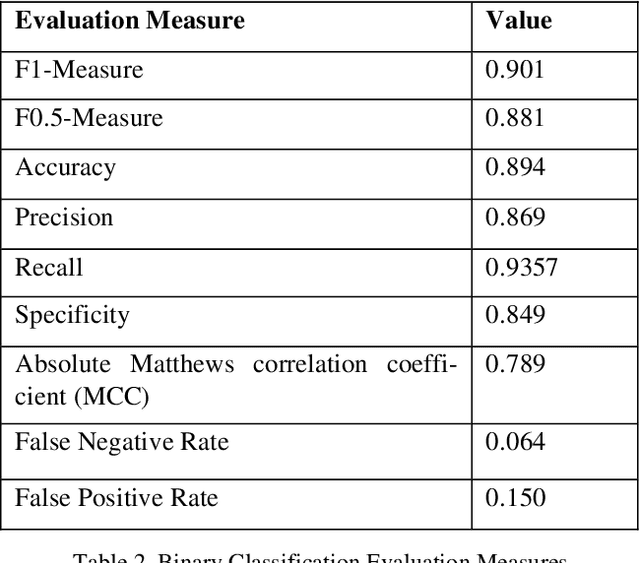

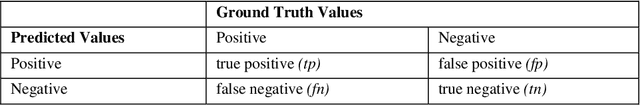

Abstract: This study proposes an innovative explainable process prediction solution to facilitate data-driven decision-making for process planning in manufacturing. After integrating the top-floor and shop-floor data obtained from various enterprise information systems, especially Manufacturing Execution Systems, a deep neural network was applied to predict the process outcomes. Since we aim to operationalize the delivered predictive insights by embedding them into decision-making processes, it is essential to generate relevant explanations for domain experts. To this end, two local post-hoc explanation approaches, Shapley Values and Individual Conditional Expectation (ICE) plots, are applied, which are expected to enhance decision-making capabilities by enabling experts to examine explanations from different perspectives. After assessing the predictive strength of the adopted deep neural networks with relevant binary classification evaluation measures, a discussion of the generated explanations is provided. Lastly, a brief discussion of ongoing activities within the current emerging application and of some aspects of the future implementation plan concludes the study.

Explainable Artificial Intelligence for Process Mining: A General Overview and Application of a Novel Local Explanation Approach for Predictive Process Monitoring

Sep 12, 2020

Abstract: Contemporary process-aware information systems are able to record the activities generated during process execution. To leverage these process-specific, fine-grained data, process mining has recently emerged as a promising research discipline. As an important branch of process mining, predictive business process management pursues the objective of generating forward-looking, predictive insights to shape business processes. In this study, we propose a conceptual framework that seeks to establish and promote an understanding of the decision-making environment, the underlying business processes, and the nature of the user characteristics for developing explainable business process prediction solutions. Consequently, with regard to the theoretical and practical implications of the framework, this study proposes a novel local post-hoc explanation approach for a deep learning classifier that is expected to assist domain experts in justifying the model decisions. In contrast to alternative popular perturbation-based local explanation approaches, this study defines the local regions from the validation dataset by using the intermediate latent space representations learned by the deep neural networks. To validate the applicability of the proposed explanation method, real-life process log data from Volvo IT Belgium's incident management system are used. The adopted deep learning classifier achieves good performance, with an Area Under the ROC Curve of 0.94. The generated local explanations are also visualized and presented with relevant evaluation measures that are expected to increase the users' trust in the black-box model.
