Nicolas Vercheval

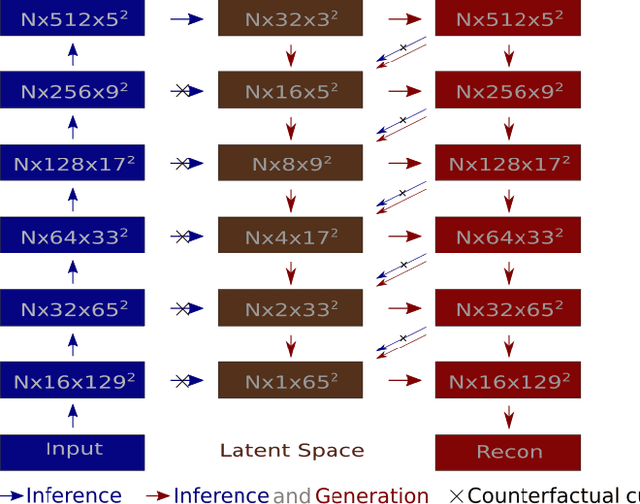

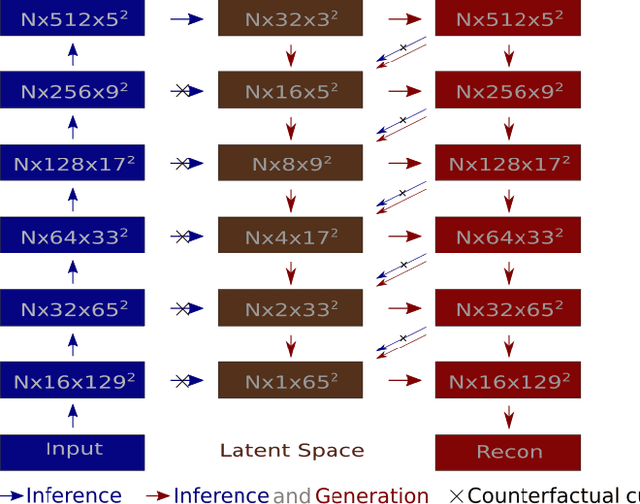

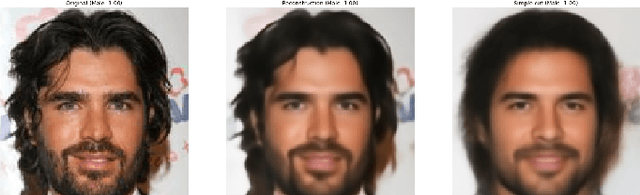

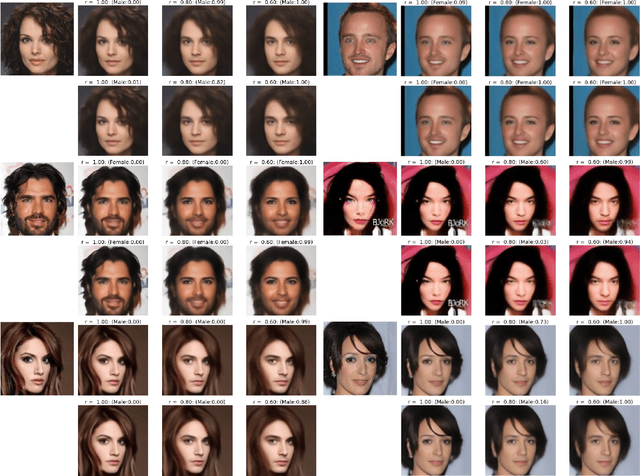

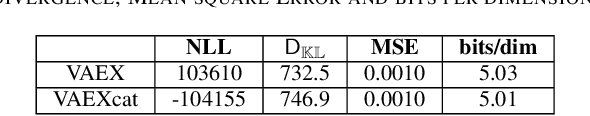

Hierarchical Variational Autoencoder for Visual Counterfactuals

Feb 01, 2021

Figures and Tables:

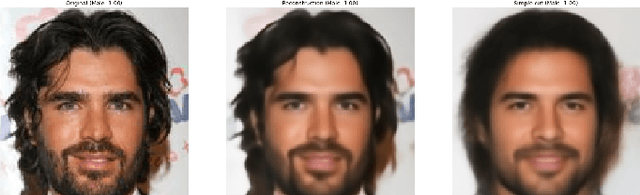

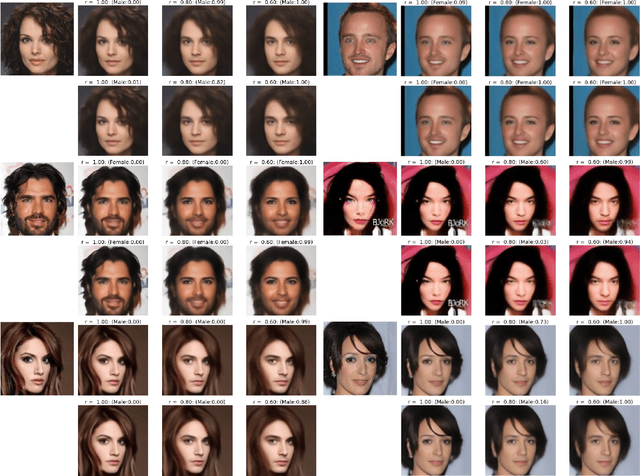

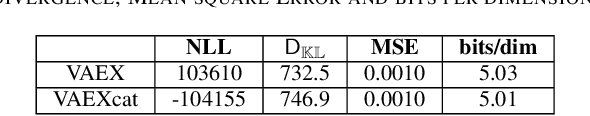

Abstract: Conditional Variational Autoencoders (CVAEs) are gathering significant attention as an Explainable Artificial Intelligence (XAI) tool. The codes in the latent space provide a theoretically sound way to produce counterfactuals, i.e. alterations resulting from an intervention on a targeted semantic feature. Applying them to real images requires more complex models, such as Hierarchical CVAEs. This comes with a challenge, as naive conditioning is no longer effective. In this paper, we show how relaxing the effect of the posterior leads to successful counterfactuals, and we introduce VAEX, a Hierarchical VAE designed for this approach that can visually audit a classifier in applications.

* 5 pages, 3 figures