Nick Merrill

The Entoptic Field Camera as Metaphor-Driven Research-through-Design with AI Technologies

Jan 23, 2023

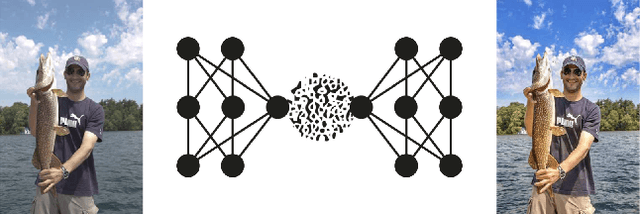

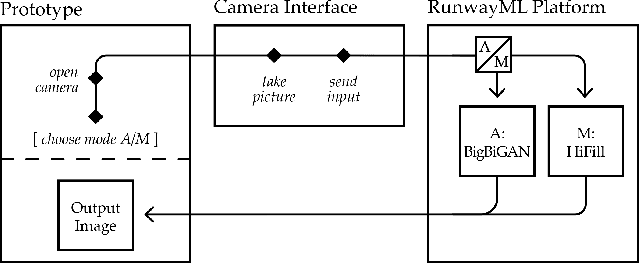

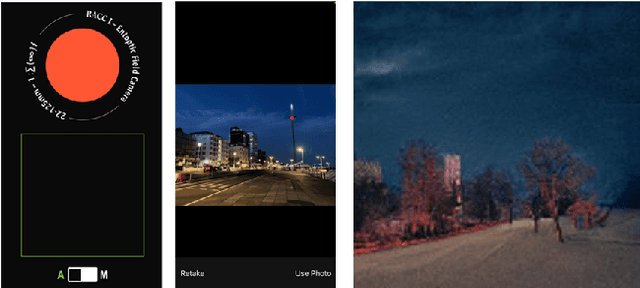

Abstract: Artificial intelligence (AI) technologies are widely deployed in smartphone photography, and prompt-based image synthesis models have rapidly become commonplace. In this paper, we describe a Research-through-Design (RtD) project which explores this shift in the means and modes of image production via the creation and use of the Entoptic Field Camera. Entoptic phenomena usually refer to perceptions of floaters or bright blue dots stemming from the physiological interplay of the eye and brain. We use the term entoptic as a metaphor to investigate how the material interplay of data and models in AI technologies shapes human experiences of reality. Through our case study using first-person design and a field study, we offer implications for critical, reflective, more-than-human and ludic design to engage AI technologies; the conceptualisation of an RtD research space which contributes to AI literacy discourses; and outline a research trajectory concerning materiality and design affordances of AI technologies.

Seeing Like a Toolkit: How Toolkits Envision the Work of AI Ethics

Feb 17, 2022

Abstract: Numerous toolkits have been developed to support ethical AI development. However, toolkits, like all tools, encode assumptions in their design about what work should be done and how. In this paper, we conduct a qualitative analysis of 27 AI ethics toolkits to critically examine how the work of ethics is imagined and how it is supported by these toolkits. Specifically, we examine the discourses toolkits rely on when talking about ethical issues, who they imagine should do the work of ethics, and how they envision the work practices involved in addressing ethics. We find that AI ethics toolkits largely frame the work of AI ethics to be technical work for individual technical practitioners, despite calls for engaging broader sets of stakeholders in grappling with social aspects of AI ethics, and without contending with the organizational and political implications of AI ethics work in practice. Among all toolkits, we identify a mismatch between the imagined work of ethics and the support the toolkits provide for doing that work. We identify a lack of guidance around how to navigate organizational power dynamics as they relate to performing ethical work. We use these omissions to chart future work for researchers and designers of AI ethics toolkits.

Machine Learning Uncertainty as a Design Material: A Post-Phenomenological Inquiry

Jan 11, 2021

Abstract: Design research is important for understanding and interrogating how emerging technologies shape human experience. However, design research with Machine Learning (ML) is relatively underdeveloped. Crucially, designers have not found a grasp on ML uncertainty as a design opportunity rather than an obstacle. The technical literature points to data and model uncertainties as two main properties of ML. Through post-phenomenology, we position uncertainty as one defining material attribute of ML processes which mediate human experience. To understand ML uncertainty as a design material, we investigate four design research case studies involving ML. We derive three provocative concepts: thingly uncertainty: ML-driven artefacts have uncertain, variable relations to their environments; pattern leakage: ML uncertainty can lead to patterns shaping the world they are meant to represent; and futures creep: ML technologies texture human relations to time with uncertainty. Finally, we outline design research trajectories and sketch a post-phenomenological approach to human-ML relations.
