Neil Chulpongsatorn

Augmented Math: Authoring AR-Based Explorable Explanations by Augmenting Static Math Textbooks

Jul 30, 2023

Abstract: We introduce Augmented Math, a machine learning-based approach to authoring AR explorable explanations by augmenting static math textbooks without programming. To augment a static document, our system first extracts mathematical formulas and figures from a given document using optical character recognition (OCR) and computer vision. By binding and manipulating these extracted contents, the user can see the interactive animation overlaid onto the document through mobile AR interfaces. This empowers non-technical users, such as teachers or students, to transform existing math textbooks and handouts into on-demand and personalized explorable explanations. To design our system, we first analyzed existing explorable math explanations to identify common design strategies. Based on the findings, we developed a set of augmentation techniques that can be automatically generated from the extracted content: 1) dynamic values, 2) interactive figures, 3) relationship highlights, 4) concrete examples, and 5) step-by-step hints. To evaluate our system, we conducted two user studies: preliminary user testing and expert interviews. The study results confirm that our system enables more engaging experiences for learning math concepts.

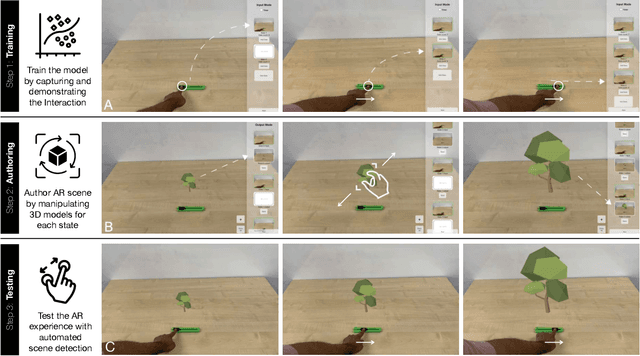

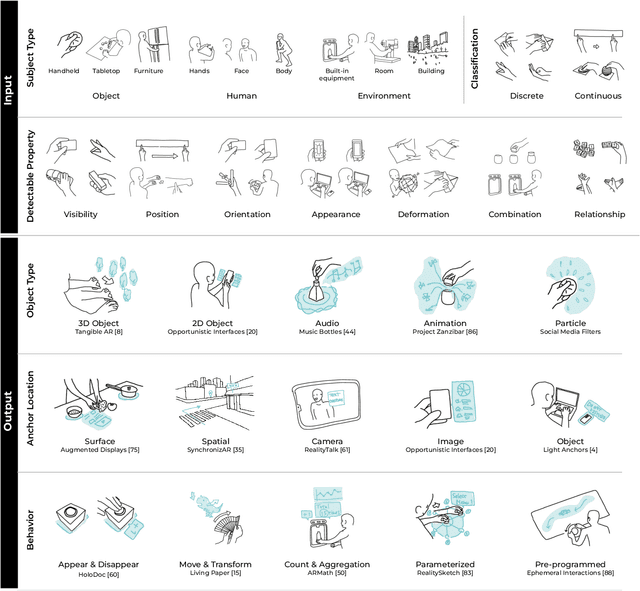

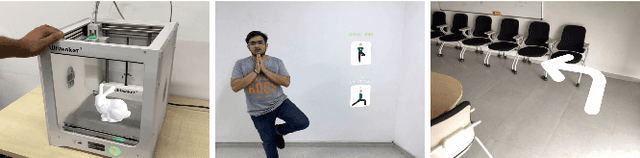

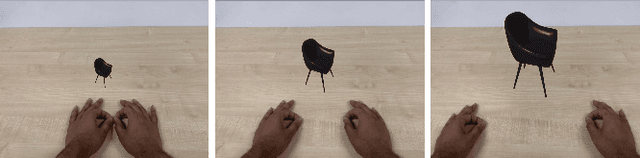

Teachable Reality: Prototyping Tangible Augmented Reality with Everyday Objects by Leveraging Interactive Machine Teaching

Feb 21, 2023

Abstract: This paper introduces Teachable Reality, an augmented reality (AR) prototyping tool for creating interactive tangible AR applications with arbitrary everyday objects. Teachable Reality leverages vision-based interactive machine teaching (e.g., Teachable Machine), which captures real-world interactions for AR prototyping. It identifies the user-defined tangible and gestural interactions using an on-demand computer vision model. Based on this, the user can easily create functional AR prototypes without programming, enabled by a trigger-action authoring interface. Our approach thus offers flexibility, customizability, and generalizability for tangible AR applications, addressing the limitations of current marker-based approaches. We explore the design space and demonstrate various AR prototypes, which include tangible and deformable interfaces, context-aware assistants, and body-driven AR applications. The results of our user study and expert interviews confirm that our approach can lower the barrier to creating functional AR prototypes while also allowing flexible and general-purpose prototyping experiences.
