Nebojša Malešević

Body movement to sound interface with vector autoregressive hierarchical hidden Markov models

Oct 26, 2016

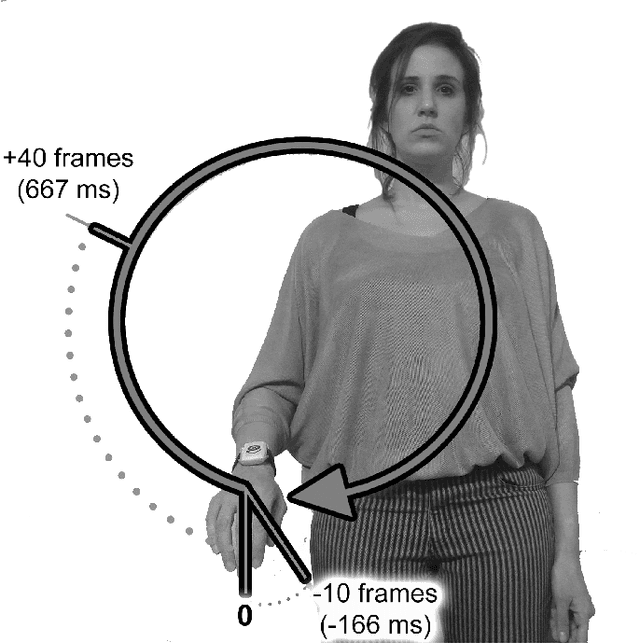

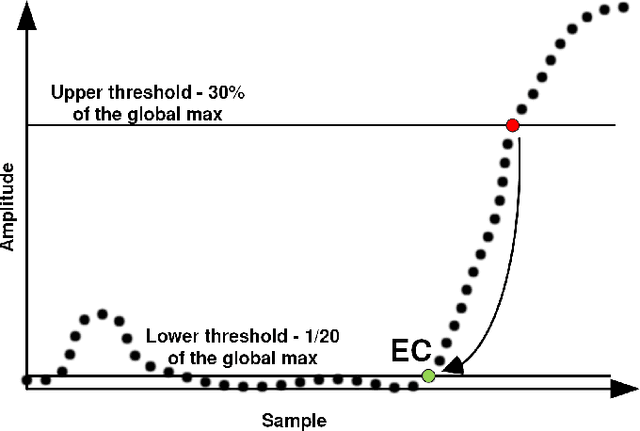

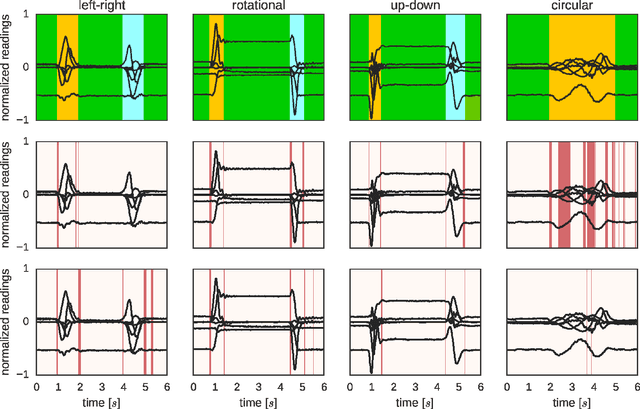

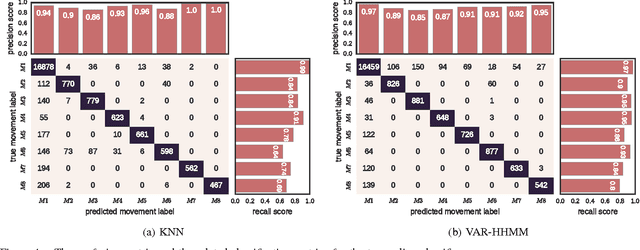

Abstract: Interfacing a person's kinetic actions with the actions of a machine system is an important research topic in many application areas. One of the key factors for intimate human-machine interaction is the ability of the control algorithm to detect and classify different user commands with the shortest possible latency, thus making a highly correlated link between cause and effect. In our research, we focused on the task of mapping user kinematic actions into sound samples. The presented methodology relies on wireless sensor nodes equipped with inertial measurement units and a real-time algorithm dedicated to the early detection and classification of a variety of movements/gestures performed by a user. The core algorithm is based on approximate Bayesian inference of Vector Autoregressive Hierarchical Hidden Markov Models (VAR-HHMM), where the model database is derived from a set of motion gestures. The performance of the algorithm was compared with an online version of the K-nearest neighbours (KNN) algorithm, using offline expert-based classification as the benchmark. In almost all of the evaluation metrics (e.g. confusion matrix, recall and precision scores) the VAR-HHMM algorithm outperformed KNN. Furthermore, the VAR-HHMM algorithm, in some cases, achieved faster movement onset detection than the offline standard. The proposed concept, although envisioned for a movement-to-sound application, could be implemented in other human-machine interfaces.
