Nazanin Fouladgar

SSET: Swapping-Sliding Explanation for Time Series Classifiers in Affect Detection

Oct 16, 2024

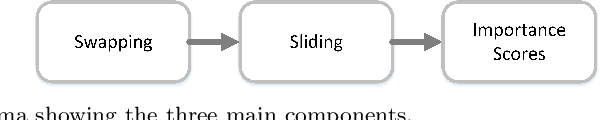

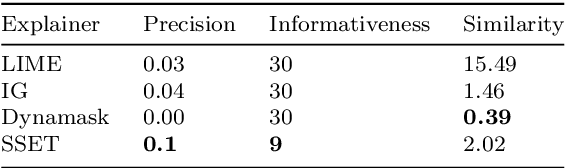

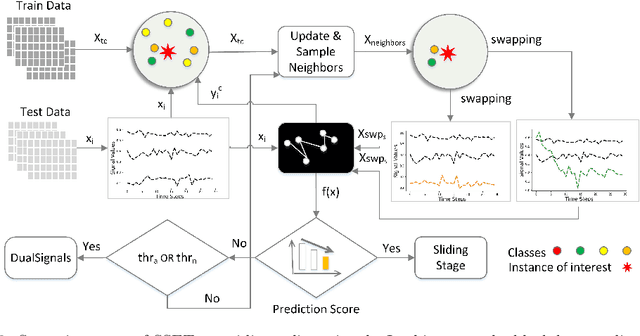

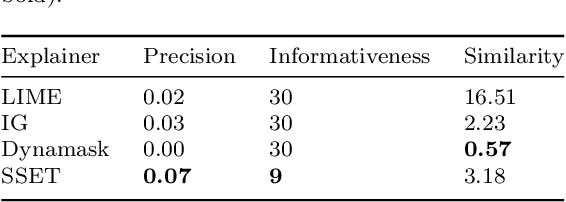

Abstract:Local explanation of machine learning (ML) models has recently received significant attention due to its ability to reduce ambiguities about why the models make specific decisions. Extensive efforts have been invested to address explainability for different data types, particularly images. However, the work on multivariate time series data is limited. A possible reason is that the conflation of time and other variables in time series data can cause the generated explanations to be incomprehensible to humans. In addition, some efforts on time series fall short of providing accurate explanations as they either ignore a context in the time domain or impose differentiability requirements on the ML models. Such restrictions impede their ability to provide valid explanations in real-world applications and non-differentiable ML settings. In this paper, we propose a swapping--sliding decision explanation for multivariate time series classifiers, called SSET. The proposal consists of swapping and sliding stages, by which salient sub-sequences causing significant drops in the prediction score are presented as explanations. In the former stage, the important variables are detected by swapping the series of interest with close train data from target classes. In the latter stage, the salient observations of these variables are explored by sliding a window over each time step. Additionally, the model measures the importance of different variables over time in a novel way characterized by multiple factors. We leverage SSET on affect detection domain where evaluations are performed on two real-world physiological time series datasets, WESAD and MAHNOB-HCI, and a deep convolutional classifier, CN-Waterfall. This classifier has shown superior performance to prior models to detect human affective states. Comparing SSET with several benchmarks, including LIME, integrated gradients, and Dynamask, we found..

XAI-P-T: A Brief Review of Explainable Artificial Intelligence from Practice to Theory

Dec 17, 2020

Abstract:In this work, we report the practical and theoretical aspects of Explainable AI (XAI) identified in some fundamental literature. Although there is a vast body of work on representing the XAI backgrounds, most of the corpuses pinpoint a discrete direction of thoughts. Providing insights into literature in practice and theory concurrently is still a gap in this field. This is important as such connection facilitates a learning process for the early stage XAI researchers and give a bright stand for the experienced XAI scholars. Respectively, we first focus on the categories of black-box explanation and give a practical example. Later, we discuss how theoretically explanation has been grounded in the body of multidisciplinary fields. Finally, some directions of future works are presented.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge