Nasser L. Azad

Longitudinal Dynamic versus Kinematic Models for Car-following Control Using Deep Reinforcement Learning

May 07, 2019

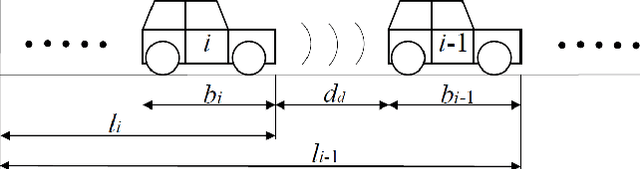

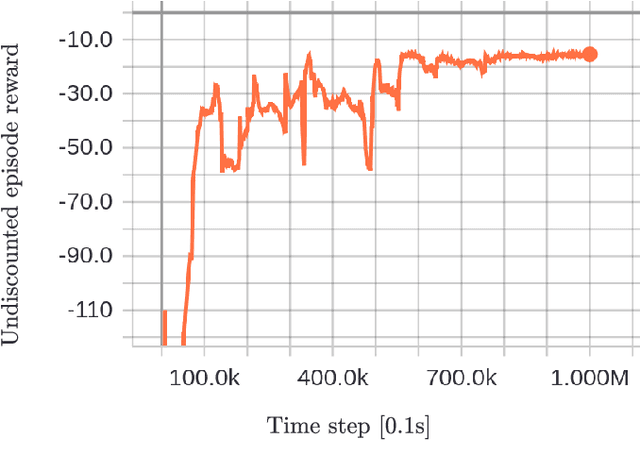

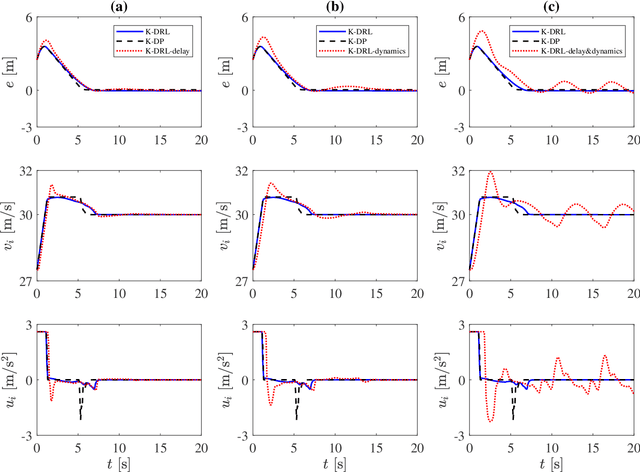

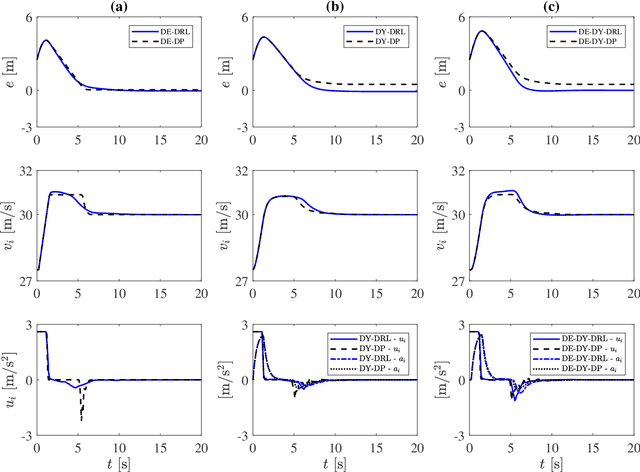

Abstract:The majority of current studies on autonomous vehicle control via deep reinforcement learning (DRL) utilize point-mass kinematic models, neglecting vehicle dynamics which includes acceleration delay and acceleration command dynamics. The acceleration delay, which results from sensing and actuation delays, results in delayed execution of the control inputs. The acceleration command dynamics dictates that the actual vehicle acceleration does not rise up to the desired command acceleration instantaneously due to friction and road grades. In this work, we investigate the feasibility of applying DRL controllers trained using vehicle kinematic models to more realistic driving control with vehicle dynamics. We consider a particular longitudinal car-following control, i.e., Adaptive Cruise Control, problem solved via DRL using a point-mass kinematic model. When such a controller is applied to car following with vehicle dynamics, we observe significantly degraded car-following performance. Therefore, we redesign the DRL framework to accommodate the acceleration delay and acceleration command dynamics by adding the delayed control inputs and the actual vehicle acceleration to the reinforcement learning environment state. The training results show that the redesigned DRL controller results in near-optimal control performance of car following with vehicle dynamics considered when compared with dynamic programming solutions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge