Nashwa El-Bendary

Scheduling and Communication Schemes for Decentralized Federated Learning

Nov 27, 2023

Abstract: Federated learning (FL) is a distributed machine learning paradigm in which a large number of clients coordinate with a central server to learn a model without sharing their own training data. However, a single central server can be insufficient because of connectivity problems between the server and the clients. In this paper, a decentralized federated learning (DFL) model based on the stochastic gradient descent (SGD) algorithm is introduced as a more scalable approach to improving learning performance in a network of agents with arbitrary topology. Three scheduling policies are proposed for DFL to govern communication between the clients and the parallel servers, and convergence, accuracy, and loss are evaluated in a fully decentralized implementation of SGD. The experimental results show that the proposed scheduling policies affect both the speed of convergence and the final global model.

Ant Colony based Feature Selection Heuristics for Retinal Vessel Segmentation

Mar 07, 2014

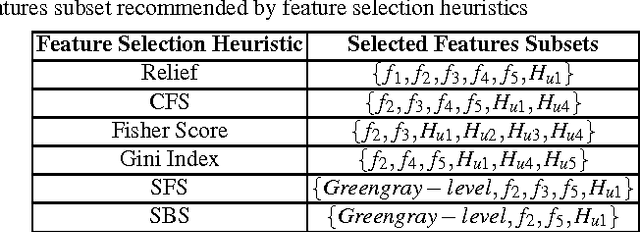

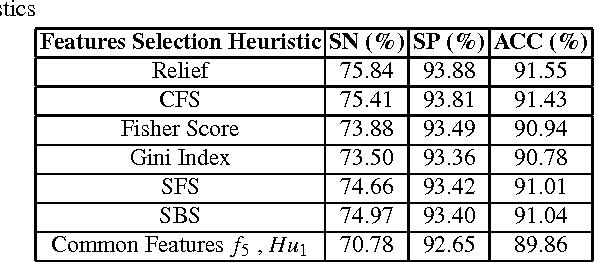

Abstract: Feature selection is an essential step for successful data classification, since it reduces data dimensionality by removing redundant features. This lowers classification complexity and time while also improving accuracy. In this article, a comparative study of six feature selection heuristics is conducted in order to select the most relevant feature subset. The tested feature vector consists of fourteen features computed for each pixel in the field of view of the retinal images in the DRIVE database. The comparison is assessed in terms of the sensitivity, specificity, and accuracy of the feature subset recommended by each heuristic when applied with the ant colony system. Experimental results indicate that the feature subset recommended by the relief heuristic outperformed the subsets recommended by the other tested heuristics.