Narek Davtyan

LoRAS: An oversampling approach for imbalanced datasets

Aug 23, 2019

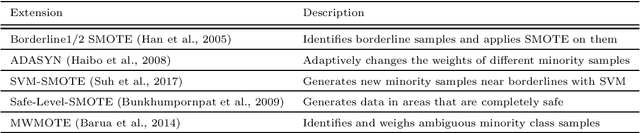

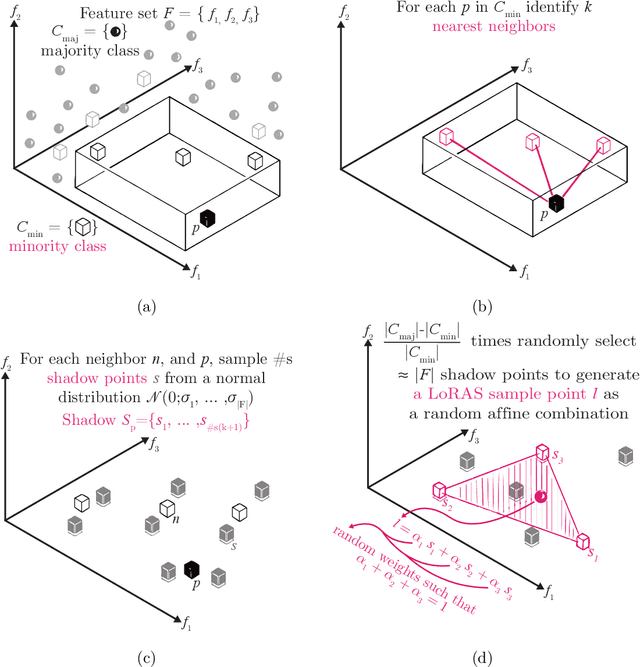

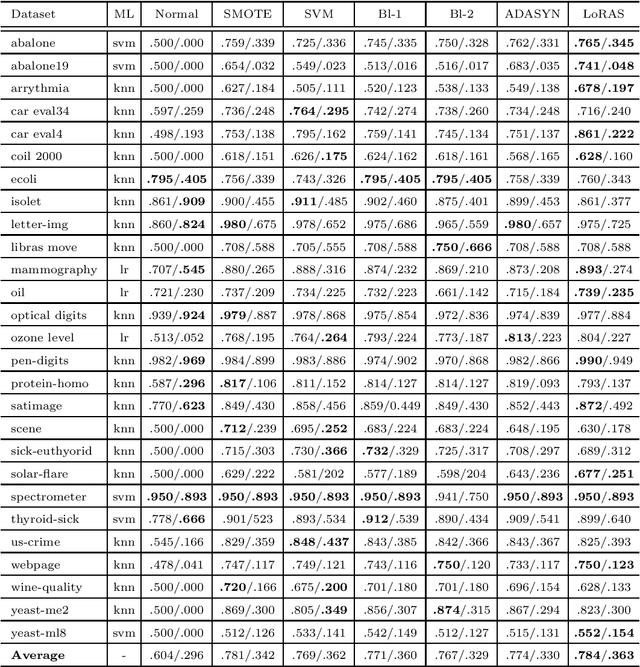

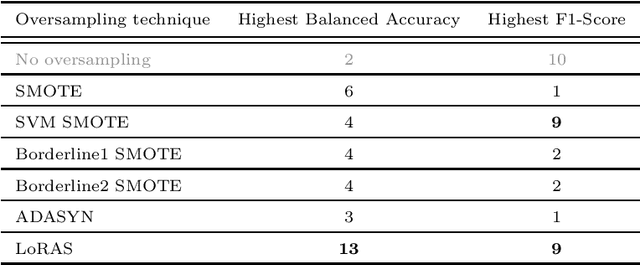

Abstract: The Synthetic Minority Oversampling TEchnique (SMOTE) is widely used for the analysis of imbalanced datasets. It is known that SMOTE frequently over-generalizes the minority class, leading to misclassifications for the majority class and affecting the overall balance of the model. In this article, we present an approach that overcomes this limitation of SMOTE, employing Localized Random Affine Shadowsampling (LoRAS) to oversample from an approximated data manifold of the minority class. We benchmarked our LoRAS algorithm on 28 publicly available datasets and show that drawing samples from an approximated data manifold of the minority class is the key to successful oversampling. We compared the performance of LoRAS, SMOTE, and several SMOTE extensions and observed that, for imbalanced datasets, LoRAS on average generates better Machine Learning (ML) models in terms of F1-score and Balanced Accuracy. Moreover, to explain the success of the algorithm, we have constructed a mathematical framework to prove that LoRAS is a more effective oversampling technique, since it provides a better estimate of the mean of the underlying local data distribution of the minority class data space.
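The idea named in the abstract can be illustrated with a minimal sketch. The abstract does not spell out the procedure, so everything below is an assumption made for illustration: the function name `loras_sketch`, all of its parameters and default values, the use of Gaussian noise to create "shadow" points around each minority neighbourhood, and the use of Dirichlet-distributed weights to form random convex (affine) combinations of those shadow points. It is not the authors' reference implementation.

```python
import numpy as np


def loras_sketch(X_min, k=5, n_shadow=10, n_affine=3, sigma=0.005,
                 n_per_point=4, seed=0):
    """Illustrative LoRAS-style oversampling of minority-class points.

    All parameter names and defaults are hypothetical choices for this sketch.
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = X_min.shape[0]
    k = min(k, n - 1)

    # Pairwise Euclidean distances between minority points.
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)

    synthetic = []
    for i in range(n):
        # Local neighbourhood: the point itself plus its k nearest neighbours.
        nbr_idx = np.argsort(dists[i])[: k + 1]
        nbrs = X_min[nbr_idx]

        # Shadow samples: small Gaussian perturbations of each neighbourhood point.
        shadows = np.repeat(nbrs, n_shadow, axis=0)
        shadows = shadows + rng.normal(0.0, sigma, size=shadows.shape)

        for _ in range(n_per_point):
            # Pick a few shadow samples and combine them with random
            # non-negative weights that sum to one.
            picked = rng.choice(len(shadows), size=n_affine, replace=False)
            weights = rng.dirichlet(np.ones(n_affine))
            synthetic.append(weights @ shadows[picked])

    return np.vstack(synthetic)
```

Calling `loras_sketch(X_min)` on an array of minority-class rows returns `n_per_point` synthetic rows per input row. Because each synthetic point is a convex combination of lightly perturbed neighbours, it stays inside the local neighbourhood rather than on a line segment between two points, which is the contrast with SMOTE that the abstract draws.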
