N. B. Puhan

Deep Convolutional Generative Adversarial Network Based Food Recognition Using Partially Labeled Data

Dec 26, 2018

Abstract: Traditional machine learning algorithms that use hand-crafted feature extraction techniques (such as local binary patterns) have limited accuracy in the food recognition task because of high variation among images of the same class (intra-class variation). In recent works, convolutional neural networks (CNNs) have been applied to this task with better results than all previously reported methods. However, they perform best when trained with a large amount of annotated (labeled) food images. Obtaining such annotations in large volume is problematic because the process is expensive, laborious, and impractical. Our work aims to develop an efficient deep CNN learning-based method for food recognition that alleviates these limitations by training generative adversarial networks (GANs) on partially labeled data. We make new enhancements to the unsupervised training architecture introduced by Goodfellow et al. (2014), which was originally aimed at generating new data by sampling a dataset. In this work, we modify deep convolutional GANs to make them robust and efficient for classifying food images. Experimental results on benchmark datasets show the superiority of our proposed method over current state-of-the-art methodologies, even when it is trained with partially labeled data.

Cross-spectral Periocular Recognition: A Survey

Dec 04, 2018

Abstract: Among biometrics such as face, iris, and fingerprint, the periocular region has an advantage because it is non-intrusive and strikes a balance between the iris or eye region (very stringent, small area) and the whole face region (very relaxed, large area). Research has shown that this region is not much affected by pose, aging, expression, facial changes, and other artifacts that would otherwise cause large variation. Active research has been carried out on this topic for the past few years because of its advantages over face and iris biometrics in unconstrained and uncooperative scenarios. Many researchers have explored periocular biometrics using both visible (VIS) and infra-red (IR) spectrum images. For a system to work 24/7 (as in surveillance scenarios), the registration process may depend on daytime VIS periocular images (or any mug shot image), while testing or recognition may occur at night with only IR periocular images. This gives rise to a challenging research problem called cross-spectral matching, in which VIS images are used for registration or as gallery images and IR images are used for testing or recognition, and vice versa. After more than two decades of intensive research on face and iris biometrics in the cross-spectral domain, a number of researchers have now focused their work on matching heterogeneous (cross-spectral) periocular images. Although a number of surveys cover existing periocular biometric research, no study has addressed its cross-spectral aspect. This paper analyses and reviews current state-of-the-art techniques in cross-spectral periocular recognition, including methodologies, databases, their protocols, and reported recognition performance.

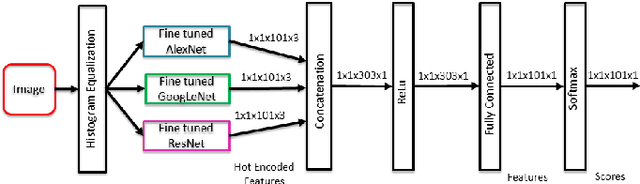

FoodNet: Recognizing Foods Using Ensemble of Deep Networks

Sep 27, 2017

Abstract: In this work, we propose a methodology for an automatic food classification system that recognizes the contents of a meal from images of the food. We developed a multi-layered deep convolutional neural network (CNN) architecture that takes advantage of the features from other deep networks and improves efficiency. Numerous classical handcrafted features and approaches are explored, among which CNN features are chosen as the best performing. Networks are trained and fine-tuned using preprocessed images, and the filter outputs are fused to achieve higher accuracy. Experimental results on the largest real-world food recognition database, ETH Food-101, and a newly contributed Indian food image database demonstrate the effectiveness of the proposed methodology compared with many other benchmark deep-learned CNN frameworks.
