Myrthe L. Tielman

"Even explanations will not help in trusting [this] fundamentally biased system": A Predictive Policing Case-Study

Apr 15, 2025

Abstract:In today's society, where Artificial Intelligence (AI) has gained a vital role, concerns regarding users' trust have garnered significant attention. The use of AI systems in high-risk domains has often led users to either under-trust them, potentially causing inadequate reliance, or over-trust them, resulting in over-compliance. Therefore, users must maintain an appropriate level of trust. Past research has indicated that explanations provided by AI systems can enhance user understanding of when to trust or not trust the system. However, the utility of presenting different forms of explanation remains to be explored, especially in high-risk domains. Therefore, this study explores the impact of different explanation types (text, visual, and hybrid) and user expertise (retired police officers and lay users) on establishing appropriate trust in AI-based predictive policing. While we observed that the hybrid form of explanations increased the subjective trust in AI for expert users, it did not lead to better decision-making. Furthermore, no form of explanations helped build appropriate trust. The findings of our study emphasize the importance of re-evaluating the use of explanations to build [appropriate] trust in AI-based systems, especially when the system's use is questionable. Finally, we synthesize potential challenges and policy recommendations based on our results to design for appropriate trust in high-risk AI-based systems.

A Systematic Review on Fostering Appropriate Trust in Human-AI Interaction

Nov 08, 2023

Abstract:Appropriate trust in Artificial Intelligence (AI) systems has rapidly become an important area of focus for both researchers and practitioners. Various approaches have been used to achieve it, such as confidence scores, explanations, trustworthiness cues, or uncertainty communication. However, a comprehensive understanding of the field is lacking due to the diversity of perspectives arising from various backgrounds that influence it and the lack of a single definition for appropriate trust. To investigate this topic, this paper presents a systematic review to identify current practices in building appropriate trust, different ways to measure it, types of tasks used, and potential challenges associated with it. We also propose a Belief, Intentions, and Actions (BIA) mapping to study commonalities and differences in the concepts related to appropriate trust by (a) describing the existing disagreements on defining appropriate trust, and (b) providing an overview of the concepts and definitions related to appropriate trust in AI from the existing literature. Finally, the challenges identified in studying appropriate trust are discussed, and observations are summarized as current trends, potential gaps, and research opportunities for future work. Overall, the paper provides insights into the complex concept of appropriate trust in human-AI interaction and presents research opportunities to advance our understanding of this topic.

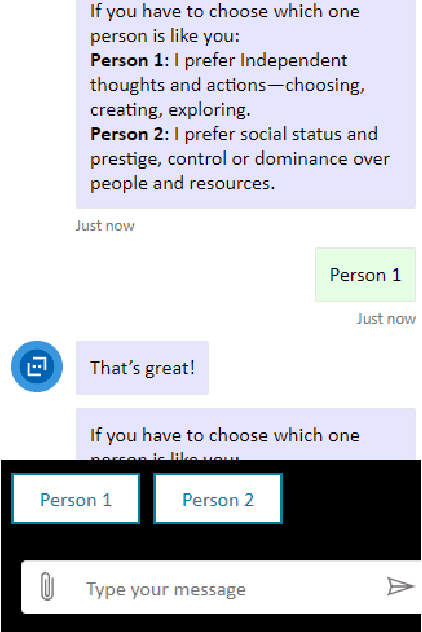

AI Alignment Dialogues: An Interactive Approach to AI Alignment in Support Agents

Jan 16, 2023

Abstract:AI alignment is about ensuring AI systems only pursue goals and activities that are beneficial to humans. Most current approaches to AI alignment learn what humans value from their behavioural data. This paper proposes a different way of looking at the notion of alignment, namely by introducing AI Alignment Dialogues: dialogues with which users and agents try to achieve and maintain alignment via interaction. We argue that alignment dialogues have a number of advantages in comparison to data-driven approaches, especially for behaviour support agents, which aim to support users in achieving their desired future behaviours rather than their current behaviours. The advantages of alignment dialogues include allowing the users to directly convey higher-level concepts to the agent, and making the agent more transparent and trustworthy. In this paper we outline the concept and high-level structure of alignment dialogues. Moreover, we conducted a qualitative focus group user study from which we developed a model that describes how alignment dialogues affect users, and created design suggestions for AI alignment dialogues. Through this we establish foundations for AI alignment dialogues and shed light on what requires further development and research.

Exploring Effectiveness of Explanations for Appropriate Trust: Lessons from Cognitive Psychology

Oct 05, 2022

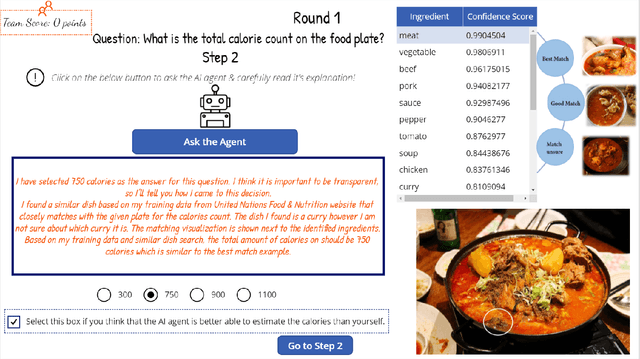

Abstract:The rapid development of Artificial Intelligence (AI) requires developers and designers of AI systems to focus on the collaboration between humans and machines. AI explanations of system behavior and reasoning are vital for effective collaboration by fostering appropriate trust, ensuring understanding, and addressing issues of fairness and bias. However, various contextual and subjective factors can influence the effectiveness of an AI system's explanations. This work draws inspiration from findings in cognitive psychology to understand how effective explanations can be designed. We identify four components to which explanation designers can pay special attention: perception, semantics, intent, and user & context. We illustrate the use of these four explanation components with an example of estimating food calories by combining text with visuals, probabilities with exemplars, and intent communication with both user and context in mind. We propose that the significant challenge for effective AI explanations is an additional step between explanation generation, which uses algorithms that do not produce interpretable explanations, and explanation communication. We believe this extra step will benefit from carefully considering the four explanation components outlined in our work, which can positively affect the explanation's effectiveness.

More Similar Values, More Trust? -- the Effect of Value Similarity on Trust in Human-Agent Interaction

May 19, 2021

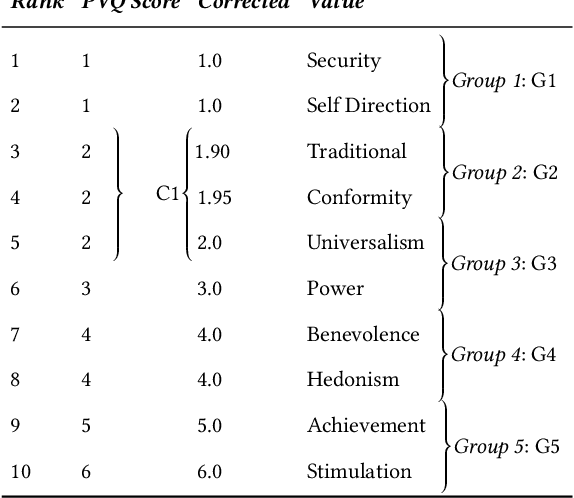

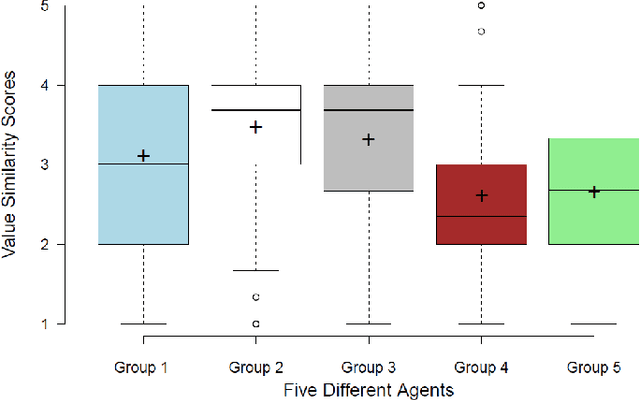

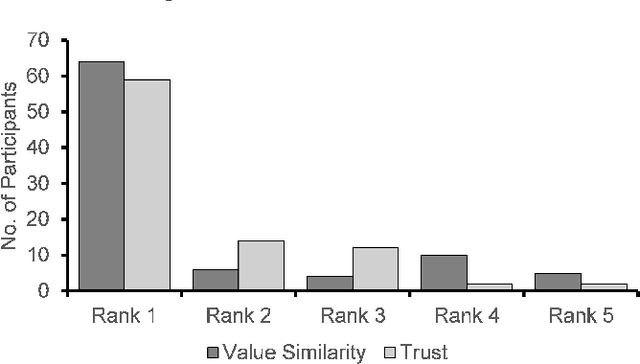

Abstract:As AI systems are increasingly involved in decision making, it also becomes important that they elicit appropriate levels of trust from their users. To achieve this, it is first important to understand which factors influence trust in AI. We identify a research gap regarding the role of personal values in trust in AI. Therefore, this paper studies how human and agent Value Similarity (VS) influences a human's trust in that agent. To explore this, 89 participants teamed up with five different agents, which were designed with varying levels of value similarity to that of the participants. In a within-subjects, scenario-based experiment, agents gave suggestions on what to do when entering a building to save a hostage. We analyzed the agents' scores on subjective value similarity, trust and qualitative data from open-ended questions. Our results show that agents rated as having more similar values also scored higher on trust, indicating a positive relationship between the two. With this result, we add to the existing understanding of human-agent trust by providing insight into the role of value similarity.

* 4th AAAI/ACM Conference on AI, Ethics, and Society
