Muhammad Yaseen

What is YOLOv9: An In-Depth Exploration of the Internal Features of the Next-Generation Object Detector

Sep 12, 2024

Abstract: This study provides a comprehensive analysis of the YOLOv9 object detection model, focusing on its architectural innovations, training methodologies, and performance improvements over its predecessors. Key advancements, such as the Generalized Efficient Layer Aggregation Network (GELAN) and Programmable Gradient Information (PGI), significantly enhance feature extraction and gradient flow, leading to improved accuracy and efficiency. By incorporating Depthwise Convolutions and the lightweight C3Ghost architecture, YOLOv9 reduces computational complexity while maintaining high precision. Benchmark tests on Microsoft COCO demonstrate its superior mean Average Precision (mAP) and faster inference times, outperforming YOLOv8 across multiple metrics. The model's versatility is highlighted by its seamless deployment across various hardware platforms, from edge devices to high-performance GPUs, with built-in support for PyTorch and TensorRT integration. This paper provides the first in-depth exploration of YOLOv9's internal features and their real-world applicability, establishing it as a state-of-the-art solution for real-time object detection across industries, from IoT devices to large-scale industrial applications.
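To make the complexity claim about depthwise convolutions concrete, the sketch below counts weights for a standard convolution and for a depthwise convolution followed by a 1 x 1 pointwise convolution. The pairing with a pointwise layer, the function names, and the channel sizes are illustrative assumptions; they are not taken from the paper and do not describe YOLOv9's actual layer configuration.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes chosen only for illustration.
standard = conv_params(c_in=128, c_out=256, k=3)
separable = depthwise_separable_params(c_in=128, c_out=256, k=3)
ratio = separable / standard

print(standard, separable, round(ratio, 3))
```

For these assumed sizes the separable form needs roughly a ninth of the weights, which is the kind of saving the abstract alludes to.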

What is YOLOv8: An In-Depth Exploration of the Internal Features of the Next-Generation Object Detector

Aug 28, 2024

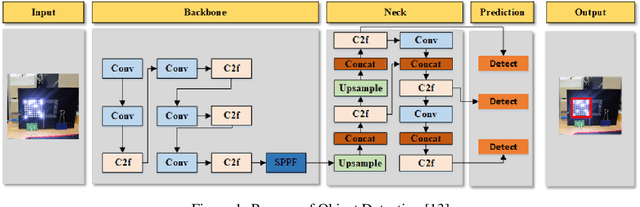

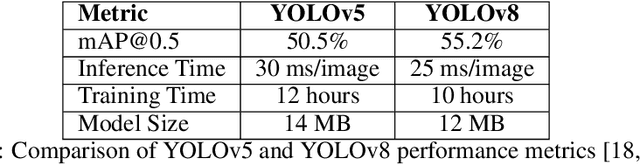

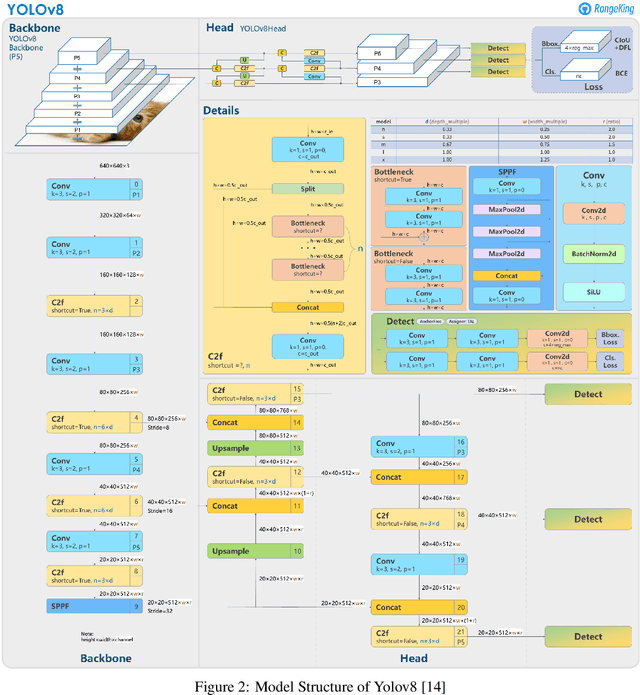

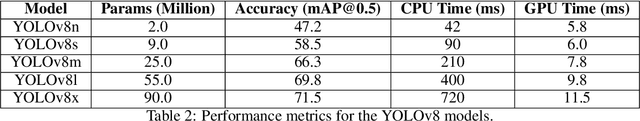

Abstract: This study presents a detailed analysis of the YOLOv8 object detection model, focusing on its architecture, training techniques, and performance improvements over previous iterations like YOLOv5. Key innovations, including the CSPNet backbone for enhanced feature extraction, the FPN+PAN neck for superior multi-scale object detection, and the transition to an anchor-free approach, are thoroughly examined. The paper reviews YOLOv8's performance across benchmarks like Microsoft COCO and Roboflow 100, highlighting its high accuracy and real-time capabilities across diverse hardware platforms. Additionally, the study explores YOLOv8's developer-friendly enhancements, such as its unified Python package and CLI, which streamline model training and deployment. Overall, this research positions YOLOv8 as a state-of-the-art solution in the evolving object detection field.
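As a rough illustration of what "anchor-free" means, the sketch below decodes a box from distances predicted at a grid cell center instead of from offsets to predefined anchor boxes. This is a generic distance-based decoding under stated assumptions, not YOLOv8's actual detection head; `decode_box`, its parameters, and the sample values are hypothetical.

```python
def decode_box(col, row, stride, distances):
    """Convert (left, top, right, bottom) distances, given in grid units,
    into a pixel-space box (x1, y1, x2, y2) around the cell center."""
    cx = (col + 0.5) * stride   # cell center in pixels
    cy = (row + 0.5) * stride
    left, top, right, bottom = distances
    return (
        cx - left * stride,
        cy - top * stride,
        cx + right * stride,
        cy + bottom * stride,
    )


# Hypothetical prediction at grid cell (col=4, row=2) on a stride-8 feature map.
box = decode_box(col=4, row=2, stride=8, distances=(1.5, 1.0, 2.5, 3.0))
print(box)
```

No anchor shapes appear anywhere in the decoding: the box is determined by the cell location and four predicted distances.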

Preventing Clean Label Poisoning using Gaussian Mixture Loss

Feb 10, 2020

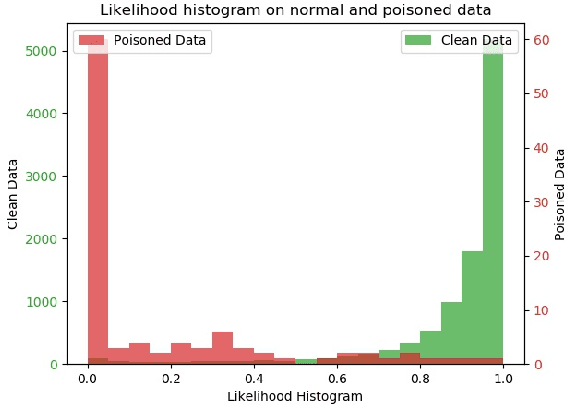

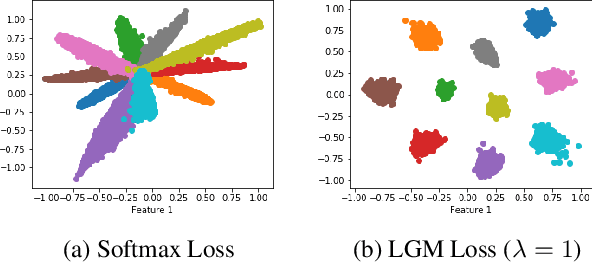

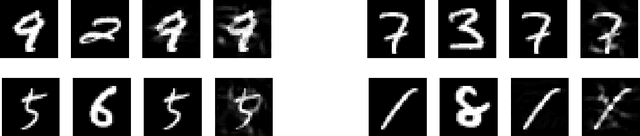

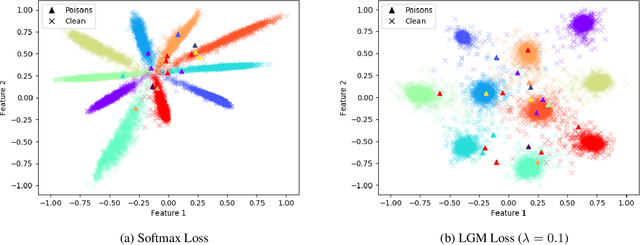

Abstract: Since 2014, when Szegedy et al. showed that carefully designed perturbations of an input can lead Deep Neural Networks (DNNs) to misclassify it, there has been ongoing research into making DNNs more robust to such malicious perturbations. In this work, we consider a poisoning attack called the Clean Labeling poisoning attack (CLPA). The goal of CLPA is to inject seemingly benign instances that drastically change the decision boundary of the DNN, so that subsequent queries at test time are misclassified. We argue that a strong defense against CLPA can be embedded into the model during training by constraining the features in the penultimate layer of the network to follow a Large Margin Gaussian Mixture (LGM) distribution. With such prior knowledge, we can systematically evaluate how unusual an example is, given the label it claims to have. We demonstrate our built-in defense via experiments on the MNIST and CIFAR datasets. We train two models on each dataset: one with softmax and another with LGM. We show that using LGM can substantially reduce the effectiveness of CLPA with no additional data sanitization overhead. The code to reproduce our results is available online.
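The idea of scoring how unusual an example is for its claimed label can be sketched as follows. The sketch assumes each class is a Gaussian with identity covariance, so the negative log-likelihood under the claimed class is, up to a constant, half the squared distance to that class mean. The class means, the threshold, and the function names are hypothetical, and the paper's actual scoring rule may differ.

```python
def squared_distance(x, mean):
    """Squared Euclidean distance between a feature vector and a class mean."""
    return sum((a - b) ** 2 for a, b in zip(x, mean))


def is_suspicious(feature, claimed_label, class_means, threshold):
    """Flag an example whose penultimate-layer feature lies far from the mean
    of the class it claims to belong to (identity covariance assumed)."""
    return squared_distance(feature, class_means[claimed_label]) > threshold


# Hypothetical 2-D class means and threshold, for illustration only.
class_means = {0: (0.0, 0.0), 1: (4.0, 4.0)}

clean = is_suspicious((0.5, -0.5), 0, class_means, threshold=4.0)
poison = is_suspicious((3.5, 4.0), 0, class_means, threshold=4.0)
print(clean, poison)
```

The second example sits next to the class 1 mean while claiming label 0, which is the pattern a clean-label poison is expected to show in feature space.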
