Morgane Dumont

Comparison of Discrete Choice Models and Artificial Neural Networks in Presence of Missing Variables

Nov 06, 2018

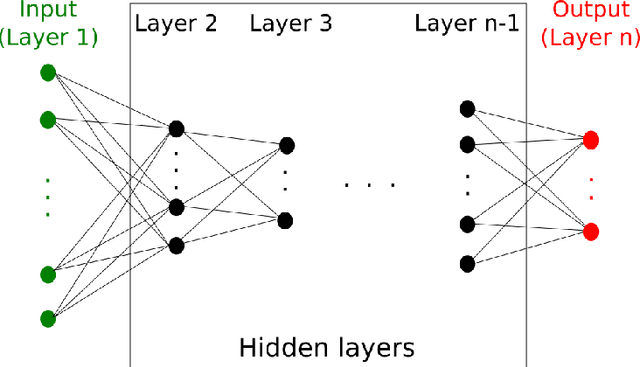

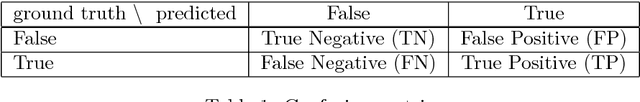

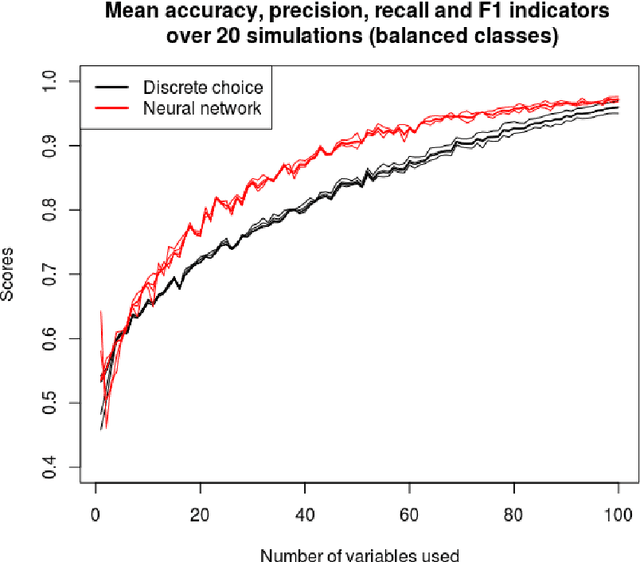

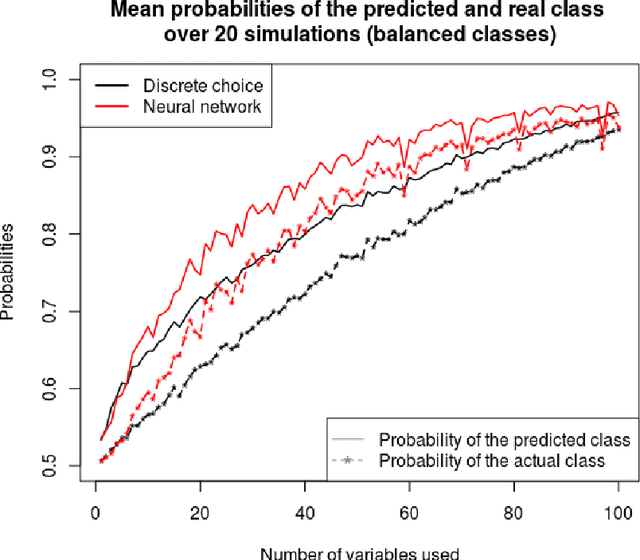

Abstract:Classification, the process of assigning a label (or class) to an observation given its features, is a common task in many applications. Nonetheless in most real-life applications, the labels can not be fully explained by the observed features. Indeed there can be many factors hidden to the modellers. The unexplained variation is then treated as some random noise which is handled differently depending on the method retained by the practitioner. This work focuses on two simple and widely used supervised classification algorithms: discrete choice models and artificial neural networks in the context of binary classification. Through various numerical experiments involving continuous or discrete explanatory features, we present a comparison of the retained methods' performance in presence of missing variables. The impact of the distribution of the two classes in the training data is also investigated. The outcomes of those experiments highlight the fact that artificial neural networks outperforms the discrete choice models, except when the distribution of the classes in the training data is highly unbalanced. Finally, this work provides some guidelines for choosing the right classifier with respect to the training data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge