Mohammad Shiri

Supervised Contrastive Vision Transformer for Breast Histopathological Image Classification

Apr 18, 2024

Abstract: Invasive ductal carcinoma (IDC) is the most prevalent form of breast cancer. Histopathological examination of breast tissue is critical for diagnosing and classifying breast cancer. Although existing methods have shown promising results, there is still room to improve the accuracy and generalization of IDC classification from histopathology images. We present a novel approach, the Supervised Contrastive Vision Transformer (SupCon-ViT), which improves IDC classification in terms of both accuracy and generalization by combining the strengths of transfer learning (a pre-trained vision transformer) and supervised contrastive learning. Our results on a benchmark breast cancer dataset show that SupCon-ViT achieves state-of-the-art performance in IDC classification, with an F1-score of 0.8188, a precision of 0.7692, and a specificity of 0.8971, outperforming existing methods. In addition, the proposed model remains robust when little labeled data is available, making it well suited to real-world clinical settings where labeled data is limited. Our findings suggest that combining supervised contrastive learning with pre-trained vision transformers is a viable strategy for accurate IDC classification, paving the way for more efficient and reliable diagnosis of breast cancer through histopathological image analysis.
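To illustrate what "supervised contrastive learning" refers to, the following is a minimal NumPy sketch of the supervised contrastive (SupCon) loss introduced by Khosla et al. (2020). It is not the SupCon-ViT training code. The function name `supcon_loss`, the default temperature of 0.1, and the choice to average only over anchors that have at least one positive are assumptions made for illustration. In a setup like the one described above, `features` would be embeddings from the pre-trained vision transformer and `labels` the IDC / non-IDC classes.

```python
import numpy as np


def supcon_loss(features: np.ndarray, labels: np.ndarray, temperature: float = 0.1) -> float:
    """Sketch of the supervised contrastive loss (log-probabilities averaged over positives).

    Hypothetical helper: names and defaults are illustrative assumptions.
    """
    # L2-normalize so that dot products are cosine similarities
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    n = z.shape[0]
    logits = (z @ z.T) / temperature
    # An anchor is never contrasted with itself
    self_mask = np.eye(n, dtype=bool)
    logits = np.where(self_mask, -np.inf, logits)
    # Numerically stable log-softmax over all other samples in the batch
    row_max = logits.max(axis=1, keepdims=True)
    log_denom = row_max + np.log(np.exp(logits - row_max).sum(axis=1, keepdims=True))
    log_prob = logits - log_denom
    # Positives: other samples that share the anchor's label
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    pos_counts = pos_mask.sum(axis=1)
    valid = pos_counts > 0
    if not valid.any():
        raise ValueError("batch needs at least two samples sharing a label")
    # Mean log-probability of the positives, per anchor that has any positive
    sum_log_prob_pos = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    mean_log_prob_pos = sum_log_prob_pos[valid] / pos_counts[valid]
    return float(-mean_log_prob_pos.mean())
```

The loss pulls same-class embeddings together and pushes other classes apart. Embeddings that already cluster by label therefore score lower than the same embeddings paired with mismatched labels.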

Highly Scalable Task Grouping for Deep Multi-Task Learning in Prediction of Epigenetic Events

Sep 24, 2022

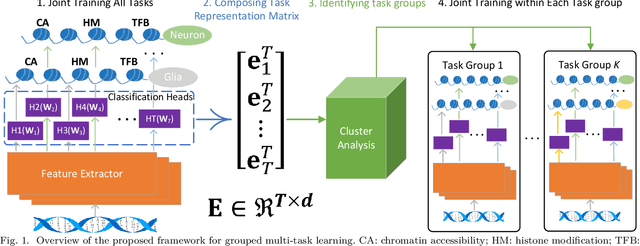

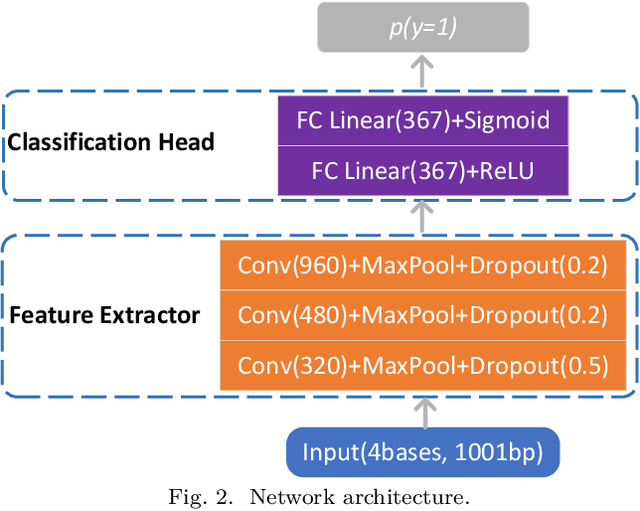

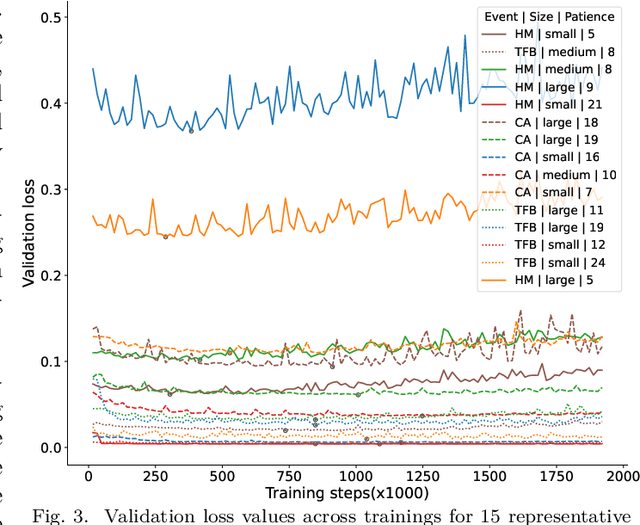

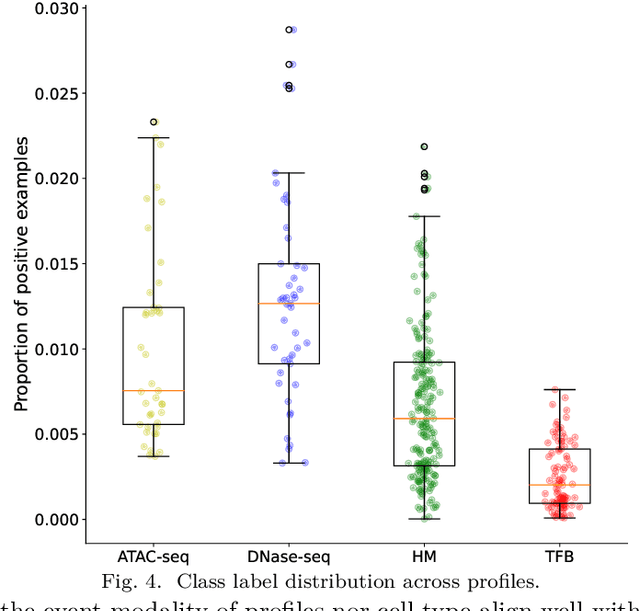

Abstract: Deep neural networks trained to predict cellular events from DNA sequence have emerged as tools to help elucidate the biological mechanisms underlying the associations identified in genome-wide association studies. To enhance training, previous works have commonly used multi-task learning (MTL) when networks are needed for multiple profiles that differ in either event modality or cell type. All existing works adopted a simple MTL framework in which all tasks share a single feature extraction network. Although effective to a certain extent, this strategy leads to substantial negative transfer: for a large portion of tasks, models obtained through MTL perform worse than those obtained by single-task learning. Methods have been developed to address negative transfer in other domains, such as computer vision, but they are generally difficult to scale to a large number of tasks. In this paper, we propose a highly scalable task grouping framework that addresses negative transfer by jointly training only tasks that are potentially beneficial to each other. The proposed method exploits the weights of the task-specific classification heads, which can be obtained cheaply from a single joint training run over all tasks. Our results on a dataset of 367 epigenetic profiles demonstrate the effectiveness of the proposed approach and its superiority over baseline methods.
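The abstract does not specify how the head weights are turned into task groups. The following is a purely hypothetical illustration of the general idea, not the paper's method. Each task's classification-head weight vector, taken from one joint training run, is treated as a task descriptor. Tasks whose vectors have a cosine similarity above an assumed `threshold` are linked, and the connected components become the groups that would be trained jointly. The function name, the cosine-similarity criterion, and the threshold value are all assumptions.

```python
import numpy as np


def group_tasks_by_head_weights(head_weights: np.ndarray, threshold: float = 0.5) -> list[list[int]]:
    """Hypothetical task grouping from per-task head weights (one row per task).

    Tasks are linked when the cosine similarity of their head vectors exceeds
    `threshold`; the connected components of that graph are returned as groups.
    """
    w = head_weights / np.linalg.norm(head_weights, axis=1, keepdims=True)
    sim = w @ w.T
    n = sim.shape[0]
    parent = list(range(n))

    def find(i: int) -> int:
        # Union-find lookup with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Merge every pair of sufficiently similar tasks
    for i in range(n):
        for j in range(i + 1, n):
            if sim[i, j] > threshold:
                parent[find(i)] = find(j)

    # Collect task indices by their component root
    groups: dict[int, list[int]] = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


if __name__ == "__main__":
    toy = np.array([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0],
                    [0.0, 0.95, 0.05], [0.0, 0.0, 1.0]])
    print(group_tasks_by_head_weights(toy, threshold=0.8))  # [[0, 1], [2, 3], [4]]
```

Connected components amount to single-linkage clustering, so a chain of moderately similar tasks can merge into one large group. A real pipeline might instead use a clustering method that controls group size. The pairwise loop is quadratic in the number of tasks, which is acceptable for a few hundred profiles such as the 367 mentioned above.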
