Mohamed Shahawy

HiveNAS: Neural Architecture Search using Artificial Bee Colony Optimization

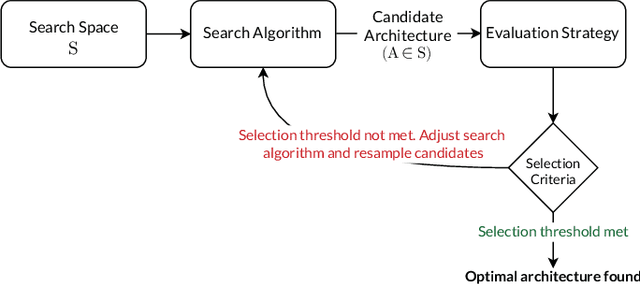

Nov 18, 2022

Abstract: The traditional Neural Network-development process requires substantial expert knowledge and relies heavily on intuition and trial-and-error. Neural Architecture Search (NAS) frameworks were introduced to robustly search for network topologies, as well as facilitate the automated development of Neural Networks. While some optimization approaches -- such as Genetic Algorithms -- have been extensively explored in the NAS context, other Metaheuristic Optimization algorithms have not yet been evaluated. In this paper, we propose HiveNAS, the first Artificial Bee Colony-based NAS framework.

A Review on Plastic Artificial Neural Networks: Exploring the Intersection between Neural Architecture Search and Continual Learning

Jun 11, 2022

Abstract: Despite the significant advances achieved in Artificial Neural Networks (ANNs), their design process remains notoriously tedious, depending primarily on intuition, experience, and trial-and-error. This human-dependent process is often time-consuming and prone to errors. Furthermore, the models are generally bound to their training contexts, with no consideration of changes to their surrounding environments. Continual adaptability and automation of neural networks are of paramount importance to several domains where model accessibility is limited after deployment (e.g., IoT devices, self-driving vehicles, etc.). Additionally, even accessible models require frequent maintenance post-deployment to overcome issues such as Concept/Data Drift, which can be cumbersome and restrictive. Research on adaptive ANNs is still at an early stage; nevertheless, Neural Architecture Search (NAS), a form of AutoML, and Continual Learning (CL) have recently gained increasing momentum in the Deep Learning research field, aiming to provide more robust and adaptive ANN development frameworks. This study is the first extensive review on the intersection between AutoML and CL, outlining research directions for the different methods that can facilitate full automation and lifelong plasticity in ANNs.
