Mohamed Lechiakh

Auditing health-related recommendations in social media: A Case Study of Abortion on YouTube

Apr 11, 2024

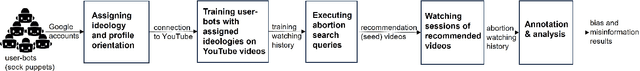

Abstract: Recommender systems (RS) used by social media platforms such as YouTube significantly shape our information consumption across various domains, especially healthcare. Algorithmic auditing is therefore crucial for uncovering their potential bias and misinformation, particularly on controversial topics like abortion. We introduce a simple yet effective sock puppet auditing approach to investigate how YouTube recommends abortion-related videos to individuals with different backgrounds. Our framework allows for efficient auditing of RS, regardless of the complexity of the underlying algorithms.

FEBR: Expert-Based Recommendation Framework for beneficial and personalized content

Jul 17, 2021

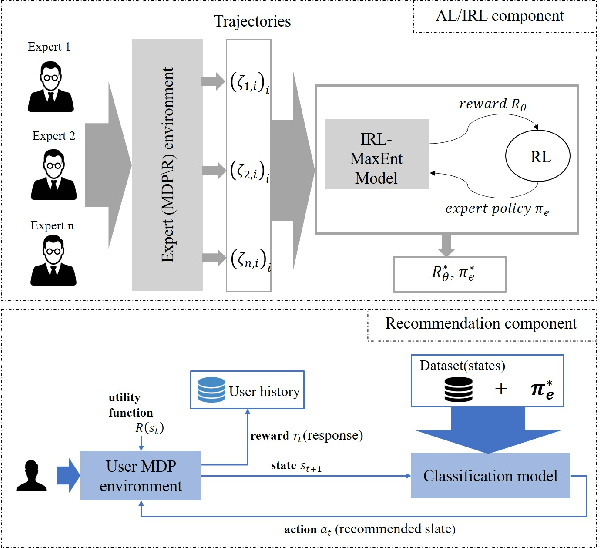

Abstract: So far, most research on recommender systems has focused on maintaining long-term user engagement and satisfaction by promoting relevant and personalized content. However, it is still very challenging to evaluate the quality and reliability of this content. In this paper, we propose FEBR (Expert-Based Recommendation Framework), an apprenticeship learning framework to assess the quality of the recommended content on online platforms. The framework exploits the demonstrated trajectories of an expert (assumed to be reliable) in a recommendation evaluation environment to recover an unknown utility function. This function is used to learn an optimal policy describing the expert's behavior, which is then used in the framework to provide high-quality and personalized recommendations. We evaluate the performance of our solution through a user interest simulation environment (using RecSim). We simulate interactions under the aforementioned expert policy for video recommendation and compare its efficiency with standard recommendation methods. The results show that our approach provides a significant gain in the quality of the content watched by users, as evaluated by experts, while maintaining almost the same watch time as the baseline approaches.
