Minda Li

Evaluating ChatGPT-3.5 Efficiency in Solving Coding Problems of Different Complexity Levels: An Empirical Analysis

Nov 12, 2024

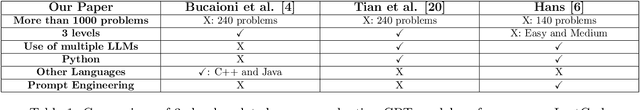

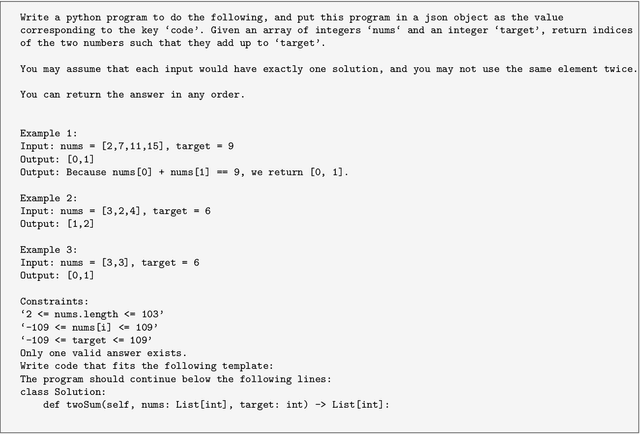

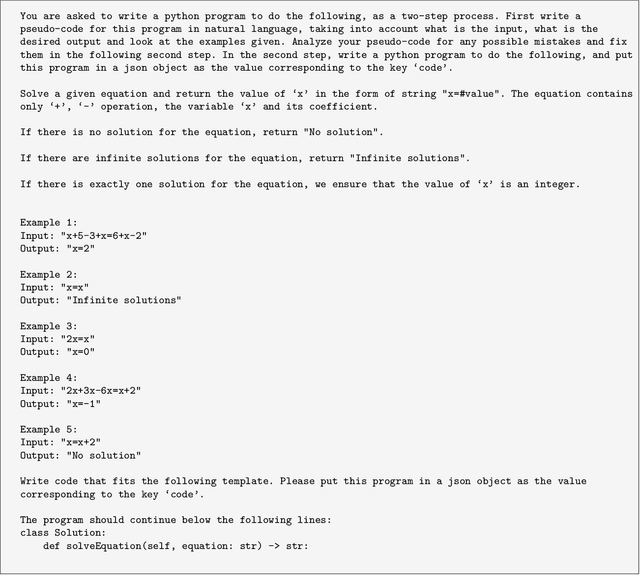

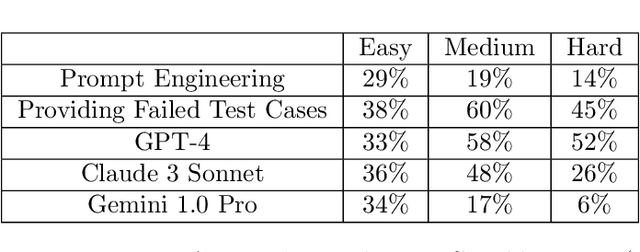

Abstract:ChatGPT and other large language models (LLMs) promise to revolutionize software development by automatically generating code from program specifications. We assess the performance of ChatGPT's GPT-3.5-turbo model on LeetCode, a popular platform with algorithmic coding challenges for technical interview practice, across three difficulty levels: easy, medium, and hard. We test three main hypotheses. First, ChatGPT solves fewer problems as difficulty rises (Hypothesis 1). Second, prompt engineering improves ChatGPT's performance, with greater gains on easier problems and diminishing returns on harder ones (Hypothesis 2). Third, ChatGPT performs better in popular languages like Python, Java, and C++ than in less common ones like Elixir, Erlang, and Racket (Hypothesis 3). To investigate these hypotheses, we conduct automated experiments using Python scripts to generate prompts that instruct ChatGPT to create Python solutions. These solutions are stored and manually submitted on LeetCode to check their correctness. For Hypothesis 1, results show the GPT-3.5-turbo model successfully solves 92% of easy, 79% of medium, and 51% of hard problems. For Hypothesis 2, prompt engineering yields improvements: 14-29% for Chain of Thought Prompting, 38-60% by providing failed test cases in a second feedback prompt, and 33-58% by switching to GPT-4. From a random subset of problems ChatGPT solved in Python, it also solved 78% in Java, 50% in C++, and none in Elixir, Erlang, or Racket. These findings generally validate all three hypotheses.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge