Michael Strecke

Physics-Based Rigid Body Object Tracking and Friction Filtering From RGB-D Videos

Sep 27, 2023

Abstract: Physics-based understanding of object interactions from sensory observations is an essential capability in augmented reality and robotics. It enables capturing the properties of a scene for simulation and control. In this paper, we propose a novel approach for real-to-sim which tracks rigid objects in 3D from RGB-D images and infers physical properties of the objects. We use a differentiable physics simulation as state-transition model in an Extended Kalman Filter which can model contact and friction for arbitrary mesh-based shapes and in this way estimate physically plausible trajectories. We demonstrate that our approach can filter position, orientation, velocities, and concurrently can estimate the coefficient of friction of the objects. We analyse our approach on various sliding scenarios in synthetic image sequences of single objects and colliding objects. We also demonstrate and evaluate our approach on a real-world dataset. We will make our novel benchmark datasets publicly available to foster future research in this novel problem setting and comparison with our method.

DiffSDFSim: Differentiable Rigid-Body Dynamics With Implicit Shapes

Nov 30, 2021

Abstract: Differentiable physics is a powerful tool in computer vision and robotics for scene understanding and reasoning about interactions. Existing approaches have frequently been limited to objects with simple shape or shapes that are known in advance. In this paper, we propose a novel approach to differentiable physics with frictional contacts which represents object shapes implicitly using signed distance fields (SDFs). Our simulation supports contact point calculation even when the involved shapes are nonconvex. Moreover, we propose ways for differentiating the dynamics for the object shape to facilitate shape optimization using gradient-based methods. In our experiments, we demonstrate that our approach allows for model-based inference of physical parameters such as friction coefficients, mass, forces or shape parameters from trajectory and depth image observations in several challenging synthetic scenarios and a real image sequence.

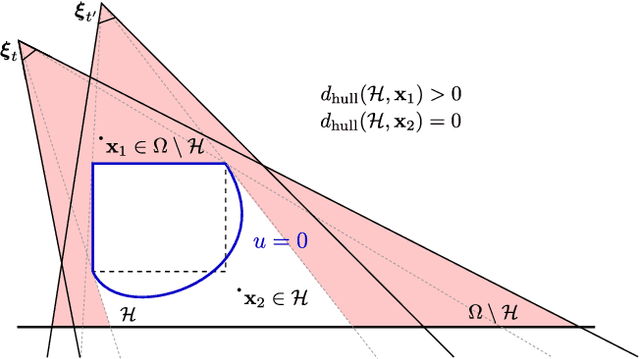

Where Does It End? -- Reasoning About Hidden Surfaces by Object Intersection Constraints

Apr 12, 2020

Abstract: Dynamic scene understanding is an essential capability in robotics and VR/AR. In this paper we propose Co-Section, an optimization-based approach to 3D dynamic scene reconstruction, which infers hidden shape information from intersection constraints. An object-level dynamic SLAM frontend detects, segments, tracks and maps dynamic objects in the scene. Our optimization backend completes the shapes using hull and intersection constraints between the objects. In experiments, we demonstrate our approach on real and synthetic dynamic scene datasets. We also assess the shape completion performance of our method quantitatively. To the best of our knowledge, our approach is the first method to incorporate such physical plausibility constraints on object intersections for shape completion of dynamic objects in an energy minimization framework.

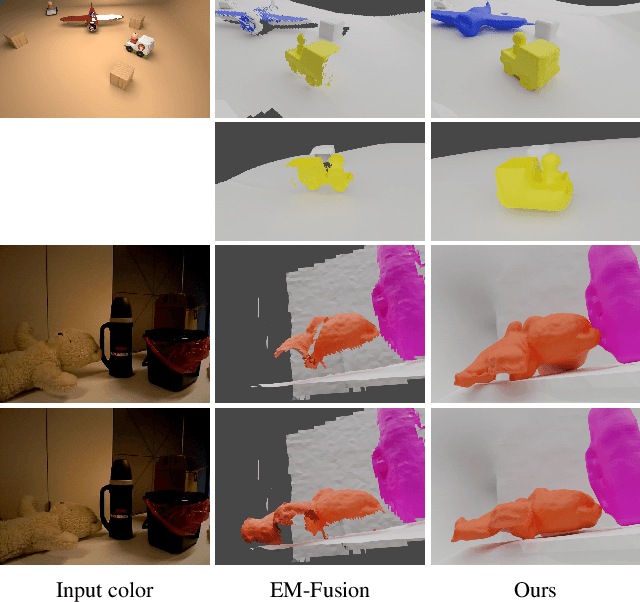

EM-Fusion: Dynamic Object-Level SLAM with Probabilistic Data Association

Apr 26, 2019

Abstract: The majority of approaches for acquiring dense 3D environment maps with RGB-D cameras assumes static environments or rejects moving objects as outliers. The representation and tracking of moving objects, however, has significant potential for applications in robotics or augmented reality. In this paper, we propose a novel approach to dynamic SLAM with dense object-level representations. We represent rigid objects in local volumetric signed distance function (SDF) maps, and formulate multi-object tracking as direct alignment of RGB-D images with the SDF representations. Our main novelty is a probabilistic formulation which naturally leads to strategies for data association and occlusion handling. We analyze our approach in experiments and demonstrate that our approach compares favorably with the state-of-the-art methods in terms of robustness and accuracy.
