Michael McGuire

Automatic Speech Recognition for Non-Native English: Accuracy and Disfluency Handling

Mar 10, 2025

Abstract: Automatic speech recognition (ASR) has been an essential component of computer-assisted language learning (CALL) and computer-assisted language testing (CALT) for many years. As this technology continues to develop rapidly, it is important to evaluate the accuracy of current ASR systems for language learning applications. This study assesses five cutting-edge ASR systems' recognition of non-native accented English speech using recordings from the L2-ARCTIC corpus, featuring speakers from six different L1 backgrounds (Arabic, Chinese, Hindi, Korean, Spanish, and Vietnamese), in the form of both read and spontaneous speech. The read speech consisted of 2,400 single-sentence recordings from 24 speakers, while the spontaneous speech included narrative recordings from 22 speakers. Results showed that for read speech, Whisper and AssemblyAI achieved the best accuracy with mean Match Error Rates (MER) of 0.054 and 0.056 respectively, approaching human-level accuracy. For spontaneous speech, RevAI performed best with a mean MER of 0.063. The study also examined how each system handled disfluencies such as filler words, repetitions, and revisions, finding significant variation in performance across systems and disfluency types. While processing speed varied considerably between systems, longer processing times did not necessarily correlate with better accuracy. By detailing the performance of several of the most recent, widely available ASR systems on non-native English speech, this study aims to help language instructors and researchers understand the strengths and weaknesses of each system and identify which may be suitable for specific use cases.

Lessons from Deploying CropFollow++: Under-Canopy Agricultural Navigation with Keypoints

Apr 26, 2024

Abstract: We present a vision-based navigation system for under-canopy agricultural robots using semantic keypoints. Autonomous under-canopy navigation is challenging due to the tight spacing between the crop rows ($\sim 0.75$ m), degradation in RTK-GPS accuracy due to multipath error, and noise in LiDAR measurements from the excessive clutter. Our system, CropFollow++, introduces a modular and interpretable perception architecture with a learned semantic keypoint representation. We deployed CropFollow++ on multiple under-canopy cover crop planting robots at a large scale (25 km in total) in various field conditions, and we discuss the key lessons learned from these deployments.

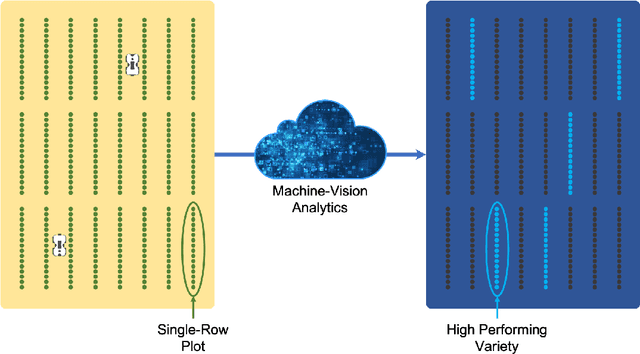

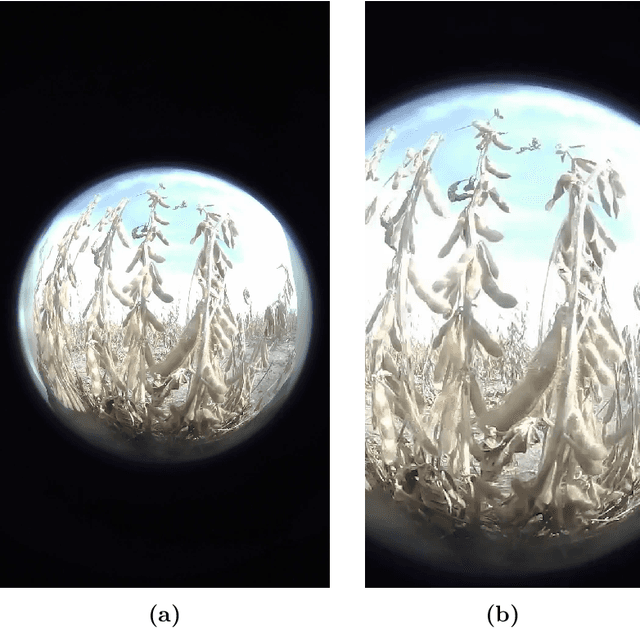

High Throughput Soybean Pod-Counting with In-Field Robotic Data Collection and Machine-Vision Based Data Analysis

May 27, 2021

Abstract: We report promising results for high-throughput on-field soybean pod counting with small mobile robots and machine-vision algorithms. Our results show that the machine-vision based soybean pod counts are strongly correlated with soybean yield. While pod count has a strong correlation with soybean yield, pod counting is extremely labor intensive and has been difficult to automate. Our results establish that an autonomous robot equipped with vision sensors can autonomously collect soybean data at maturity. Machine-vision algorithms can be used to estimate pod counts across a large diversity panel planted across experimental units (EUs, or plots) in a high-throughput, automated manner. We report a correlation of 0.67 between our automated pod counts and soybean yield. The data was collected in an experiment consisting of 1463 single-row plots maintained by the University of Illinois soybean breeding program during the 2020 growing season. We also report a correlation of 0.88 between automated pod counts and manual pod counts over a smaller data set of 16 plots.
