Menoua Keshishian

DeepSpeech models show Human-like Performance and Processing of Cochlear Implant Inputs

Jul 30, 2024

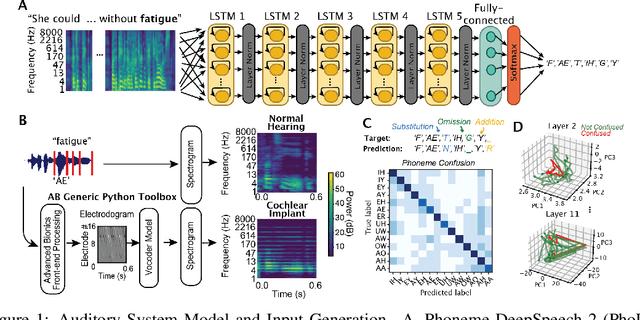

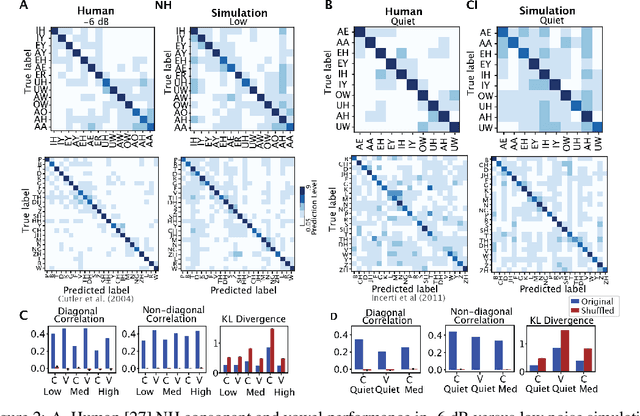

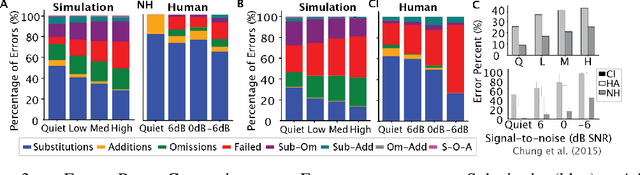

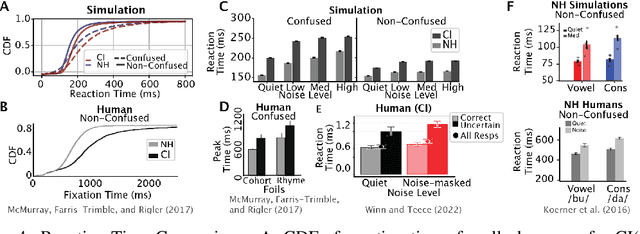

Abstract: Cochlear implants (CIs) are arguably the most successful neural implants, having restored hearing to over one million people worldwide. While CI research has focused on modeling cochlear activations in response to low-level acoustic features, we hypothesize that the success of these implants is due in large part to the upstream network, which extracts useful features from a degraded signal and uses learned statistics of language to resolve it. In this work, we use the deep neural network (DNN) DeepSpeech2 as a paradigm to investigate how natural and cochlear implant-based inputs are processed over time. We generate naturalistic and CI-like inputs from spoken sentences and compare model performance with human performance on analogous phoneme recognition tests. Our model reproduces the error patterns in reaction time and the phoneme confusion patterns under noise conditions reported in studies of normal-hearing and CI participants. We then use interpretability techniques to determine where and when confusions arise when processing naturalistic and CI-like inputs. We find that the dynamics over time in each layer are affected by context as well as by input type. The dynamics of all phonemes diverge during confusion and comprehension within the same time window, which is temporally shifted backward in each layer of the network. This signal is modulated during the processing of CI-like inputs in a way that resembles changes in human EEG signals in the auditory stream. This reduction likely relates to the reduction of encoded phoneme identity. These findings suggest that we have a viable model with which to explore the loss of speech-related information over time, and that we can use it to find population-level encoding signals to target when optimizing cochlear implant inputs, in order to improve the encoding of essential speech-related information and, in turn, perception.
