Melissa D. Aczon

Predicting High-Flow Nasal Cannula Failure in an ICU Using a Recurrent Neural Network with Transfer Learning and Input Data Perseveration: A Retrospective Analysis

Nov 20, 2021

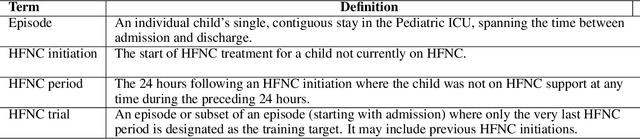

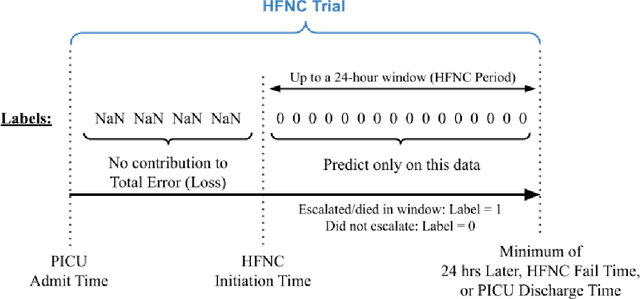

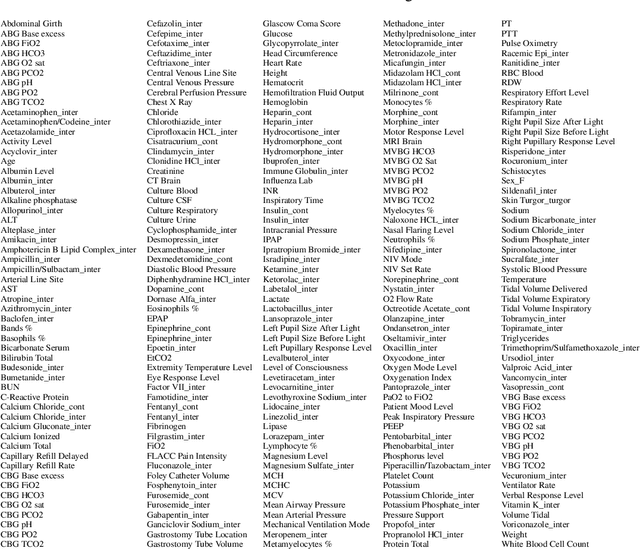

Abstract: High-flow nasal cannula (HFNC) provides non-invasive respiratory support for critically ill children, who may tolerate it more readily than other non-invasive ventilation (NIV) techniques. Timely prediction of HFNC failure can provide an indication for increasing respiratory support. This work developed and compared machine learning models to predict HFNC failure. A retrospective study was conducted using electronic medical record (EMR) data from patients admitted to a tertiary pediatric ICU from January 2010 to February 2020. A Long Short-Term Memory (LSTM) model was trained to generate a continuous prediction of HFNC failure. Performance was assessed using the area under the receiver operating characteristic curve (AUROC) at various times following HFNC initiation. The sensitivity, specificity, and positive and negative predictive values (PPV, NPV) of predictions at two hours after HFNC initiation were also evaluated. These metrics were also computed in a cohort with primarily respiratory diagnoses. A total of 834 HFNC trials [455 training, 173 validation, 206 test] met the inclusion criteria, of which 175 [103, 30, 42] (21.0%) escalated to NIV or intubation. The LSTM models trained with transfer learning generally performed better than the logistic regression (LR) models, with the best LSTM model achieving an AUROC of 0.78, vs 0.66 for the LR, two hours after initiation. Machine learning models trained using EMR data were able to identify children at risk for failing HFNC within 24 hours of initiation. LSTM models that incorporated transfer learning, input data perseveration and ensembling performed better than the LR and standard LSTM models.
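The evaluation metrics named in this abstract can be illustrated with a minimal Python sketch. It is not the authors' code: the function names `auroc` and `threshold_metrics`, the 0.5 threshold, and the toy labels and scores are assumptions made up for illustration. AUROC is computed here as the fraction of (failure, non-failure) pairs in which the failure case receives the higher score, with ties counted as half.

```python
from typing import Dict, Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as the probability that a positive case outscores a negative one."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def threshold_metrics(
    labels: Sequence[int], scores: Sequence[float], threshold: float
) -> Dict[str, float]:
    """Sensitivity, specificity, PPV and NPV when score >= threshold means 'predicted failure'."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)
    tn = sum(1 for y, s in pairs if y == 0 and s < threshold)

    def ratio(num: int, den: int) -> float:
        # An undefined ratio (empty denominator) is reported as NaN.
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


if __name__ == "__main__":
    # Made-up labels (1 = HFNC failure) and model scores; not study data.
    toy_labels = [1, 1, 0, 0, 0, 1]
    toy_scores = [0.9, 0.4, 0.3, 0.6, 0.1, 0.8]
    print(auroc(toy_labels, toy_scores))
    print(threshold_metrics(toy_labels, toy_scores, threshold=0.5))
```

The pairwise AUROC loop is quadratic in the number of samples, which is acceptable for a cohort of a few hundred trials; a rank-based implementation would be preferable for larger data.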

Interpreting a Recurrent Neural Network Model for ICU Mortality Using Learned Binary Masks

Jun 06, 2019

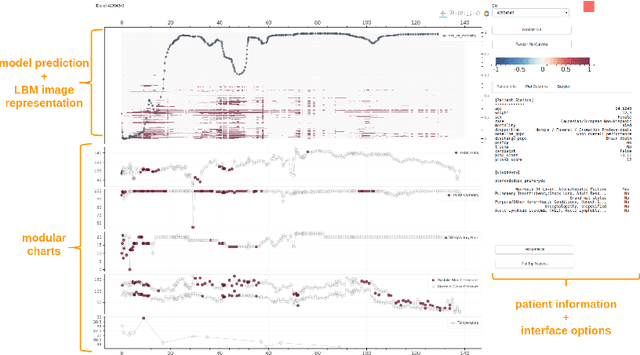

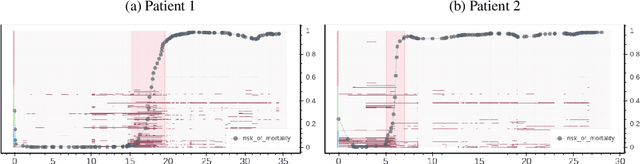

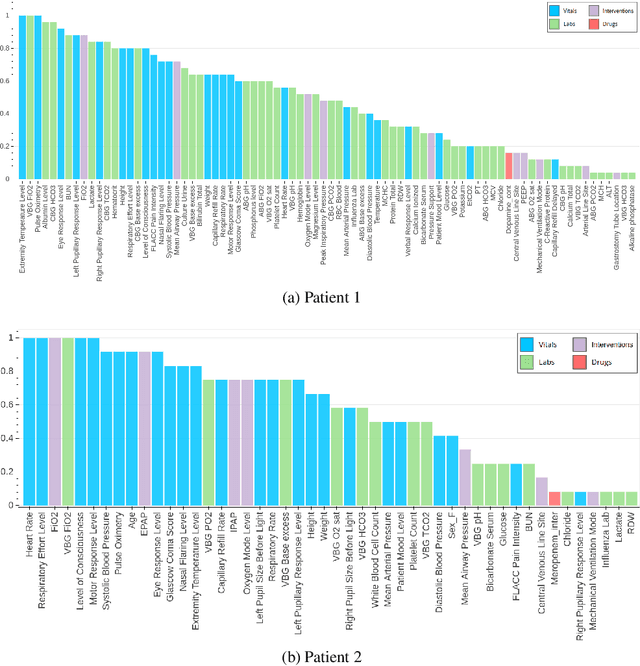

Abstract: An attribution method was developed to interpret a recurrent neural network (RNN) trained to predict a child's risk of ICU mortality using multi-modal, time series data in the Electronic Medical Records. By learning a sparse, binary mask that highlights salient features of the input data, critical features determining an individual patient's severity of illness could be identified. The method, called Learned Binary Masks (LBM), demonstrated that the RNN used different feature sets specific to each patient's illness. Further, the highlighted features aligned with clinical intuition about the patients' disease trajectories. LBM was also used to identify the most salient features across the model, analogous to the "feature importance" computed for a Random Forest. This measure of the RNN's feature importance was further used to select the 25% most used features for training a second RNN model. Interestingly, but not surprisingly, the second model maintained similar performance to the model trained on all features. LBM is data-agnostic and can be used to interpret the predictions of any differentiable model.
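The general idea of learning a sparse mask over input features can be sketched in a few lines of Python. This is an illustration under stated assumptions and not the LBM method itself: the abstract does not give the objective or the optimization procedure, so the sigmoid relaxation of the mask, the squared-error fidelity term, the `sparsity` penalty, the stand-in logistic model, and the name `learn_binary_mask` are all hypothetical choices. A real application would mask the inputs of the trained RNN and rely on an automatic differentiation framework rather than a hand-derived gradient.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def learn_binary_mask(
    x: Sequence[float],
    weights: Sequence[float],
    bias: float,
    sparsity: float = 0.05,
    lr: float = 5.0,
    steps: int = 1000,
) -> List[int]:
    """Learn a 0/1 mask over x that preserves a logistic model's output.

    Assumed objective (not from the source):
        (f(mask * x) - f(x))**2 + sparsity * mean(mask)
    where mask = sigmoid(theta) is a continuous relaxation, thresholded at 0.5.
    """
    n = len(x)
    # Prediction of the stand-in model on the unmasked input.
    target = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
    theta = [2.0] * n  # start with every feature mostly kept

    for _ in range(steps):
        mask = [sigmoid(t) for t in theta]
        z = sum(w * m * v for w, m, v in zip(weights, mask, x)) + bias
        pred = sigmoid(z)
        # Derivative of the fidelity term with respect to the pre-activation z.
        dfidelity_dz = 2.0 * (pred - target) * pred * (1.0 - pred)
        for i in range(n):
            grad_mask = dfidelity_dz * weights[i] * x[i] + sparsity / n
            # Chain rule through mask_i = sigmoid(theta_i).
            theta[i] -= lr * grad_mask * mask[i] * (1.0 - mask[i])

    # Binarize the relaxed mask.
    return [1 if sigmoid(t) > 0.5 else 0 for t in theta]


if __name__ == "__main__":
    # Toy model in which only the first feature influences the prediction.
    print(learn_binary_mask(x=[1.0, 1.0, 1.0], weights=[4.0, 0.0, 0.0], bias=-2.0))
```

In this toy setting the sparsity penalty pushes the mask entries of features that do not affect the prediction toward zero, while the fidelity term keeps the entry for the influential feature. Averaging such per-sample masks over a dataset would give one possible model-level importance score, again as an assumption rather than the paper's definition.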
