Mel Chekol

Leveraging Pre-trained Language Models for Time Interval Prediction in Text-Enhanced Temporal Knowledge Graphs

Sep 28, 2023

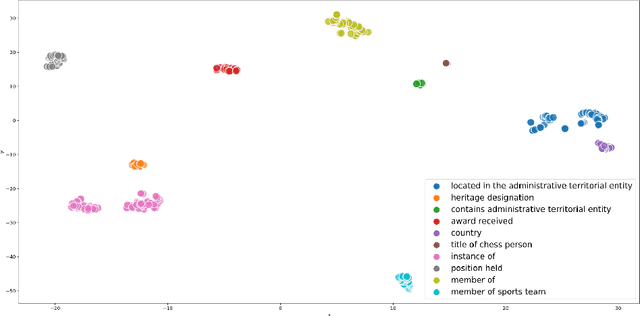

Abstract: Most knowledge graph completion (KGC) methods learn latent representations of the entities and relations of a given graph by mapping them into a vector space. Although the majority of these methods focus on static knowledge graphs, a large number of publicly available KGs contain temporal information stating the time instant or period over which a certain fact has been true. Such graphs are often known as temporal knowledge graphs. Furthermore, knowledge graphs may also contain textual descriptions of entities and relations. Static KGC methods take neither temporal information nor textual descriptions into account during representation learning; they leverage only the structural information of the graph. Recently, some studies have used temporal information to improve link prediction, yet they do not exploit textual descriptions and do not support inductive inference (prediction on entities that have not been seen in training). We propose a novel framework called TEMT that exploits the power of pre-trained language models (PLMs) for text-enhanced temporal knowledge graph completion. The knowledge stored in the parameters of a PLM allows TEMT to produce rich semantic representations of facts and to generalize to previously unseen entities. TEMT leverages the textual and temporal information available in a KG, treats them separately, and fuses them to obtain plausibility scores for facts. Unlike previous approaches, TEMT effectively captures dependencies across different time points and enables predictions on unseen entities. To assess the performance of TEMT, we carried out several experiments, including time interval prediction in both transductive and inductive settings, and triple classification. The experimental results show that TEMT is competitive with the state of the art.

Leveraging Static Models for Link Prediction in Temporal Knowledge Graphs

Jun 29, 2021

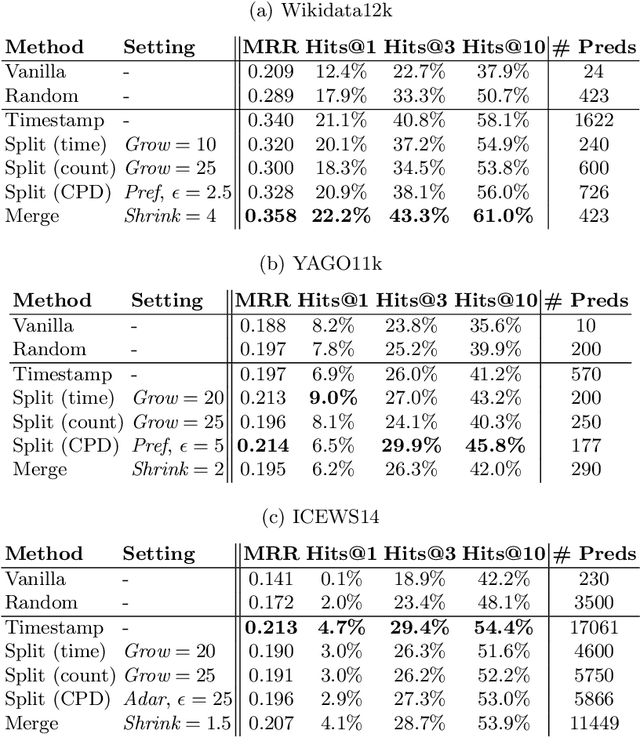

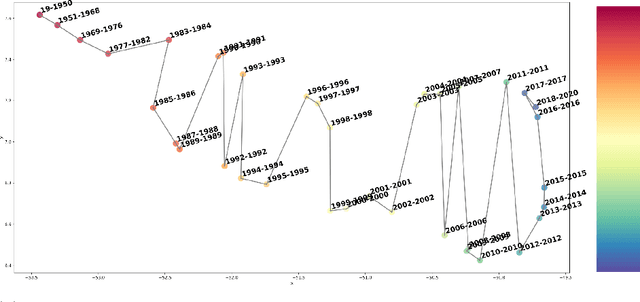

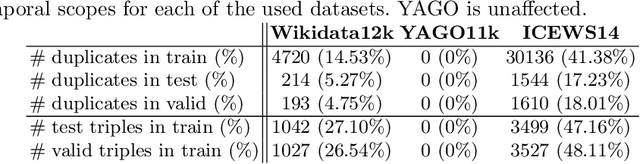

Abstract: The inclusion of temporal scopes of facts in knowledge graph embedding (KGE) presents significant opportunities for improving the resulting embeddings, and consequently for increased performance in downstream applications. Yet, little research effort has focussed on this area, and much of the research that has been carried out reports only marginally improved results compared to models trained without temporal scopes (static models). Furthermore, rather than leveraging existing work on static models, existing approaches introduce new models specific to temporal knowledge graphs. We propose a novel perspective that takes advantage of the power of existing static embedding models by focussing effort on manipulating the data instead. Our method, SpliMe, draws inspiration from the field of signal processing and early work in graph embedding. We show that SpliMe competes with or outperforms the current state of the art in temporal KGE. Additionally, we uncover issues with the procedure currently used to assess the performance of static models on temporal graphs and introduce two ways to counteract them.
