Mazin Hnewa

Integrated Multiscale Domain Adaptive YOLO

Feb 10, 2022

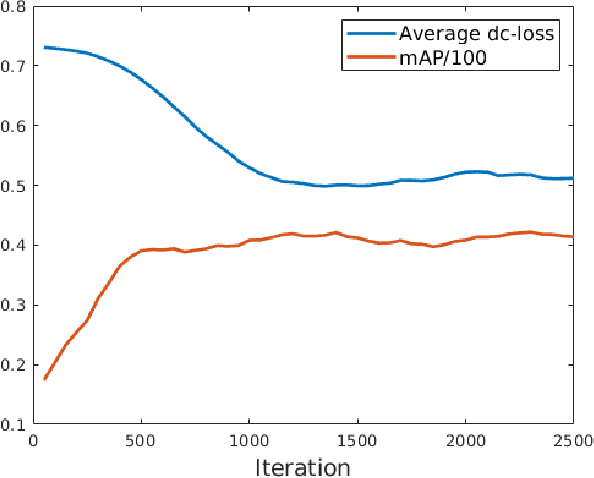

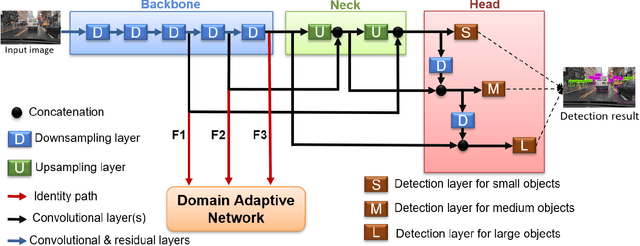

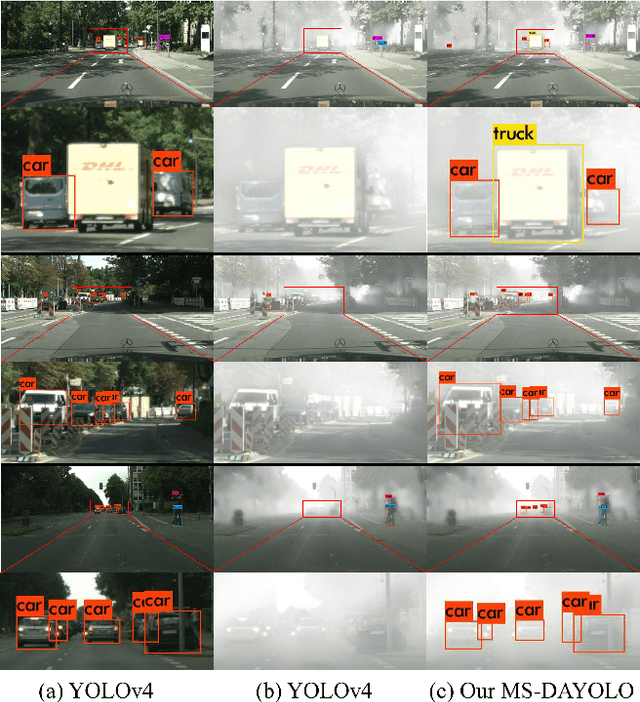

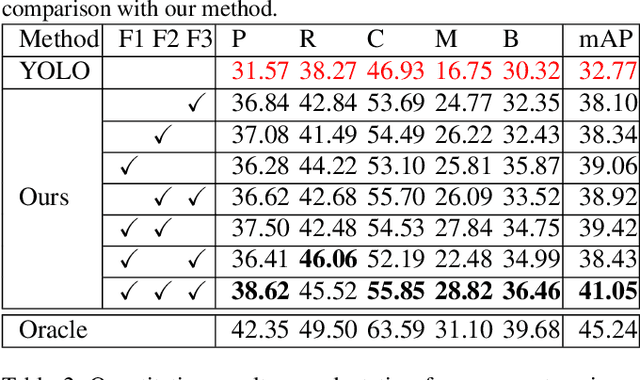

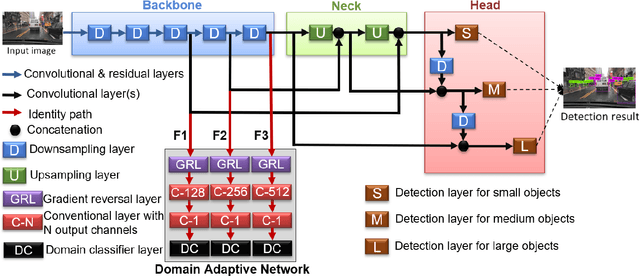

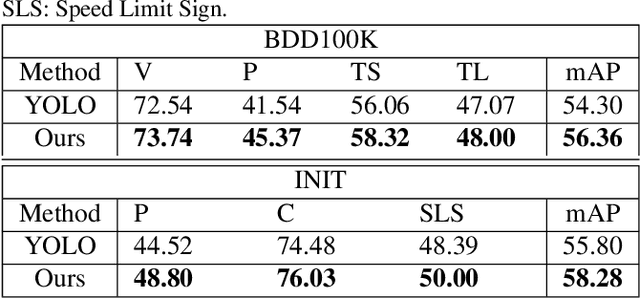

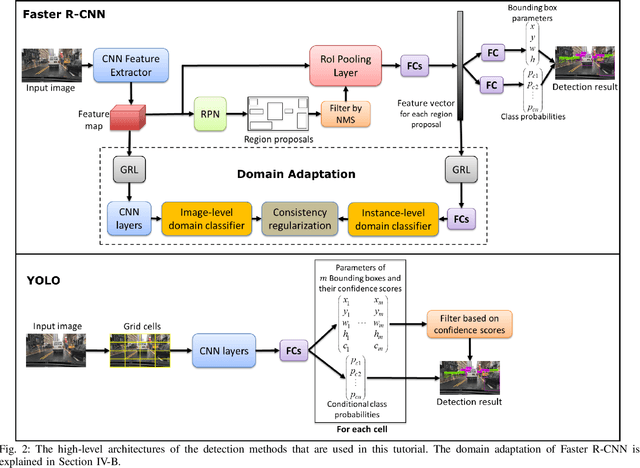

Abstract: The area of domain adaptation has been instrumental in addressing the domain shift problem encountered by many applications. This problem arises from the difference between the distribution of the source data used for training and that of the target data encountered in realistic testing scenarios. In this paper, we introduce a novel MultiScale Domain Adaptive YOLO (MS-DAYOLO) framework that employs multiple domain adaptation paths and corresponding domain classifiers at different scales of the recently introduced YOLOv4 object detector. Building on our baseline multiscale DAYOLO framework, we introduce three novel deep learning architectures for a Domain Adaptation Network (DAN) that generates domain-invariant features. In particular, we propose a Progressive Feature Reduction (PFR) architecture, a Unified Classifier (UC) architecture, and an Integrated architecture. We train and test our proposed DAN architectures in conjunction with YOLOv4 using popular datasets. Our experiments show significant improvements in object detection performance when YOLOv4 is trained using the proposed MS-DAYOLO architectures and tested on target data for autonomous driving applications. Moreover, the MS-DAYOLO framework achieves an order-of-magnitude improvement in real-time speed relative to Faster R-CNN solutions while providing comparable object detection performance.

Multiscale Domain Adaptive YOLO for Cross-Domain Object Detection

Jun 02, 2021

Abstract: The area of domain adaptation has been instrumental in addressing the domain shift problem encountered by many applications. This problem arises from the difference between the distribution of the source data used for training and that of the target data encountered in realistic testing scenarios. In this paper, we introduce a novel MultiScale Domain Adaptive YOLO (MS-DAYOLO) framework that employs multiple domain adaptation paths and corresponding domain classifiers at different scales of the recently introduced YOLOv4 object detector to generate domain-invariant features. We train and test our proposed method using popular datasets. Our experiments show significant improvements in object detection performance when YOLOv4 is trained using the proposed MS-DAYOLO and tested on target data representing challenging weather conditions for autonomous driving applications.
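
As a rough illustration of the idea of attaching one domain classifier per feature scale, the sketch below sums a binary cross-entropy domain-classification loss over several scales. It is not the authors' implementation: the linear per-location classifier, the loss form, the label convention (0 = source, 1 = target), and all names (`domain_bce`, `multiscale_domain_loss`) are assumptions made for illustration. How the backbone features are then pushed toward domain invariance is not specified in the abstract and is not shown here.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def domain_bce(features, weights, bias, domain_label):
    """Mean binary cross-entropy of a linear domain classifier at one scale.

    features: list of feature vectors, one per spatial location (hypothetical layout)
    domain_label: 0 for source images, 1 for target images (assumed convention)
    """
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for vec in features:
        p = sigmoid(sum(w * x for w, x in zip(weights, vec)) + bias)
        total -= domain_label * math.log(p + eps) + (1 - domain_label) * math.log(1 - p + eps)
    return total / len(features)

def multiscale_domain_loss(features_per_scale, classifiers, domain_label):
    """Sum the per-scale domain losses; classifiers is a list of (weights, bias) pairs."""
    return sum(
        domain_bce(feats, w, b, domain_label)
        for feats, (w, b) in zip(features_per_scale, classifiers)
    )

# Toy usage: three scales, each with a different number of locations and channels.
features_per_scale = [
    [[0.2, -0.1], [0.4, 0.3], [0.0, 0.5], [0.1, 0.1]],  # finest scale, 4 locations
    [[0.3, 0.2, -0.4], [0.1, 0.0, 0.2]],                # middle scale, 2 locations
    [[0.5, -0.2, 0.1, 0.0]],                            # coarsest scale, 1 location
]
classifiers = [([0.0, 0.0], 0.0), ([0.0, 0.0, 0.0], 0.0), ([0.0, 0.0, 0.0, 0.0], 0.0)]
loss = multiscale_domain_loss(features_per_scale, classifiers, domain_label=1)
```

With untrained (all-zero) classifiers every location is scored as 0.5, so each scale contributes about ln 2 to the total regardless of the domain label.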

Object Detection under Rainy Conditions for Autonomous Vehicles

Jul 10, 2020

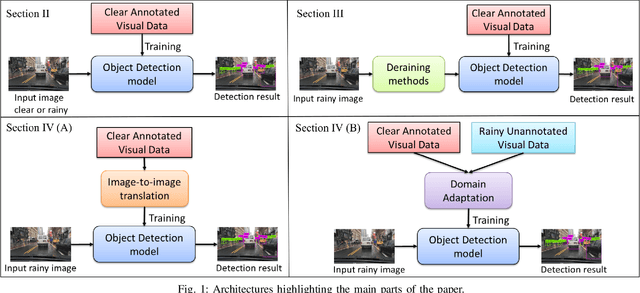

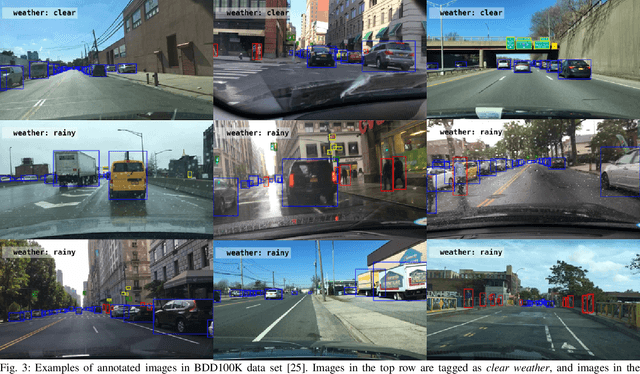

Abstract: Advanced automotive active-safety systems in general, and autonomous vehicles in particular, rely heavily on visual data to classify and localize objects such as pedestrians, traffic signs and lights, and other nearby cars, to help the vehicles maneuver safely in their environments. However, the performance of object detection methods can degrade significantly under challenging weather scenarios, including rainy conditions. Despite major advancements in the development of deraining approaches, the impact of rain on object detection has largely been understudied, especially in the context of autonomous driving. The main objective of this paper is to present a tutorial on state-of-the-art and emerging techniques that represent leading candidates for mitigating the influence of rainy conditions on an autonomous vehicle's ability to detect objects. Our goal includes surveying and analyzing the performance of object detection methods trained and tested using visual data captured under clear and rainy conditions. Moreover, we survey and evaluate the efficacy and limitations of leading deraining approaches, deep-learning-based domain adaptation, and image translation frameworks that are being considered for addressing the problem of object detection under rainy conditions. Experimental results for a variety of the surveyed techniques are presented as part of this tutorial.
