Mayura Manawadu

Voice-Assisted Real-Time Traffic Sign Recognition System Using Convolutional Neural Network

Apr 11, 2024

Abstract: Traffic signs are important in communicating information to drivers. Comprehension of traffic signs is therefore essential for road safety, and ignoring or misreading them may result in road accidents. Traffic sign detection has been in the research spotlight over the past few decades. Real-time and accurate detection is a prerequisite for a robust traffic sign detection system, which is yet to be achieved. This study presents a voice-assisted real-time traffic sign recognition system that is capable of assisting drivers. The system consists of two subsystems. First, the detection and recognition of traffic signs are carried out using a trained Convolutional Neural Network (CNN). After the specific traffic sign is recognized, it is narrated to the driver as a voice message using a text-to-speech engine. An efficient CNN model for a benchmark dataset is developed for real-time detection and recognition using Deep Learning techniques. The advantage of this system is that even if the driver misses a traffic sign, does not look at it, or is unable to comprehend it, the system detects the sign and narrates it to the driver. A system of this type is also important in the development of autonomous vehicles.

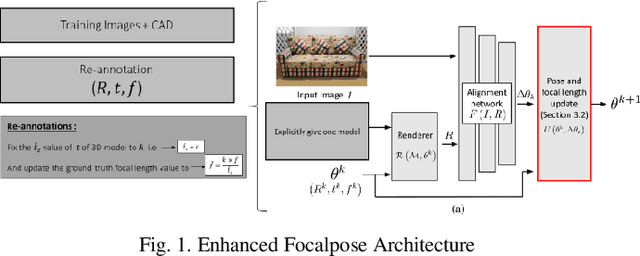

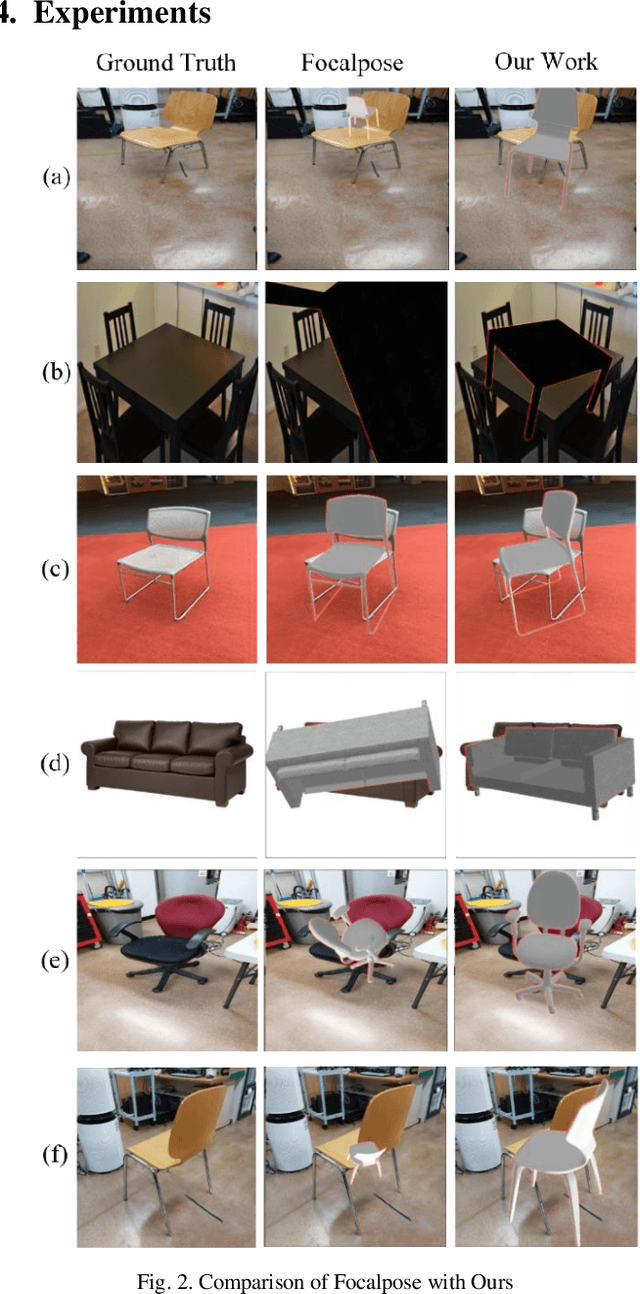

Advancing 6D Pose Estimation in Augmented Reality -- Overcoming Projection Ambiguity with Uncontrolled Imagery

Mar 20, 2024

Abstract: This study addresses the challenge of accurate 6D pose estimation in Augmented Reality (AR), a critical component for seamlessly integrating virtual objects into real-world environments. Our research primarily addresses the difficulty of estimating 6D poses from uncontrolled RGB images, a common scenario in AR applications, which lack metadata such as focal length. We propose a novel approach that strategically decomposes the estimation of z-axis translation and focal length, leveraging the neural render-and-compare strategy inherent in the FocalPose architecture. This methodology not only streamlines the 6D pose estimation process but also significantly enhances the accuracy of 3D object overlaying in AR settings. Our experimental results demonstrate a marked improvement in 6D pose estimation accuracy, with promising applications in manufacturing and robotics, where the precise overlay of AR visualizations and the advancement of robotic vision systems stand to benefit substantially from our findings.
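The projection ambiguity named in the title can be illustrated with a small sketch. It is not the method of the paper or of FocalPose; it only assumes a standard pinhole camera, and the cube size, focal lengths, and distances below are made-up values chosen for illustration. Doubling both the focal length and the z-axis translation yields nearly the same image, which is why the two quantities are hard to separate when the focal length is unknown.

```python
def project(points, focal, tz):
    """Pinhole projection of object-frame points shifted by tz along the optical axis.

    Returns image coordinates relative to the principal point, in pixels.
    """
    return [(focal * x / (z + tz), focal * y / (z + tz)) for x, y, z in points]


# Corners of a hypothetical 0.2 m cube centred on the object origin.
cube = [(sx * 0.1, sy * 0.1, sz * 0.1)
        for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]

# Two different (focal length, distance) pairs with the same ratio focal / tz.
near = project(cube, focal=600.0, tz=2.0)    # shorter lens, closer object
far = project(cube, focal=1200.0, tz=4.0)    # longer lens, object twice as far

# Largest per-coordinate disagreement between the two projections, in pixels.
max_diff = max(
    max(abs(u1 - u2), abs(v1 - v2))
    for (u1, v1), (u2, v2) in zip(near, far)
)
print(f"largest pixel difference: {max_diff:.2f}")
```

In this toy setup the cube spans roughly 60 pixels, yet the two projections differ by less than one pixel. The only signal separating the two configurations is the slight perspective distortion across the object's depth, which shrinks further as the object becomes flatter or more distant.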
