Maxime Gabella

Implementing Learning Principles with a Personal AI Tutor: A Case Study

Sep 10, 2023

Abstract: Effective learning strategies based on principles like personalization, retrieval practice, and spaced repetition are often challenging to implement due to practical constraints. Here we explore the integration of AI tutors to complement learning programs in accordance with learning sciences. A semester-long study was conducted at UniDistance Suisse, where an AI tutor app was provided to psychology students taking a neuroscience course (N=51). After automatically generating microlearning questions from existing course materials using GPT-3, the AI tutor developed a dynamic neural-network model of each student's grasp of key concepts. This enabled the implementation of distributed retrieval practice, personalized to each student's individual level and abilities. The results indicate that students who actively engaged with the AI tutor achieved significantly higher grades. Moreover, active engagement led to an average improvement of up to 15 percentile points compared to a parallel course without an AI tutor. Additionally, the grasp strongly correlated with the exam grade, thus validating the relevance of neural-network predictions. This research demonstrates the ability of personal AI tutors to model human learning processes and effectively enhance academic performance. By integrating AI tutors into their programs, educators can offer students personalized learning experiences grounded in the principles of learning sciences, thereby addressing the challenges associated with implementing effective learning strategies. These findings contribute to the growing body of knowledge on the transformative potential of AI in education.

Topology of Learning in Artificial Neural Networks

Feb 21, 2019

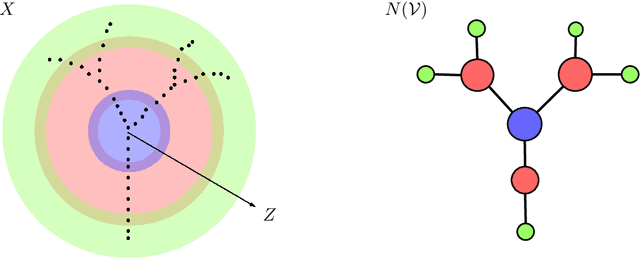

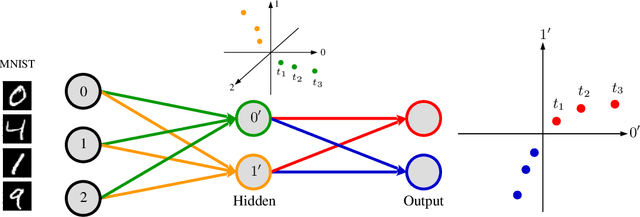

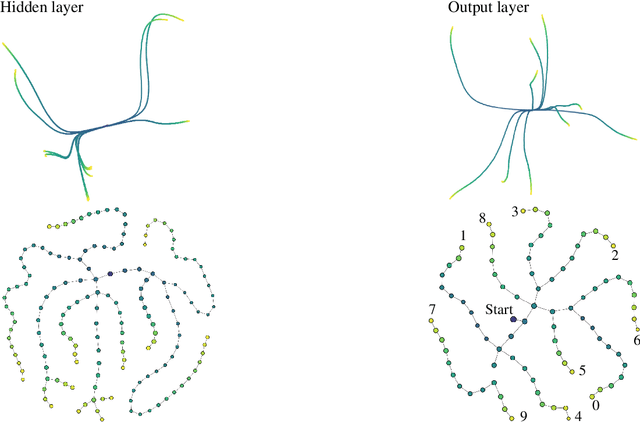

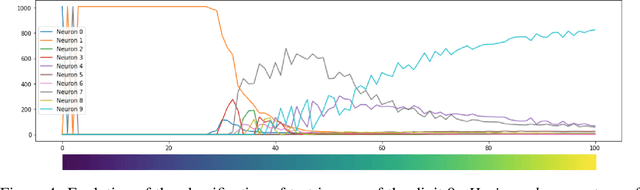

Abstract: Understanding how neural networks learn remains one of the central challenges in machine learning research. From random at the start of training, the weights of a neural network evolve in such a way as to be able to perform a variety of tasks, like classifying images. Here we study the emergence of structure in the weights by applying methods from topological data analysis. We train simple feedforward neural networks on the MNIST dataset and monitor the evolution of the weights. When initialized to zero, the weights follow trajectories that branch off recurrently, thus generating trees that describe the growth of the effective capacity of each layer. When initialized to tiny random values, the weights evolve smoothly along two-dimensional surfaces. We show that natural coordinates on these learning surfaces correspond to important factors of variation.
