Max Losch

Certified Robust Models with Slack Control and Large Lipschitz Constants

Sep 12, 2023

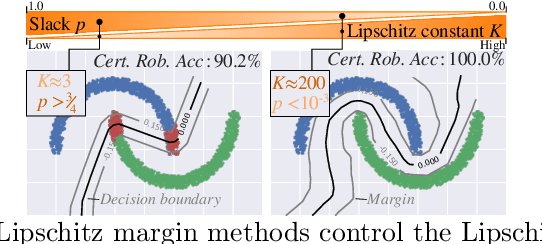

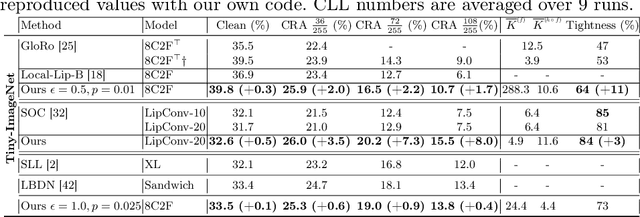

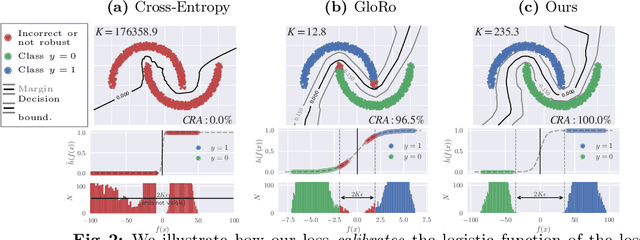

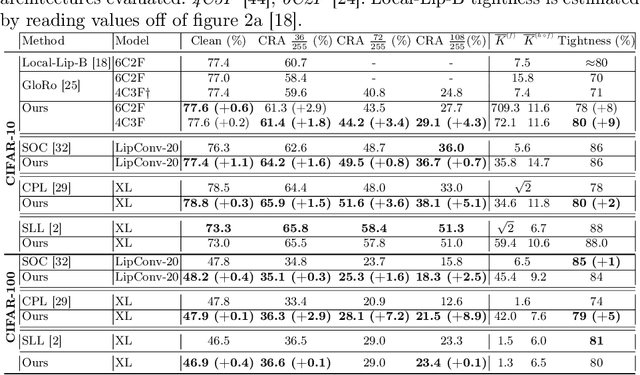

Abstract: Despite recent success, state-of-the-art learning-based models remain highly vulnerable to input changes such as adversarial examples. To obtain certifiable robustness against such perturbations, recent work considers Lipschitz-based regularizers or constraints while at the same time increasing the prediction margin. Unfortunately, this comes at the cost of significantly decreased accuracy. In this paper, we propose a Calibrated Lipschitz-Margin Loss (CLL) that addresses this issue and improves certified robustness by tackling two problems. First, commonly used margin losses do not adjust their penalties to the shrinking output distribution caused by minimizing the Lipschitz constant $K$. Second, and most importantly, we observe that minimization of $K$ can lead to overly smooth decision functions, which limits the model's complexity and thus reduces accuracy. Our CLL addresses these issues by explicitly calibrating the loss w.r.t. margin and Lipschitz constant, thereby establishing full control over slack and improving robustness certificates even with larger Lipschitz constants. On CIFAR-10, CIFAR-100 and Tiny-ImageNet, our models consistently outperform losses that leave the constant unattended. On CIFAR-100 and Tiny-ImageNet, CLL improves upon state-of-the-art deterministic $L_2$ robust accuracies. In contrast to current trends, we unlock the potential of much smaller models without $K=1$ constraints.
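
The interplay between margin and Lipschitz constant mentioned in the abstract can be illustrated with the standard margin-based $L_2$ certificate. This is a minimal sketch, not the CLL loss itself: it assumes the whole logit vector is $K$-Lipschitz in $L_2$, in which case the top-1 prediction cannot change within radius margin / ($\sqrt{2} K$). The function name `certified_radius` and the example values are hypothetical.

```python
import math


def certified_radius(logits, lipschitz_k):
    """L2 radius within which the top-1 class provably stays the same.

    Assumption: the logit function is lipschitz_k-Lipschitz in L2, so the
    gap between any two logits changes by at most sqrt(2) * K * ||delta||.
    """
    if lipschitz_k <= 0:
        raise ValueError("Lipschitz constant must be positive")
    ranked = sorted(logits, reverse=True)
    margin = ranked[0] - ranked[1]  # gap between top-1 and runner-up logit
    return margin / (math.sqrt(2.0) * lipschitz_k)


# Halving K also halves the attainable logit gaps, so the radius is unchanged.
# A loss with a fixed margin target ignores this rescaling of the outputs.
r_large_k = certified_radius([4.0, 0.0, -1.0], lipschitz_k=2.0)
r_small_k = certified_radius([2.0, 0.0, -0.5], lipschitz_k=1.0)
```

The two calls give the same radius because only the ratio of margin to $K$ matters for the certificate, which is why a penalty defined on the raw margin alone does not track robustness as $K$ shrinks.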

Interpretability Beyond Classification Output: Semantic Bottleneck Networks

Jul 28, 2019

Abstract: Today's deep learning systems deliver high performance through end-to-end training, but they are hard to interpret. To address this issue, we propose Semantic Bottleneck Networks (SBN): deep networks with semantically interpretable intermediate layers that all downstream results are based on. As a consequence, the basis of the final prediction is transparent to the engineer, and failure cases and modes can be analyzed and avoided by high-level reasoning. We present a case study on street scene segmentation to demonstrate the feasibility and power of SBN. In particular, we start from a well-performing classic deep network, which we adapt to house an SB-Layer containing task-related semantic concepts (such as object parts and materials). Importantly, we can recover state-of-the-art performance despite a drastic dimensionality reduction from 1000s (non-semantic feature) to 10s (semantic concept) channels. Additionally, we show how the activations of the SB-Layer can be used both for interpreting failure cases of the network and for predicting the confidence of the resulting output. For example, we show, for the first time, interpretable segmentation results for most predictions at over 99% accuracy.
