Matthew Burlick

Towards Evaluating Driver Fatigue with Robust Deep Learning Models

Jul 16, 2020

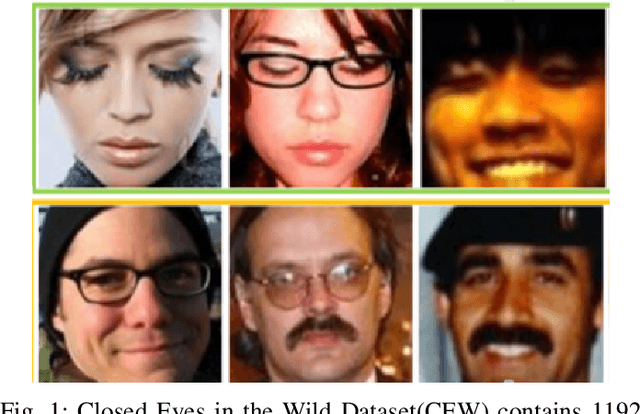

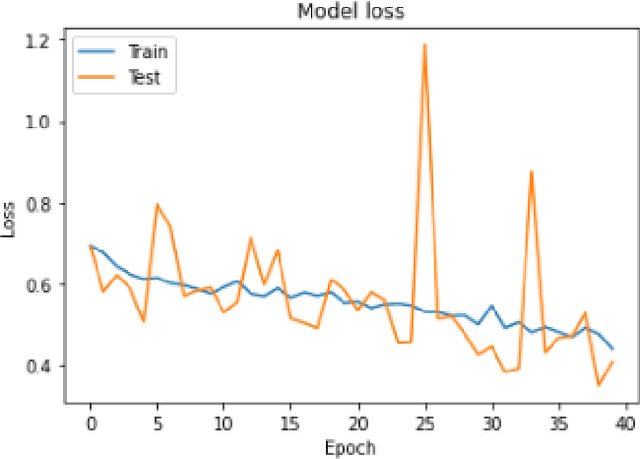

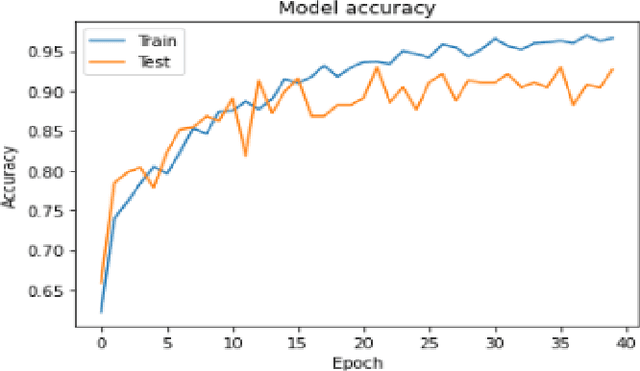

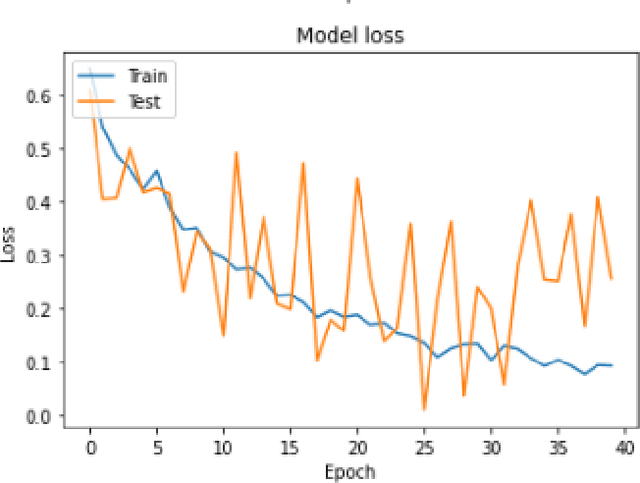

Abstract: In this paper, we explore several deep-learning-based approaches to detecting driver fatigue. Drowsy driving results in approximately 72,000 crashes and 44,000 injuries every year in the US, and detecting drowsiness and alerting the driver can save many lives. Among the many approaches to fatigue detection, one is to detect eye closedness. We propose a framework that detects eye closedness in a captured camera frame as a gateway to detecting drowsiness. We explore two datasets for this task: we develop an eye model using a new Eye-blink dataset and a face model using the Closed Eyes in the Wild (CEW) dataset. We also explore techniques for making the models more robust by adding noise. We achieve 95.84% accuracy with our eye model and 80.01% accuracy with our face model, both of which detect eye blinking. We also find that adding noise to the training data and applying data augmentation improves the face model's accuracy by 6%. We hope this work will be useful to the field of driver fatigue detection and help avoid vehicle accidents related to drowsy driving.
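The abstract mentions adding noise to the training data and applying data augmentation, without giving details. As a rough illustration only, the sketch below shows one common way this is done: additive Gaussian noise plus a horizontal mirror. The function name `augment_with_noise`, the noise level `sigma`, and the choice of mirroring are assumptions made for this example, not the authors' actual pipeline.

```python
import numpy as np

def augment_with_noise(images, sigma=0.05, flip=True, seed=0):
    """Return the original batch followed by noisy (and optionally mirrored) copies.

    images: float array of shape (N, H, W) with pixel values in [0, 1].
    sigma:  standard deviation of the Gaussian noise (assumed value).
    """
    rng = np.random.default_rng(seed)
    # Additive Gaussian noise, clipped back into the valid pixel range.
    noisy = np.clip(images + rng.normal(0.0, sigma, size=images.shape), 0.0, 1.0)
    parts = [images, noisy]
    if flip:
        # Horizontal mirror: reverse the width axis.
        parts.append(images[:, :, ::-1])
    return np.concatenate(parts, axis=0)

# Usage: a batch of 4 grayscale 24x24 crops grows to 12 training samples.
batch = np.full((4, 24, 24), 0.5)
augmented = augment_with_noise(batch)
```

Labels would need to be repeated in the same order as the concatenated parts; that step is omitted here.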

Adversarial Attacks against Neural Networks in Audio Domain: Exploiting Principal Components

Jul 14, 2020

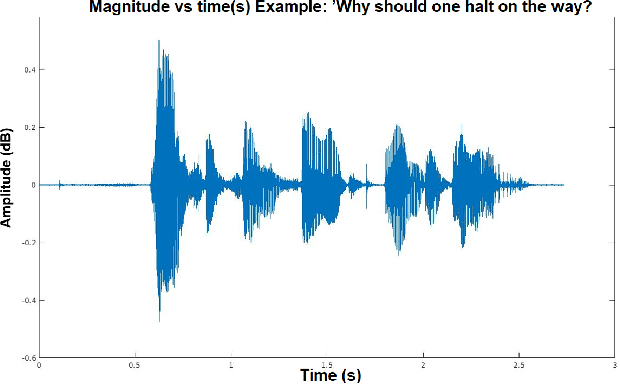

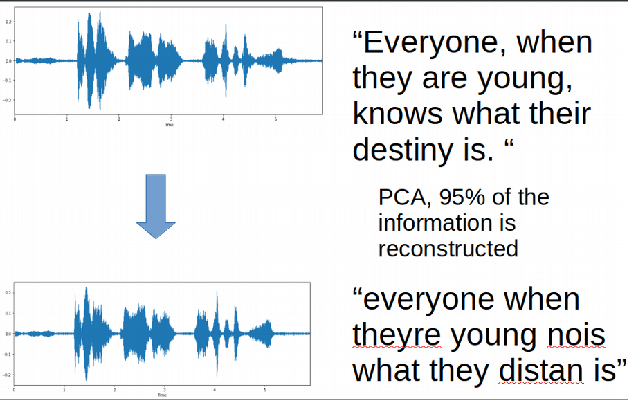

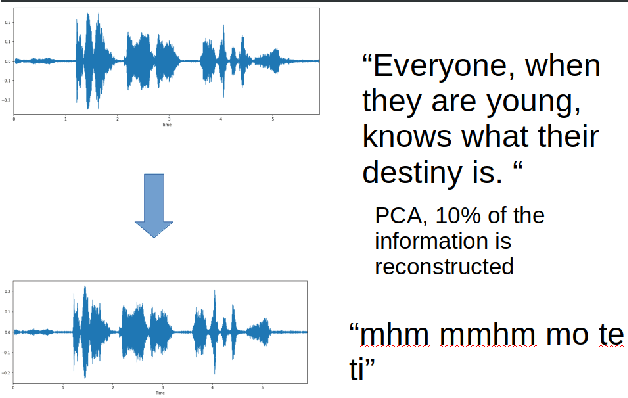

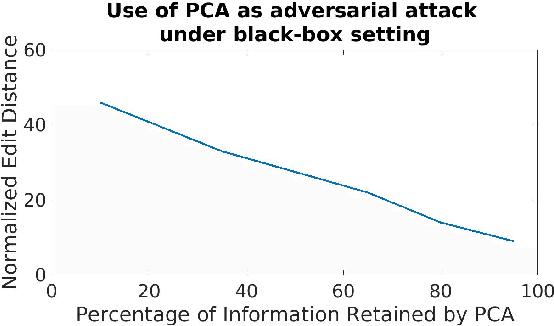

Abstract: Adversarial attacks are inputs that are similar to the original inputs but have been altered on purpose. Speech-to-text neural networks that are widely used today are prone to misclassifying adversarial inputs. In this study, we first investigate targeted adversarial attacks by altering waveforms from the Common Voice dataset. We craft adversarial waveforms using the Connectionist Temporal Classification (CTC) loss function and attack DeepSpeech, a speech-to-text neural network implemented by Mozilla. We achieve a 100% adversarial success rate (zero successful classifications by DeepSpeech) on all 25 adversarial waveforms that we crafted. Second, we investigate the use of PCA as a defense mechanism against adversarial attacks. We reduce dimensionality by applying PCA to the 25 attacks that we created and test them against DeepSpeech. We observe zero successful classifications by DeepSpeech, which suggests that PCA is not a good defense mechanism in the audio domain. Finally, instead of using PCA as a defense mechanism, we use it to craft adversarial inputs in a black-box setting with minimal adversarial knowledge. With no knowledge of the model, its parameters, or its weights, we craft adversarial attacks by applying PCA to samples from the Common Voice dataset and again achieve 100% adversarial success in the black-box setting when tested against DeepSpeech. We also experiment with different percentages of components necessary to result in a classification during the attack process. In all cases, the adversary succeeds.
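The abstract does not say how PCA is applied to a waveform. One plausible reading, sketched below, is to cut the signal into fixed-length frames, project the frames onto the leading principal components, and reconstruct. The function name `pca_reconstruct`, the frame length, and the framing strategy itself are assumptions for illustration; the authors' procedure may differ.

```python
import numpy as np

def pca_reconstruct(waveform, frame_len=256, keep_fraction=0.5):
    """Reconstruct a 1-D waveform from a fraction of its principal components.

    The waveform is split into non-overlapping frames of frame_len samples
    (trailing samples that do not fill a frame are dropped), and PCA is
    computed over the frames via SVD.
    """
    n_frames = len(waveform) // frame_len
    if n_frames < 2:
        raise ValueError("waveform too short for the chosen frame_len")
    frames = np.asarray(waveform[: n_frames * frame_len], dtype=np.float64)
    frames = frames.reshape(n_frames, frame_len)
    mean = frames.mean(axis=0)
    centered = frames - mean
    # Rows of vt are the principal directions, ordered by singular value.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    k = max(1, int(round(keep_fraction * len(s))))
    # Keep only the top-k components, then undo the centering.
    approx = (u[:, :k] * s[:k]) @ vt[:k] + mean
    return approx.reshape(-1)

# Usage: keep half of the components of a synthetic one-second signal.
rng = np.random.default_rng(0)
signal = rng.normal(size=16000)
reduced = pca_reconstruct(signal, frame_len=256, keep_fraction=0.5)
```

Varying `keep_fraction` corresponds loosely to the "percentage of components" mentioned in the abstract; feeding the result to a speech-to-text model is outside the scope of this sketch.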
