Matteo Borrotti

Impact of multi-armed bandit strategies on deep recurrent reinforcement learning

Oct 12, 2023

Abstract: Incomplete knowledge of the environment leads an agent to make decisions under uncertainty. One of the major dilemmas in Reinforcement Learning (RL) is the exploration-exploitation trade-off: an autonomous agent has to balance two contrasting needs in making its decisions, exploiting its current knowledge of the environment to maximize the cumulative reward and exploring actions that improve that knowledge, hopefully leading to higher reward values. Concurrently, another relevant issue regards the full observability of the states, which may not be assumed in all applications, such as when only 2D images are considered as input in an RL approach used for finding the optimal action within a 3D simulation environment. In this work, we address these issues by deploying and testing several techniques to balance the exploration-exploitation trade-off on partially observable systems for predicting steering wheel angles in an autonomous driving scenario. More precisely, the final aim is to investigate the effects of using both stochastic and deterministic multi-armed bandit strategies coupled with a Deep Recurrent Q-Network. Additionally, we adapted and evaluated the impact of an innovative method to improve the learning phase of the underlying Convolutional Recurrent Neural Network. We aim to show that adaptive stochastic methods for exploration better approximate the trade-off between exploration and exploitation since, in general, Softmax and Max-Boltzmann strategies are able to outperform epsilon-greedy techniques.
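The three action-selection strategies named in the abstract can be illustrated with a minimal sketch. It assumes the textbook definitions over a list of estimated action values and is not the paper's implementation; the function names, the `temperature` parameter, and the convention that Max-Boltzmann explores with probability `epsilon` are assumptions made for illustration.

```python
import math
import random


def greedy(q_values):
    """Index of the action with the highest estimated value."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a uniformly random action, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return greedy(q_values)


def boltzmann_probs(q_values, temperature):
    """Softmax (Boltzmann) distribution over actions; temperature must be > 0."""
    m = max(q_values)  # subtract the max for numerical stability
    weights = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]


def softmax_action(q_values, temperature, rng=random):
    """Sample an action in proportion to its Boltzmann probability."""
    probs = boltzmann_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


def max_boltzmann(q_values, epsilon, temperature, rng=random):
    """Assumed convention: explore with probability epsilon by sampling
    from the Boltzmann distribution, otherwise act greedily."""
    if rng.random() < epsilon:
        return softmax_action(q_values, temperature, rng)
    return greedy(q_values)
```

In this sketch, epsilon-greedy explores uniformly, whereas Softmax and Max-Boltzmann weight exploratory choices by the estimated values, so actions with clearly poor estimates are tried less often. Lowering `temperature` concentrates the distribution on the greedy action.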

Bayesian Optimization and Deep Learning for steering wheel angle prediction

Oct 22, 2021

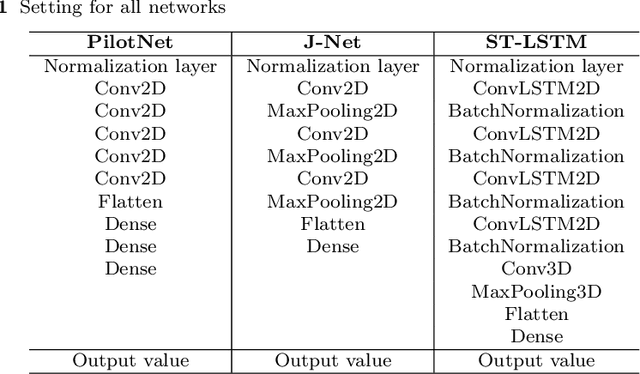

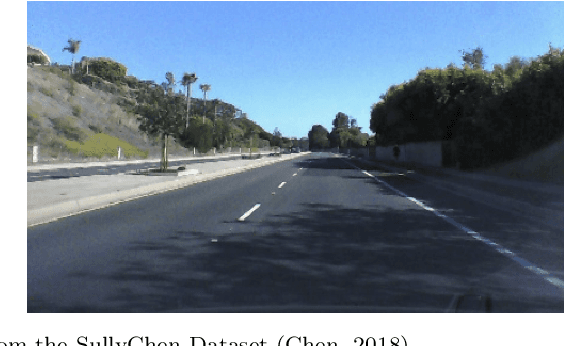

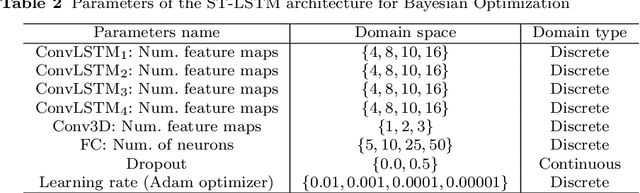

Abstract: Automated driving systems (ADS) have undergone significant improvement in recent years. ADS, and more precisely self-driving car technologies, will change the way we perceive and know the world of transportation systems in terms of user experience, mode choices and business models. The emerging field of Deep Learning (DL) has been successfully applied to the development of innovative ADS solutions. However, singling out the best deep neural network architecture and tuning its hyperparameters are both expensive processes, in terms of time and computational resources. In this work, Bayesian Optimization (BO) is used to optimize the hyperparameters of a Spatiotemporal-Long Short Term Memory (ST-LSTM) network with the aim of obtaining an accurate model for the prediction of the steering angle in an ADS. BO was able to identify, within a limited number of trials, a model, namely BOST-LSTM, which proved the most accurate on a public dataset when compared to classical end-to-end driving models.
